Deep Adversarial Koopman Model for Reaction-Diffusion systems

Jun 09, 2020

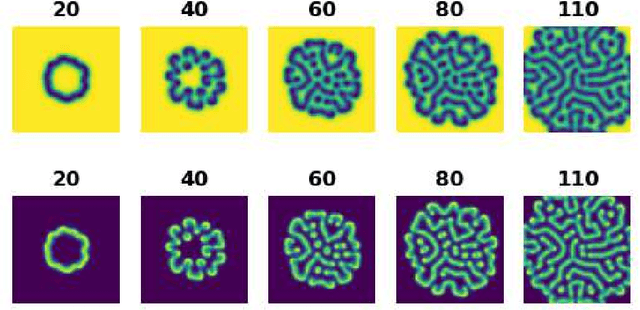

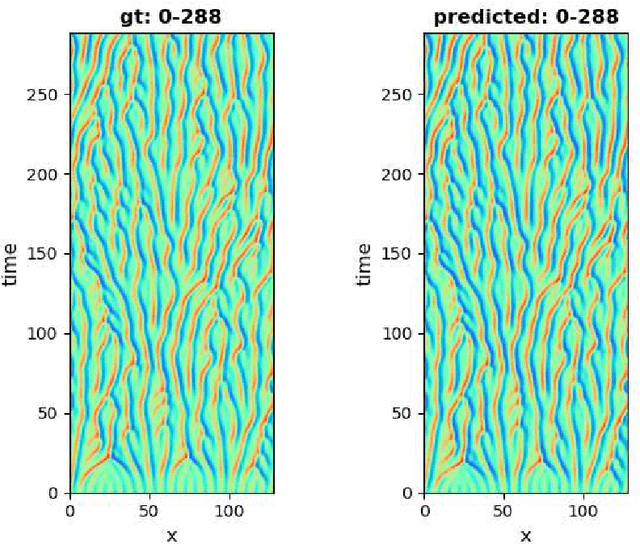

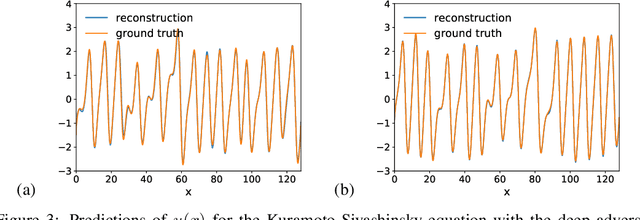

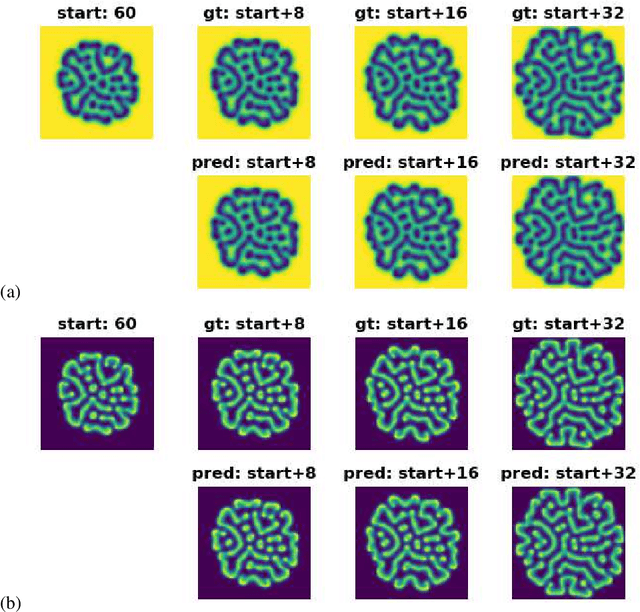

Reaction-diffusion systems are ubiquitous in nature and in engineering applications, and are often modeled using a non-linear system of governing equations. While robust numerical methods exist to solve them, deep learning-based reduced-order models (ROMs) are gaining traction as they use linearized dynamical models to advance the solution in time. One such family of algorithms is based on Koopman theory, and this paper applies this numerical simulation strategy to reaction-diffusion systems. Adversarial and gradient losses are introduced and are found to robustify the predictions. The proposed model is extended to handle missing training data and to recast the problem from a control perspective. The efficacy of these developments is demonstrated for two different reaction-diffusion problems: (1) the Kuramoto-Sivashinsky equation of chaos and (2) the Turing instability using the Gray-Scott model.
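To illustrate what "linearized dynamical models to advance the solution in time" can mean in practice, here is a minimal sketch of the Koopman idea in its simplest form: fit a single linear operator to snapshot pairs by least squares, then roll it forward. This is not the paper's deep adversarial model. There is no learned encoder or decoder and no adversarial or gradient loss, and the function names (`fit_linear_operator`, `rollout`) and the toy rotation-with-decay data are assumptions made purely for illustration.

```python
import numpy as np

def fit_linear_operator(snapshots):
    """Least-squares fit of K such that z[t+1] ~ K @ z[t].

    snapshots: array of shape (n_steps, n_latent), one state per row.
    (Hypothetical helper; in a deep Koopman model the states would be
    encoder outputs rather than raw data.)
    """
    z_now = snapshots[:-1].T   # shape (n_latent, n_steps - 1)
    z_next = snapshots[1:].T   # same states shifted by one time step
    return z_next @ np.linalg.pinv(z_now)

def rollout(K, z0, n_steps):
    """Advance the state n_steps times using only the linear map K."""
    states = [z0]
    for _ in range(n_steps):
        states.append(K @ states[-1])
    return np.stack(states)

# Toy data generated by a known linear map (a slowly decaying rotation),
# standing in for latent trajectories of a real system.
theta = 0.1
K_true = 0.99 * np.array([[np.cos(theta), -np.sin(theta)],
                          [np.sin(theta),  np.cos(theta)]])
data = rollout(K_true, np.array([1.0, 0.0]), 50)

K_fit = fit_linear_operator(data)      # recover the operator from data
pred = rollout(K_fit, data[0], 50)     # predict the full trajectory
```

Because the toy data are exactly linear, the fitted operator matches the generating one. For a non-linear system such as the Kuramoto-Sivashinsky equation, a learned encoder would be needed to map the state into coordinates where a linear operator is a reasonable approximation.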
