Decision Variance in Online Learning

Jul 24, 2018

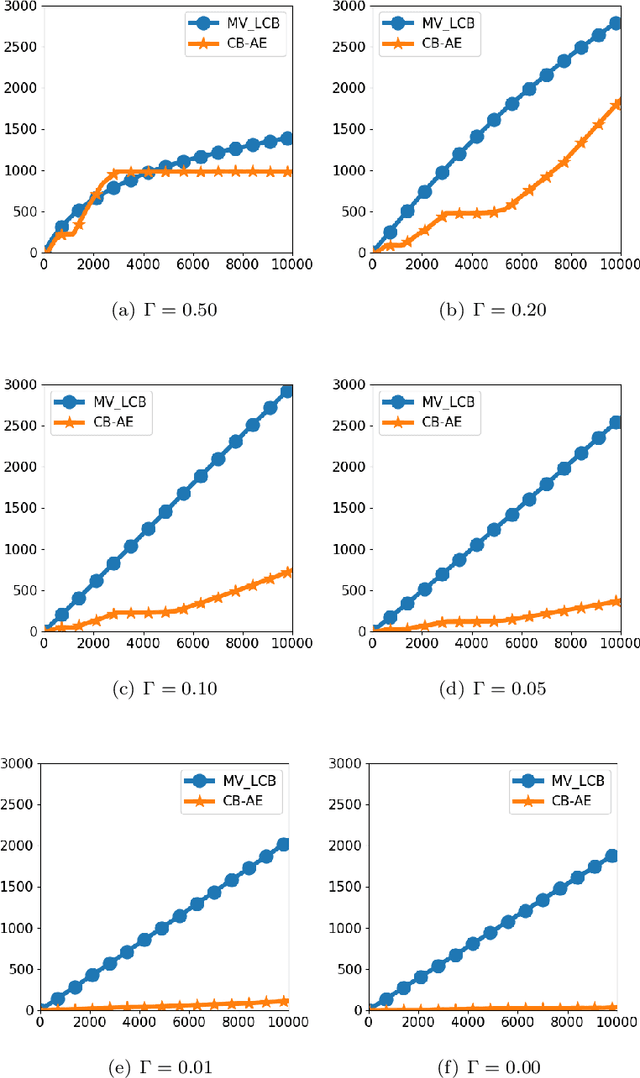

Online learning has classically focused on the expected behaviour of learning policies. Recently, risk-averse online learning has gained much attention. This paper studies a risk-averse multi-armed bandit problem in which the performance of a policy is measured by the mean-variance of its rewards. The variance of the rewards depends on the variance of the underlying processes as well as on the variance of the player's decisions. The performance of two existing policies is analyzed, and new fundamental limitations on risk-averse learning are established. In particular, it is shown that although an $\mathcal{O}(\log T)$ distribution-dependent regret in time $T$ is achievable (similar to the risk-neutral setting), the worst-case (i.e., minimax) regret is lower bounded by $\Omega(T)$ (in contrast to the $\Omega(\sqrt{T})$ lower bound in the risk-neutral setting). The lower bounds are even stronger in that they are proven for online learning with full feedback, where the player observes more than under bandit feedback, so they also apply to the bandit setting.
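To make the idea of decision variance concrete, the sketch below computes the empirical mean-variance of a policy's reward sequence and splits its variance, via the law of total variance, into a process part (variance within each arm) and a decision part (variance caused by switching between arms with different means). It is an illustration under stated assumptions, not the paper's code: the convention MV = variance − ρ·mean (lower is better), the risk parameter `rho`, and the helpers `mean_variance`, `variance_decomposition` and `run_policy` are all hypothetical choices made here.

```python
import math
import random
from collections import defaultdict
from statistics import fmean, pvariance

def mean_variance(rewards, rho):
    # Assumed convention: MV = variance - rho * mean (lower is better);
    # rho trades off expected reward against risk.
    return pvariance(rewards) - rho * fmean(rewards)

def variance_decomposition(history):
    # history: list of (arm, reward) pairs collected by a policy.
    # Law of total variance on the empirical distribution:
    #   total = (avg within-arm variance) + (spread of per-arm means across decisions)
    n = len(history)
    by_arm = defaultdict(list)
    for arm, reward in history:
        by_arm[arm].append(reward)
    overall = fmean(reward for _, reward in history)
    process = sum(len(rs) * pvariance(rs) for rs in by_arm.values()) / n
    decision = sum(len(rs) * (fmean(rs) - overall) ** 2 for rs in by_arm.values()) / n
    return process, decision

def run_policy(choose_arm, arms, horizon, seed=0):
    # choose_arm(t) -> arm index; arms[i](rng) -> sampled reward.
    rng = random.Random(seed)
    history = []
    for t in range(horizon):
        arm = choose_arm(t)
        history.append((arm, arms[arm](rng)))
    return history

# Two hypothetical deterministic arms: each has zero process variance.
arms = [lambda rng: 1.0, lambda rng: 0.0]

stay = run_policy(lambda t: 0, arms, horizon=100)        # always pull arm 0
switch = run_policy(lambda t: t % 2, arms, horizon=100)  # alternate arms

for name, hist in [("stay", stay), ("switch", switch)]:
    rewards = [r for _, r in hist]
    process, decision = variance_decomposition(hist)
    # The two parts always add up to the total empirical variance.
    assert math.isclose(process + decision, pvariance(rewards))
    print(name, mean_variance(rewards, rho=1.0), process, decision)
```

Even though every arm here is deterministic, the alternating policy's reward variance of 0.25 comes entirely from the decision term, while the always-stay policy has zero variance. This is the intuition for why exploration, which forces switching between arms, is penalized under a mean-variance criterion.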