Decentralized MCTS via Learned Teammate Models

Paper and Code

Mar 19, 2020

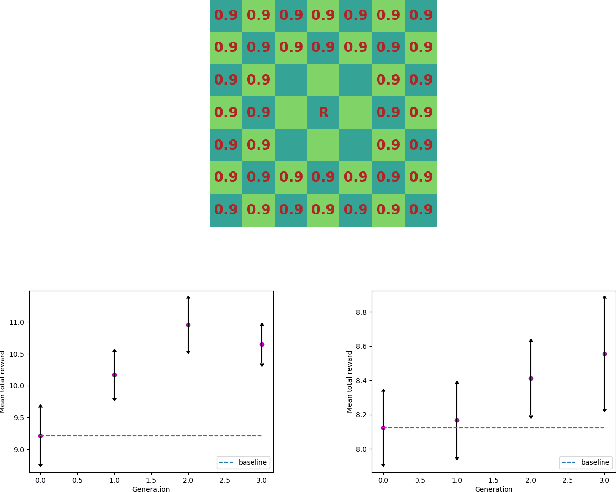

A key difficulty of cooperative decentralized planning lies in accurately predicting the decisions of other agents. In this paper we present a policy improvement operator for learning to plan in iterated cooperative multi-agent scenarios. At each application of our method, a selected agent learns approximations of its teammates' policies from data gathered in past simulations. Under the assumption of ideal function approximation, successive iterations of our algorithm are guaranteed to improve the policies and eventually converge to a Nash equilibrium in a coordinate ascent manner. We combine the policy improvement operator with the decentralized Monte Carlo Tree Search (MCTS) planning method and demonstrate the algorithm on several scenarios in the spatial task allocation problem introduced by Claes et al. (2015). We show that deep learning, and convolutional neural networks in particular, can be employed efficiently to produce policy approximators that exploit the spatial features of the problem, and that the proposed algorithm improves on baseline planning performance in particularly challenging domain configurations.
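The coordinate ascent behaviour described in the abstract can be illustrated with a toy example. The sketch below is not the paper's algorithm: it replaces MCTS and learned teammate models with exact best responses in a small shared-payoff matrix game, which loosely corresponds to the "ideal function approximation" assumption. The payoff matrix `PAYOFF` and the function names `coordinate_ascent` and `is_nash` are hypothetical and chosen only for illustration.

```python
# Toy illustration only: two agents share one payoff table and take turns
# improving their own action while treating the teammate's action as fixed.
# PAYOFF[a0][a1] is the common reward (hypothetical values).
PAYOFF = [
    [1, 3, 0],
    [0, 4, 0],
    [0, 0, 5],
]


def values_for(payoff, actions, agent):
    """Payoffs available to `agent` if the teammate keeps its current action."""
    if agent == 0:
        return [row[actions[1]] for row in payoff]
    return list(payoff[actions[0]])


def coordinate_ascent(payoff, start=(0, 0), max_rounds=100):
    """Alternate strict best-response updates until no agent can improve."""
    actions = list(start)
    history = [payoff[actions[0]][actions[1]]]
    for _ in range(max_rounds):
        changed = False
        for agent in (0, 1):
            values = values_for(payoff, actions, agent)
            best = max(range(len(values)), key=values.__getitem__)
            # Switch only on strict improvement, so the shared payoff
            # never decreases from one update to the next.
            if values[best] > values[actions[agent]]:
                actions[agent] = best
                changed = True
                history.append(payoff[actions[0]][actions[1]])
        if not changed:
            break
    return tuple(actions), history


def is_nash(payoff, actions):
    """True if neither agent can gain by deviating unilaterally."""
    current = payoff[actions[0]][actions[1]]
    return all(
        current >= max(values_for(payoff, actions, agent)) for agent in (0, 1)
    )


if __name__ == "__main__":
    joint, history = coordinate_ascent(PAYOFF)
    print(joint, history, is_nash(PAYOFF, joint))
```

Starting from the joint action `(0, 0)`, the shared payoff rises step by step and the updates stop at `(1, 1)`, where neither agent can improve alone. That point is a Nash equilibrium with payoff 4 even though the joint action `(2, 2)` would pay 5, which shows that coordinate ascent of this kind guarantees an equilibrium but not necessarily the global optimum.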
