DDPG car-following model with real-world human driving experience in CARLA

Dec 29, 2021

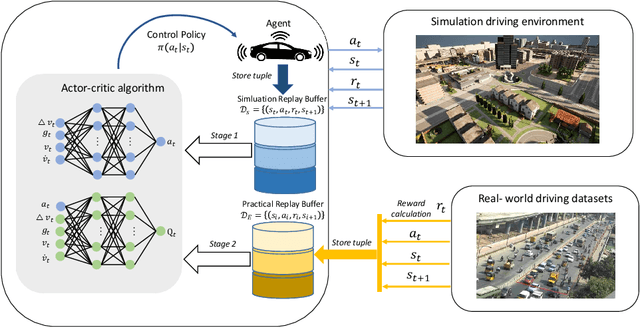

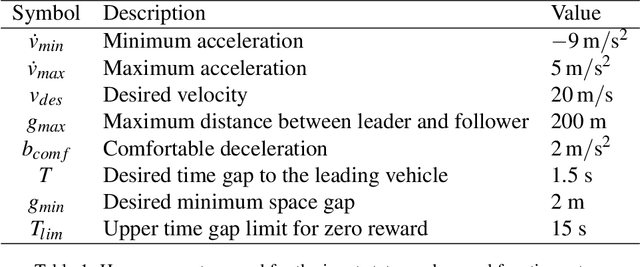

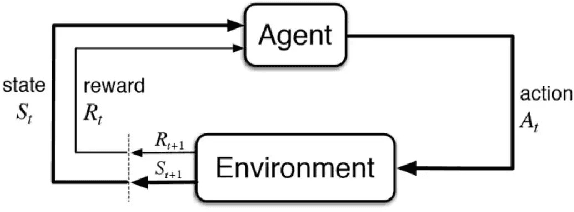

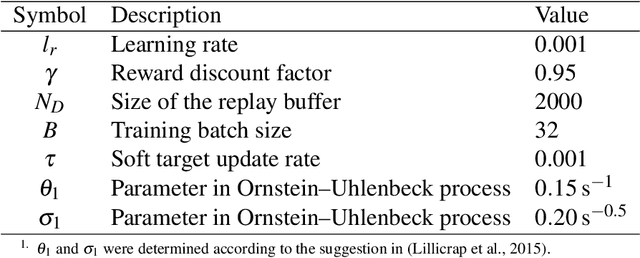

In autonomous driving, the fusion of human knowledge into Deep Reinforcement Learning (DRL) is often based on human demonstrations recorded in a simulated environment. This limits generalization and the feasibility of application in real-world traffic. We propose a two-stage DRL method that learns from real-world human driving to achieve performance superior to that of a pure DRL agent. The DRL agent is trained within a framework that combines CARLA with the Robot Operating System (ROS). For evaluation, we designed different real-world driving scenarios to compare the proposed two-stage DRL agent with the pure DRL agent. After extracting the 'good' behavior from the human driver, such as anticipation at a signalized intersection, the agent becomes more efficient and drives more safely, which makes it better adapted to Human-Robot Interaction (HRI) traffic.
