DCANet: Dense Context-Aware Network for Semantic Segmentation

Apr 06, 2021

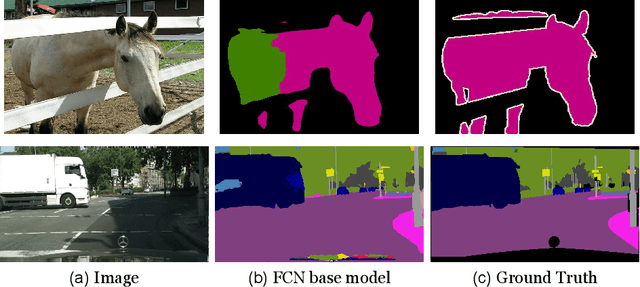

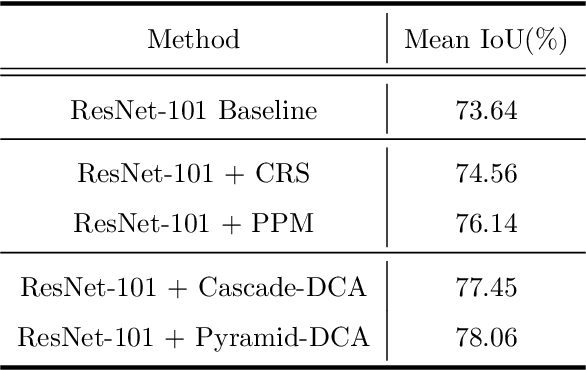

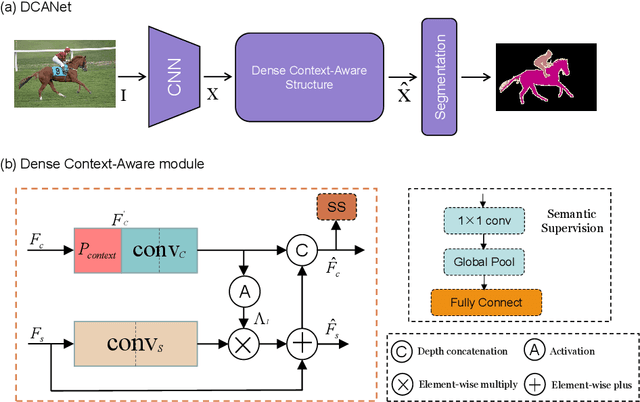

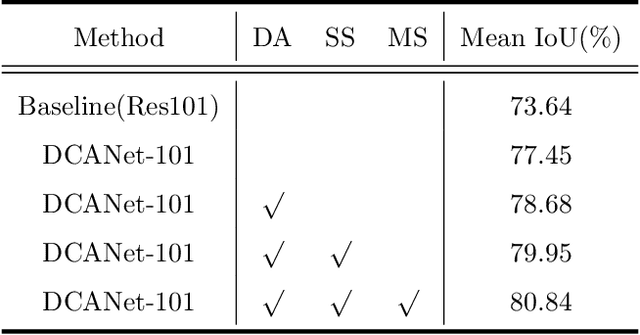

As context information proves increasingly valuable in semantic segmentation, learning to capture compact contextual relationships can help in understanding complex scenes. In contrast to previous work that relies on multi-scale context fusion, we propose a novel module, the Dense Context-Aware (DCA) module, that adaptively integrates local detail information with global dependencies. Driven by contextual relationships, the DCA module aggregates context information more effectively and produces more powerful features. We further design two extended structures based on DCA modules to capture long-range contextual dependencies. By combining DCA modules in cascade or in parallel, our networks progressively improve multi-scale feature representations for robust segmentation. We demonstrate the promising performance of our approach (DCANet) through extensive experiments on three challenging datasets: PASCAL VOC 2012, Cityscapes, and ADE20K.
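The abstract names two ways of combining modules, in cascade and in parallel, without giving their internals. The following is a minimal sketch of that composition pattern only, under stated assumptions: `toy_context_block` is a hypothetical stand-in that blends each local value with the global mean of the map (it is not the paper's DCA module), and fusing parallel branches by averaging is likewise an assumption made for illustration.

```python
from typing import Callable, List

# Single-channel H x W feature map, used here purely for illustration.
FeatureMap = List[List[float]]
Block = Callable[[FeatureMap], FeatureMap]


def toy_context_block(x: FeatureMap) -> FeatureMap:
    """Hypothetical stand-in for a context module (NOT the paper's DCA module).

    Blends every local value with the global mean of the map, so each output
    position carries both local detail and a global summary.
    """
    total = sum(sum(row) for row in x)
    count = sum(len(row) for row in x)
    global_mean = total / count
    return [[0.5 * v + 0.5 * global_mean for v in row] for row in x]


def cascade(blocks: List[Block], x: FeatureMap) -> FeatureMap:
    """Apply blocks one after another: each block refines the previous output."""
    for block in blocks:
        x = block(x)
    return x


def parallel(blocks: List[Block], x: FeatureMap) -> FeatureMap:
    """Apply every block to the same input, then fuse the branch outputs.

    Averaging is an assumed fusion rule chosen for simplicity.
    """
    outputs = [block(x) for block in blocks]
    n = len(outputs)
    height, width = len(x), len(x[0])
    return [
        [sum(out[i][j] for out in outputs) / n for j in range(width)]
        for i in range(height)
    ]


features = [[0.0, 4.0]]
cascaded = cascade([toy_context_block, toy_context_block], features)
fused = parallel([toy_context_block, toy_context_block], features)
```

In the cascade, the second block sees features already mixed with global context, so the values move progressively closer to the global summary; in the parallel form, every branch sees the original input and only the fusion step combines them.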
