Data generation using simulation technology to improve perception mechanism of autonomous vehicles

Paper and Code

Jul 01, 2022

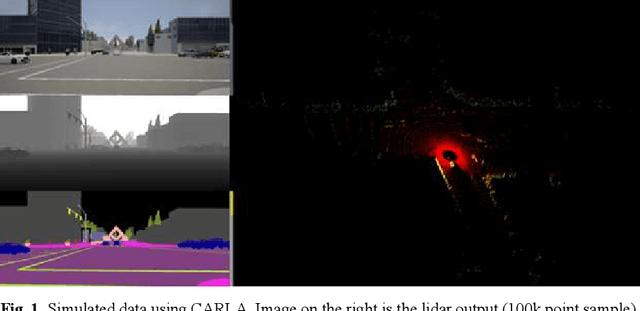

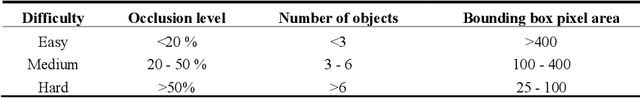

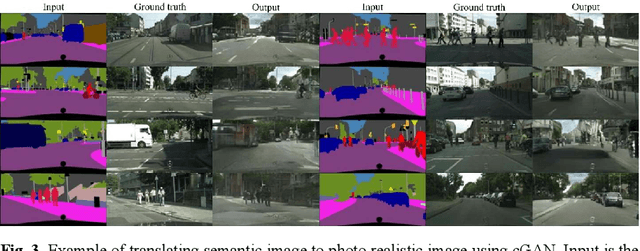

Recent advances in computer graphics allow more realistic rendering of driving environments. They have enabled self-driving car simulators such as DeepGTA-V and CARLA (Car Learning to Act) to generate large amounts of synthetic data that can complement existing real-world datasets for training autonomous car perception systems. Furthermore, because self-driving car simulators allow full control of the environment, they can generate dangerous driving scenarios that real-world datasets lack, such as bad weather and accidents. In this paper, we demonstrate the effectiveness of combining data gathered from the real world with data generated in simulation to train perception systems on object detection and localization tasks. We also propose a multi-level deep learning perception framework that aims to emulate human learning, in which a series of tasks in a given domain is learned in order from simple to more difficult. The autonomous car perception system can thus learn from easy driving scenarios first and then progress to more challenging ones customized in simulation software.
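The idea of mixing real and simulated data and presenting it in order of increasing difficulty can be illustrated with a minimal sketch. This is not the framework proposed in the paper: the `Sample` type, the integer `difficulty` labels, the sample names, and the `curriculum_stages` helper are all hypothetical, chosen only to show one way such an easy-to-hard schedule might be organized.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    source: str      # "real" or "sim" (hypothetical tag)
    difficulty: int  # hypothetical label: 0 = easy, larger = harder
    name: str        # placeholder identifier, not a real dataset entry


def curriculum_stages(real, sim, seed=0):
    """Pool real and simulated samples, then group them into stages
    of increasing difficulty. Samples within a stage are shuffled."""
    rng = random.Random(seed)
    pool = list(real) + list(sim)
    levels = sorted({s.difficulty for s in pool})
    stages = []
    for level in levels:
        stage = [s for s in pool if s.difficulty == level]
        rng.shuffle(stage)
        stages.append(stage)
    return stages


# Illustrative data only: the simulator supplies the hard cases
# (bad weather, accidents) that the real-world set lacks.
real = [
    Sample("real", 0, "clear_day_001"),
    Sample("real", 1, "night_004"),
]
sim = [
    Sample("sim", 0, "sim_clear_010"),
    Sample("sim", 2, "sim_heavy_rain_002"),
    Sample("sim", 2, "sim_collision_007"),
]

stages = curriculum_stages(real, sim)
for i, stage in enumerate(stages):
    print(f"stage {i}: {sorted(s.name for s in stage)}")
```

A training loop would then iterate over `stages` in order, training on each before moving to the next. How difficulty is assigned and how long each stage lasts are design choices the abstract does not specify.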
