Cyclist Trajectory Forecasts by Incorporation of Multi-View Video Information

Paper and Code

Jun 30, 2021

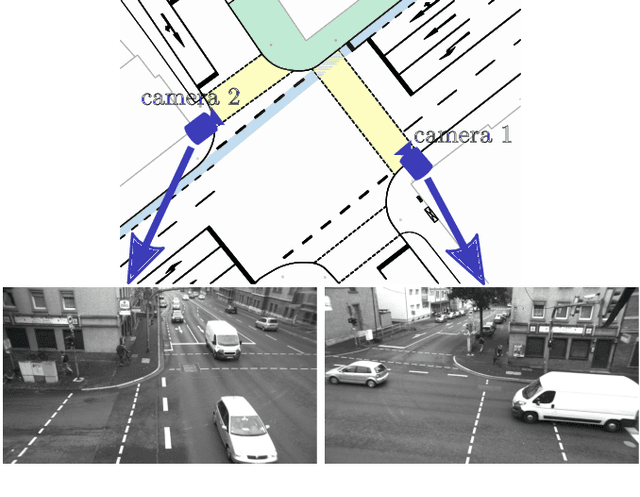

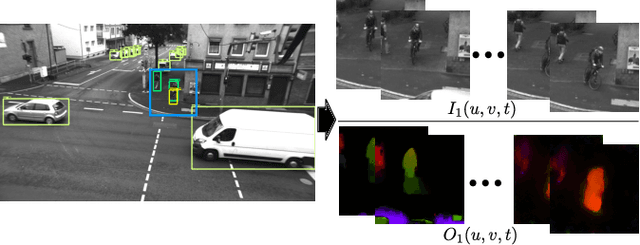

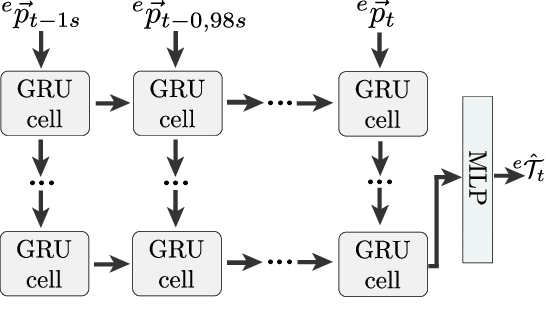

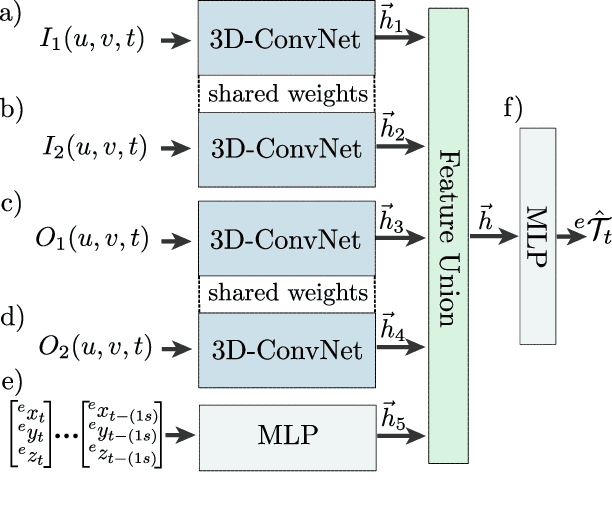

This article presents a novel approach for incorporating visual cues into the forecast of cyclist trajectories, using video data from a wide-angle stereo camera system mounted at an urban intersection. We extract features from image and optical flow (OF) sequences using 3D convolutional neural networks (3D-ConvNets) and combine them with features extracted from the cyclist's past trajectory to forecast future cyclist positions. With this additional information, we improve positional accuracy by about 7.5 % on our test dataset and by up to 22 % for specific motion types, compared to a method based solely on past trajectories. Furthermore, we compare image sequences and OF sequences as additional information and show that OF alone leads to significant improvements in positional accuracy. By training and testing our methods on a real-world dataset recorded at a heavily frequented public intersection, and by evaluating the methods' runtimes, we demonstrate their applicability in real traffic scenarios. Our code and parts of our dataset are made publicly available.
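To make the reported percentages concrete, the following minimal sketch shows one way a relative improvement in positional accuracy could be computed. It assumes that positional accuracy is measured as the mean Euclidean distance between forecast and ground-truth positions. The abstract does not state the exact metric, so this is an assumption. The function names `mean_position_error` and `relative_improvement` and all coordinates are hypothetical and chosen for illustration only; they are not taken from the paper or its code.

```python
import math


def mean_position_error(predicted, ground_truth):
    """Mean Euclidean distance between predicted and true (x, y) positions.

    Assumed metric: the source only speaks of "positional accuracy".
    """
    if not predicted or len(predicted) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    distances = [
        math.hypot(px - gx, py - gy)
        for (px, py), (gx, gy) in zip(predicted, ground_truth)
    ]
    return sum(distances) / len(distances)


def relative_improvement(baseline_error, method_error):
    """Percentage by which method_error is lower than baseline_error."""
    if baseline_error <= 0:
        raise ValueError("baseline_error must be positive")
    return 100.0 * (baseline_error - method_error) / baseline_error


# Illustrative, made-up positions in metres (not data from the paper).
ground_truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
trajectory_only = [(0.0, 0.4), (1.0, 0.4), (2.0, 0.4)]   # hypothetical baseline forecast
with_video_cues = [(0.0, 0.3), (1.0, 0.3), (2.0, 0.3)]   # hypothetical video-based forecast

baseline_error = mean_position_error(trajectory_only, ground_truth)
video_error = mean_position_error(with_video_cues, ground_truth)
improvement = relative_improvement(baseline_error, video_error)
print(f"baseline: {baseline_error:.2f} m, with video: {video_error:.2f} m, "
      f"improvement: {improvement:.1f} %")
```

Under this reading, an improvement of about 7.5 % would mean that the mean position error of the video-based method is roughly 7.5 % lower than that of the trajectory-only baseline.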
