CUBE360: Learning Cubic Field Representation for Monocular 360 Depth Estimation for Virtual Reality


Oct 08, 2024

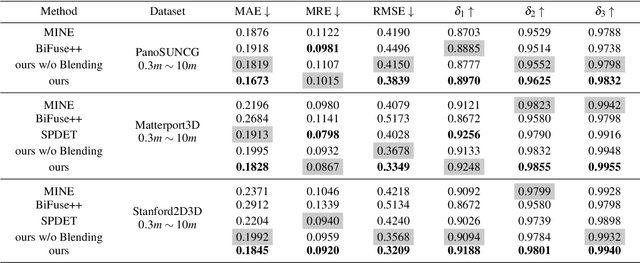

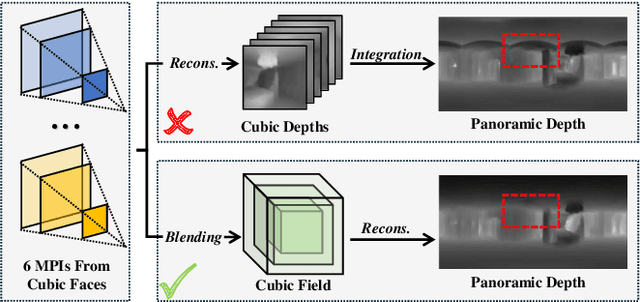

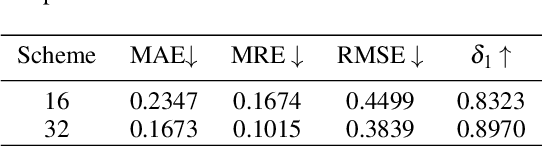

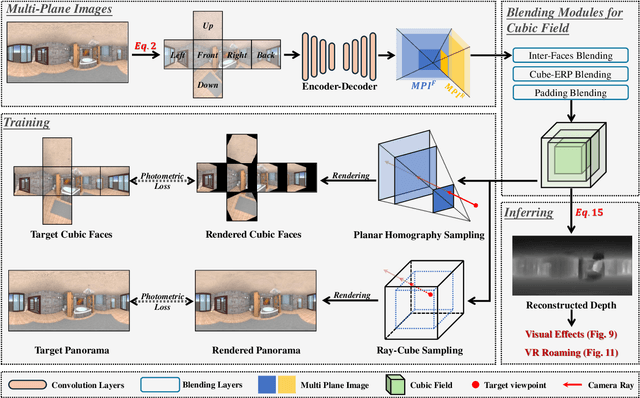

Panoramic images provide comprehensive scene information and are well suited to VR applications. Obtaining the corresponding depth maps is essential for immersive and interactive experiences. However, panoramic depth estimation is challenging because of the severe distortion caused by equirectangular projection (ERP) and the limited availability of panoramic RGB-D datasets. Inspired by the recent success of neural rendering, we propose a novel method, named $\mathbf{CUBE360}$, that learns a cubic field composed of multiple multi-plane images (MPIs) from a single panoramic image for $\mathbf{continuous}$ depth estimation in any view direction. CUBE360 uses cubemap projection to transform an ERP image into six faces and extracts an MPI for each face, which reduces the memory consumption of MPI processing on high-resolution data. This approach also avoids the computational complexity of handling the uneven pixel distribution inherent to equirectangular projection. An attention-based blending module then learns correlations among the MPIs of the cubic faces, constructing a cubic field representation with color and density information at various depth levels. Furthermore, a novel sampling strategy is introduced for rendering novel views from the cubic field at both cubic and planar scales. The entire pipeline is trained with a photometric loss computed on rendered views in a self-supervised manner, which enables training on 360 videos without depth annotations. Experiments on both synthetic and real-world datasets demonstrate the superior performance of CUBE360 compared to prior self-supervised learning (SSL) methods. We also highlight its effectiveness in downstream applications, such as VR roaming and visual effects, underscoring CUBE360's potential to enhance immersive experiences.
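As a rough illustration of two ingredients named in the abstract, the NumPy sketch below resamples an ERP image onto one cube face and alpha-composites a single MPI into a color image and a depth map. It is not the authors' implementation. The function names, the axis convention (x right, y up, z forward), nearest-neighbour sampling, and the density-to-alpha conversion are all assumptions made for this example, and the attention-based blending and the cubic/planar sampling strategy are not modelled.

```python
import numpy as np


def face_directions(face: str, size: int) -> np.ndarray:
    """Unit ray directions of shape (size, size, 3) for one cube face.

    Assumed convention (not from the paper): x right, y up, z forward.
    """
    # Pixel centres mapped to [-1, 1] on the face plane.
    t = (np.arange(size) + 0.5) / size * 2.0 - 1.0
    u, v = np.meshgrid(t, -t)  # v is flipped so that row 0 is the top edge
    one = np.ones_like(u)
    axes = {
        "front": (u, v, one),
        "back": (-u, v, -one),
        "right": (one, v, -u),
        "left": (-one, v, u),
        "up": (u, one, -v),
        "down": (u, -one, v),
    }
    d = np.stack(axes[face], axis=-1)
    return d / np.linalg.norm(d, axis=-1, keepdims=True)


def sample_erp(erp: np.ndarray, dirs: np.ndarray) -> np.ndarray:
    """Nearest-neighbour lookup of an ERP image along the given directions."""
    h, w = erp.shape[:2]
    lon = np.arctan2(dirs[..., 0], dirs[..., 2])          # 0 at the front
    lat = np.arcsin(np.clip(dirs[..., 1], -1.0, 1.0))     # +pi/2 at the top
    col = ((lon / (2.0 * np.pi) + 0.5) * w).astype(int) % w
    row = np.clip(((0.5 - lat / np.pi) * h).astype(int), 0, h - 1)
    return erp[row, col]


def composite_mpi(rgb: np.ndarray, sigma: np.ndarray, depths: np.ndarray):
    """Front-to-back compositing of one MPI.

    rgb: (D, H, W, 3), sigma: (D, H, W) densities, depths: (D,) near to far.
    Returns the rendered color (H, W, 3) and the weighted depth (H, W).
    """
    # Spacing between planes; the last plane reuses the previous spacing.
    delta = np.diff(depths, append=2.0 * depths[-1] - depths[-2])
    # Assumed density-to-opacity conversion, as in volume rendering.
    alpha = 1.0 - np.exp(-sigma * delta[:, None, None])
    # Transmittance: fraction of light reaching each plane from the camera.
    ones = np.ones_like(alpha[:1])
    trans = np.cumprod(np.concatenate([ones, 1.0 - alpha[:-1]]), axis=0)
    weights = trans * alpha
    color = (weights[..., None] * rgb).sum(axis=0)
    depth = (weights * depths[:, None, None]).sum(axis=0)
    return color, depth


def photometric_l1(rendered: np.ndarray, target: np.ndarray) -> float:
    """Mean absolute color difference between a rendered and a real view."""
    return float(np.abs(rendered - target).mean())
```

In a self-supervised setup of this kind, `photometric_l1` would compare a view rendered from the field against a neighbouring video frame, so no depth labels are needed. The weighted depth returned by `composite_mpi` is not normalised by the total weight, so it underestimates depth wherever a ray is not fully absorbed.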
