CReaM: Condensed Real-time Models for Depth Prediction using Convolutional Neural Networks

Paper and Code

Jul 24, 2018

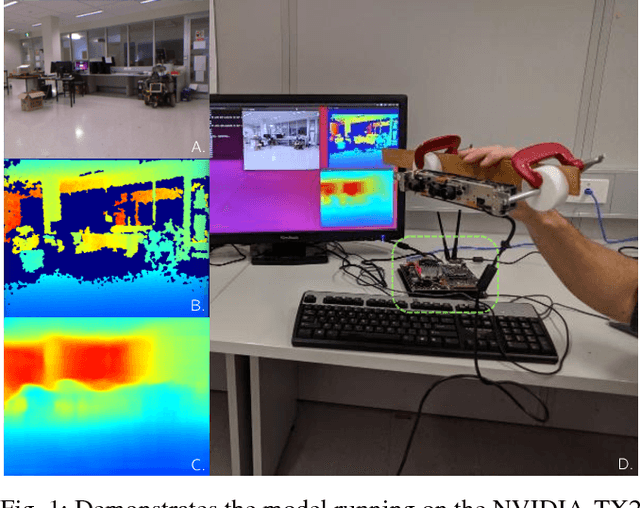

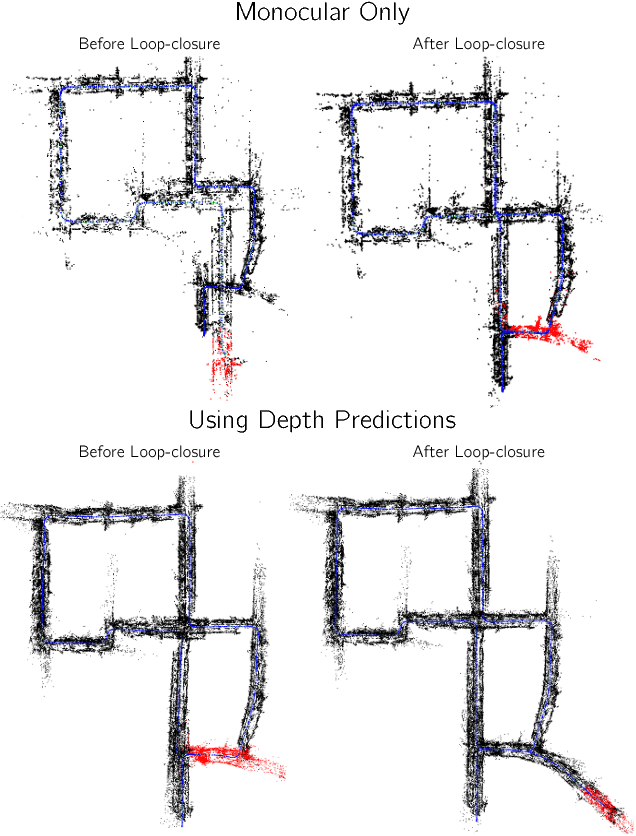

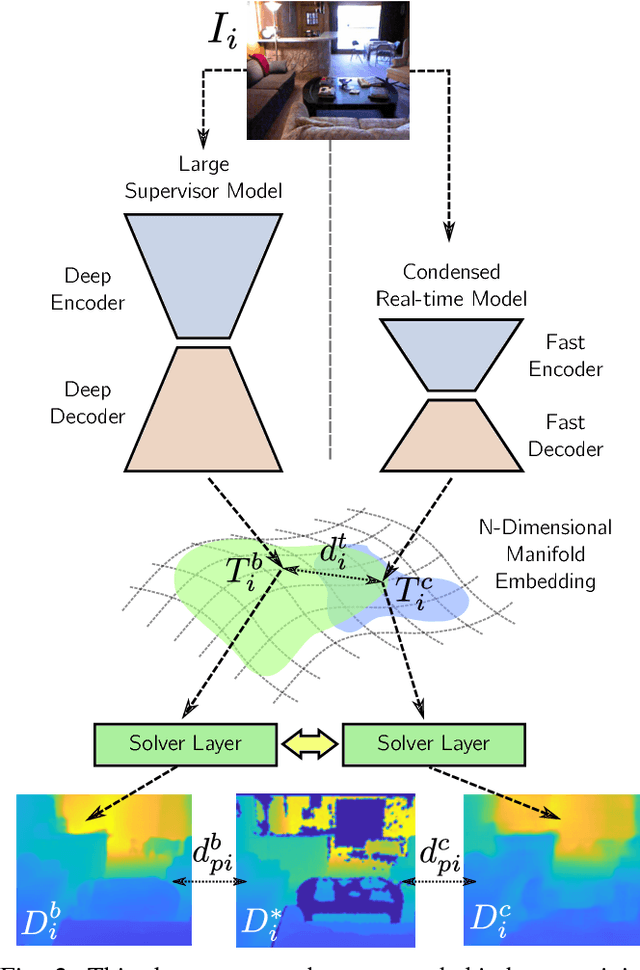

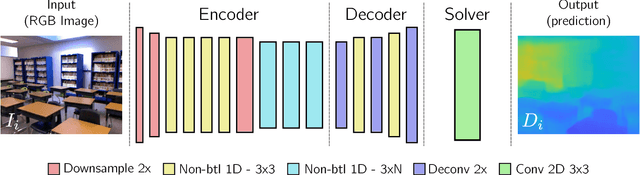

Since the resurgence of CNNs the robotic vision community has developed a range of algorithms that perform classification, semantic segmentation and structure prediction (depths, normals, surface curvature) using neural networks. While some of these models achieve state-of-the art results and super human level performance, deploying these models in a time critical robotic environment remains an ongoing challenge. Real-time frameworks are of paramount importance to build a robotic society where humans and robots integrate seamlessly. To this end, we present a novel real-time structure prediction framework that predicts depth at 30fps on an NVIDIA-TX2. At the time of writing, this is the first piece of work to showcase such a capability on a mobile platform. We also demonstrate with extensive experiments that neural networks with very large model capacities can be leveraged in order to train accurate condensed model architectures in a "from teacher to student" style knowledge transfer.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge