CPFed: Communication-Efficient and Privacy-Preserving Federated Learning

Mar 30, 2020

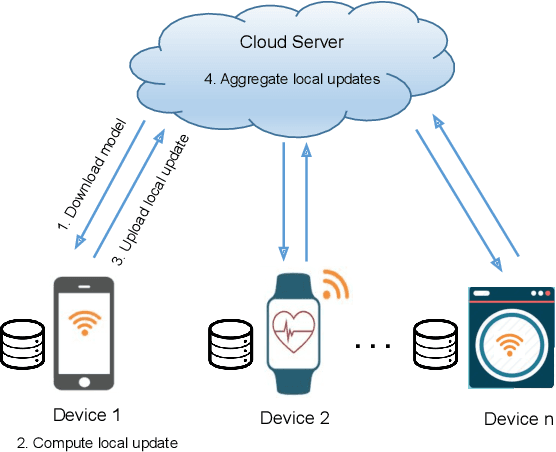

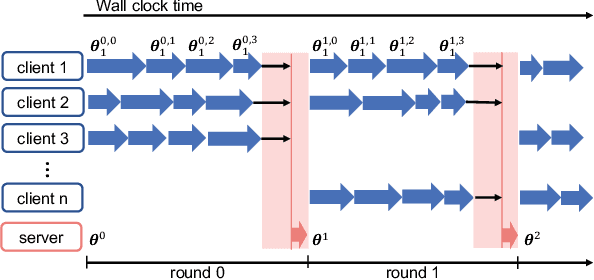

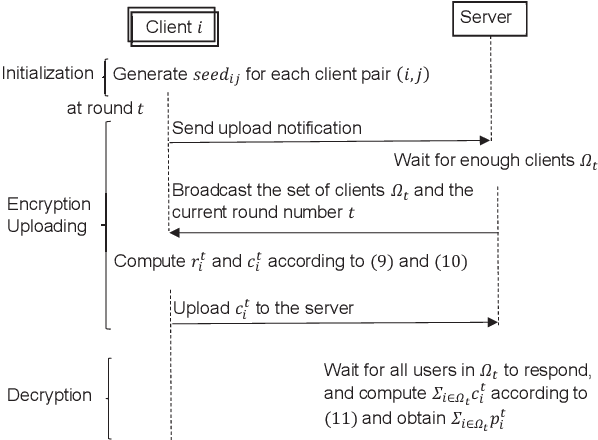

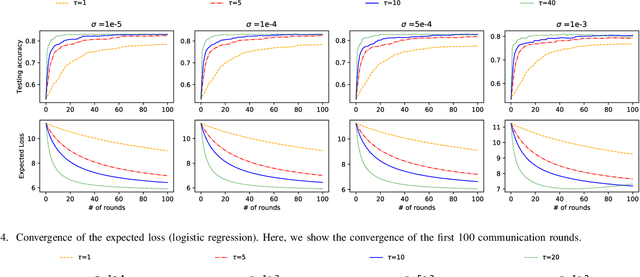

Federated learning is a machine learning setting in which a set of edge devices iteratively trains a model under the orchestration of a central server while all data stays on the edge devices. In each iteration, the edge devices compute on their local data and upload the results to the server, which uses them to update the model. This extensive exchange of information between edge devices and the server raises two challenges: privacy leakage and communication overhead. In this paper, we develop CPFed, a communication-efficient and privacy-preserving federated learning method, to address both challenges. CPFed integrates three key components: (1) periodic averaging, where local computation results at edge devices are averaged at the server only periodically; (2) the Gaussian mechanism, where edge devices randomly perturb their local computation results before sending them to the server; and (3) secure aggregation, where the perturbed local computation results are homomorphically encrypted before being sent to the server. Together, these components let CPFed reduce communication and limit privacy leakage while achieving high model accuracy. We provide an end-to-end privacy guarantee for CPFed and analyze its theoretical convergence rates for both convex and non-convex models. Through extensive numerical experiments on real-world datasets, we demonstrate the effectiveness and efficiency of the proposed method.
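To make the interplay of the components concrete, the following is a minimal sketch, not the authors' implementation. It assumes a toy one-dimensional least-squares model, and every name and parameter in it (`cpfed_round`, `tau`, `clip_bound`, `sigma`, and so on) is hypothetical. Each device runs `tau` local gradient steps between communications (periodic averaging), then clips its model update and adds Gaussian noise before upload (Gaussian mechanism). Homomorphic encryption is not implemented here; the sketch only mirrors its effect by having the server use nothing but the sum of the uploads. The noise scale is an arbitrary illustrative value and is not calibrated to any privacy budget.

```python
import random


def local_sgd(w, data, lr, tau):
    """Run tau local SGD steps on the loss 0.5 * (w * x - y) ** 2."""
    for _ in range(tau):
        x, y = random.choice(data)
        grad = (w * x - y) * x
        w -= lr * grad
    return w


def clip(value, bound):
    """Clamp a scalar update to [-bound, bound] to bound its sensitivity."""
    return max(-bound, min(bound, value))


def cpfed_round(w_global, device_data, lr=0.05, tau=5, clip_bound=1.0, sigma=0.01):
    """One communication round: local steps, perturbation, server averaging."""
    uploads = []
    for data in device_data:
        # Periodic averaging: tau local steps before talking to the server.
        w_local = local_sgd(w_global, data, lr, tau)
        # Gaussian mechanism: clip the update, then add zero-mean Gaussian noise.
        # sigma is illustrative only, not derived from an (epsilon, delta) target.
        delta = clip(w_local - w_global, clip_bound)
        uploads.append(delta + random.gauss(0.0, sigma))
    # Stand-in for secure aggregation: the server uses only the sum of uploads.
    # A real system would encrypt each upload so individual values stay hidden.
    total = sum(uploads)
    return w_global + total / len(uploads)


def make_devices(num_devices=4, samples=20, true_w=2.0, seed=1):
    """Create synthetic per-device datasets with y = true_w * x (assumed toy data)."""
    rng = random.Random(seed)
    devices = []
    for _ in range(num_devices):
        xs = [rng.uniform(-1.0, 1.0) for _ in range(samples)]
        devices.append([(x, true_w * x) for x in xs])
    return devices


def train(device_data, rounds=200, seed=0, **kwargs):
    """Run several communication rounds starting from w = 0."""
    random.seed(seed)
    w = 0.0
    for _ in range(rounds):
        w = cpfed_round(w, device_data, **kwargs)
    return w


if __name__ == "__main__":
    print(train(make_devices()))
```

In this sketch, raising `tau` lowers the number of communication rounds needed for the same amount of local computation, while raising `sigma` strengthens the perturbation at the cost of a noisier final model.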
