Coupling weak and strong supervision for classification of prostate cancer histopathology images


Nov 16, 2018

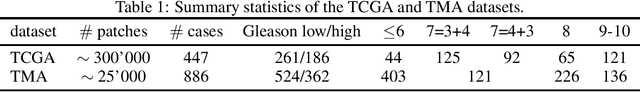

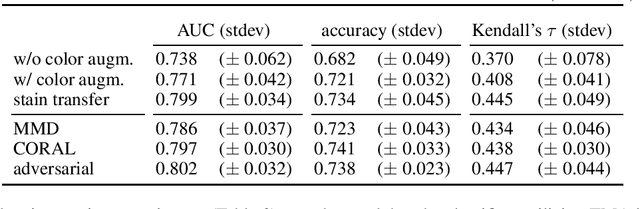

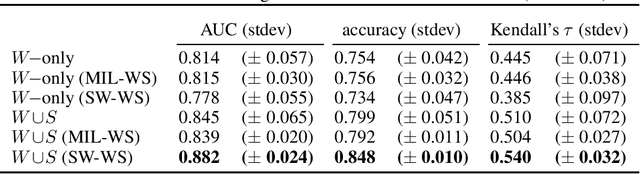

Automated grading of prostate cancer histopathology images is challenging, in large part because annotations at the level of regions of interest (strong labels) are scarce: the Gleason score is typically known only for entire tissue slides (weak labels). In this study, we focus on automated Gleason score assignment for prostate cancer whole-slide images using a large weakly labeled dataset and a smaller strongly labeled one. We efficiently leverage information from both label sources by jointly training a classifier on the two datasets and by introducing a gradient update scheme that assigns a different relative importance to each training example, as a means of self-controlling the weak supervision signal. Our approach achieves superior performance when compared with standard Gleason scoring methods.
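The idea of giving each training example its own importance in the gradient update can be illustrated with a minimal sketch. The abstract does not specify the weighting rule, so everything below is an assumption made for illustration: a binary logistic classifier stands in for the actual model, strongly labeled examples get weight 1, and weakly labeled examples are weighted by how much the current prediction agrees with their label. The names `example_weight` and `weighted_step` are hypothetical and are not taken from the paper.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def example_weight(p, y, is_strong):
    # ASSUMPTION (not from the paper): strong labels always get full weight;
    # a weak label is weighted by the model's current agreement with it.
    if is_strong:
        return 1.0
    return p if y == 1 else 1.0 - p


def weighted_step(w, b, batch, lr=0.1):
    """One gradient step of logistic regression on a mixed batch.

    batch: list of (features, label, is_strong) tuples, label in {0, 1}.
    Each example's gradient is scaled by its importance weight, and the
    summed gradient is normalized by the total weight.
    """
    grad_w = [0.0] * len(w)
    grad_b = 0.0
    total = 0.0
    for x, y, is_strong in batch:
        p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
        a = example_weight(p, y, is_strong)  # treated as a constant
        err = a * (p - y)                    # weighted cross-entropy gradient
        for j, xj in enumerate(x):
            grad_w[j] += err * xj
        grad_b += err
        total += a
    norm = max(total, 1e-12)
    new_w = [wi - lr * g / norm for wi, g in zip(w, grad_w)]
    new_b = b - lr * grad_b / norm
    return new_w, new_b


# One strongly labeled and one weakly labeled positive example.
batch = [([1.0, 0.0], 1, True), ([0.0, 1.0], 1, False)]
new_w, new_b = weighted_step([0.0, 0.0], 0.0, batch)
```

In this toy batch, the weakly labeled example moves its parameter less than the strongly labeled one, because the untrained model is only half confident in the weak label. The scheme in the paper may differ in how the weights are computed and normalized.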
