Counterfactual Supervision-based Information Bottleneck for Out-of-Distribution Generalization

Aug 16, 2022

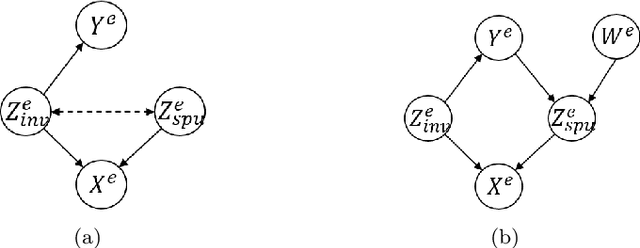

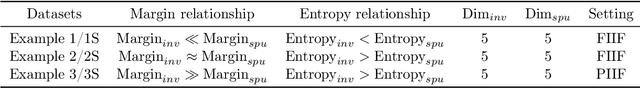

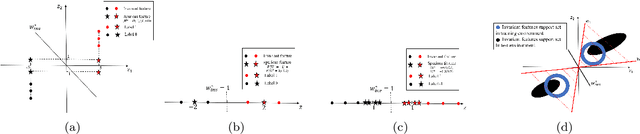

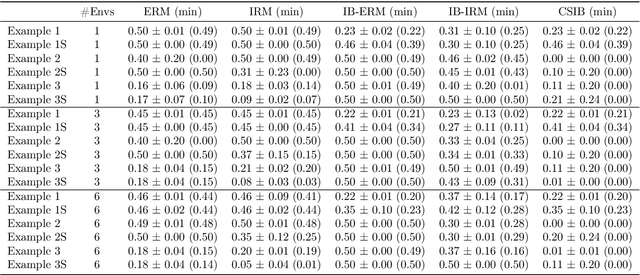

Learning invariant (causal) features for out-of-distribution (OOD) generalization has attracted extensive attention recently, and among the proposals, invariant risk minimization (IRM) (Arjovsky et al., 2019) is a notable solution. Despite its theoretical promise for linear regression, challenges remain in applying IRM to linear classification problems (Rosenfeld et al., 2020; Nagarajan et al., 2021). Along this line, a recent study (Ahuja et al., 2021) took a first step by proposing the learning principle of information bottleneck based invariant risk minimization (IB-IRM). In this paper, we first show that the key assumption of support overlap of invariant features used in (Ahuja et al., 2021) is rather strong for guaranteeing OOD generalization, and that it is still possible to achieve the optimal solution without this assumption. To further answer the question of whether IB-IRM is sufficient for learning invariant features in linear classification problems, we show that IB-IRM still fails in two cases, regardless of whether the invariant features capture all information about the label. To address these failures, we propose a Counterfactual Supervision-based Information Bottleneck (CSIB) learning algorithm that provably recovers the invariant features. The proposed algorithm works even with access to data from a single environment, and has theoretically consistent results for both binary and multi-class problems. We present empirical experiments on three synthetic datasets that verify the efficacy of the proposed method.
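
For readers unfamiliar with the objectives named in the abstract, the following is a minimal sketch of an IRMv1-style penalty combined with a variance-based information bottleneck term, written for a linear predictor. It is an illustration only and not the CSIB algorithm: the squared loss, the scalar representation `x @ theta`, the use of feature variance as the bottleneck term, and the names `ib_irm_objective`, `make_envs`, `lam`, and `gamma` are all assumptions chosen for simplicity (the paper itself concerns classification).

```python
import numpy as np


def ib_irm_objective(theta, envs, lam=1.0, gamma=0.1):
    """Illustrative IB-IRM-style objective for a linear predictor.

    envs: list of (x, y) pairs, one per training environment.
    lam, gamma: hypothetical weights for the invariance and bottleneck terms.
    """
    total = 0.0
    for x, y in envs:
        f = x @ theta                        # scalar representation / prediction
        risk = np.mean((f - y) ** 2)         # per-environment squared-error risk
        # Gradient of mean((w * f - y)^2) w.r.t. a dummy scale w, evaluated at w = 1.
        grad_w = np.mean(2.0 * (f - y) * f)
        ib_term = np.var(f)                  # variance as a stand-in bottleneck term
        total += risk + lam * grad_w ** 2 + gamma * ib_term
    return total / len(envs)


def make_envs(scales=(0.5, 2.0), n=200, seed=0):
    """Toy data (assumed): y equals an invariant feature; a second feature
    correlates with y with a strength that differs across environments."""
    rng = np.random.default_rng(seed)
    envs = []
    for s in scales:
        x_inv = rng.normal(size=n)
        y = x_inv.copy()
        x_spu = s * y + rng.normal(size=n)
        envs.append((np.column_stack([x_inv, x_spu]), y))
    return envs


envs = make_envs()
invariant = ib_irm_objective(np.array([1.0, 0.0]), envs, gamma=0.0)
spurious = ib_irm_objective(np.array([0.0, 1.0]), envs, gamma=0.0)
```

In this toy setup, the predictor that uses only the invariant feature incurs no risk or invariance penalty, while the predictor that uses only the environment-dependent feature is penalized. The failure cases and the counterfactual supervision step discussed in the abstract are not reproduced here.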
