Convolutional Neural Networks Demystified: A Matched Filtering Perspective Based Tutorial


Aug 26, 2021

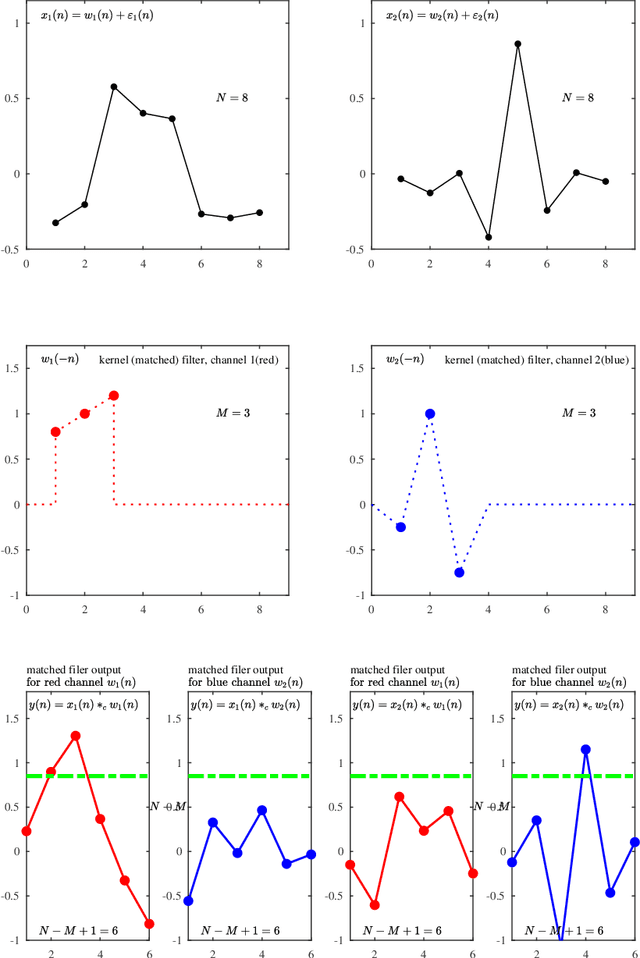

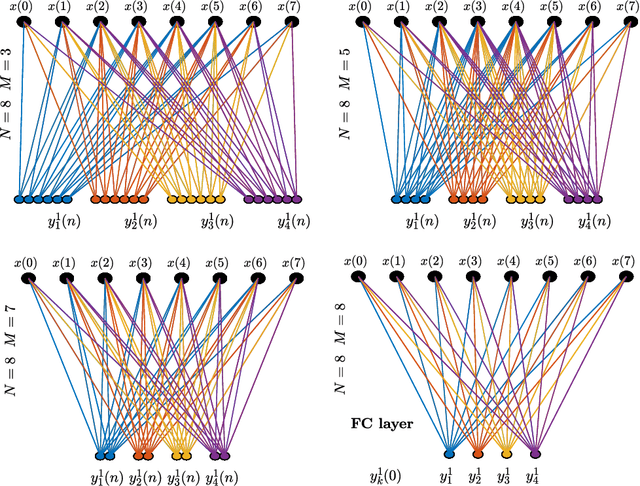

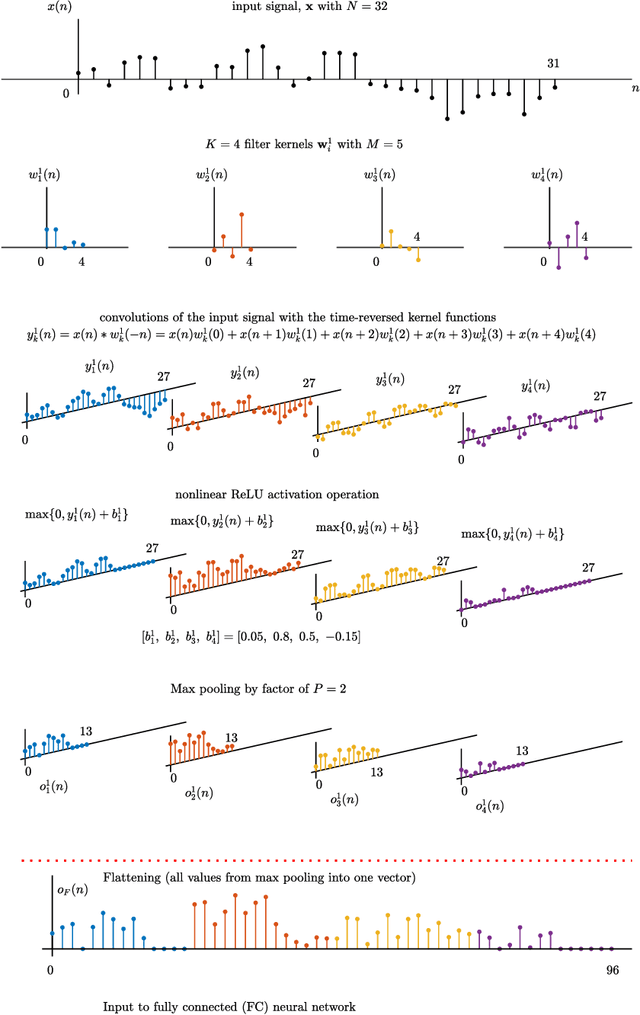

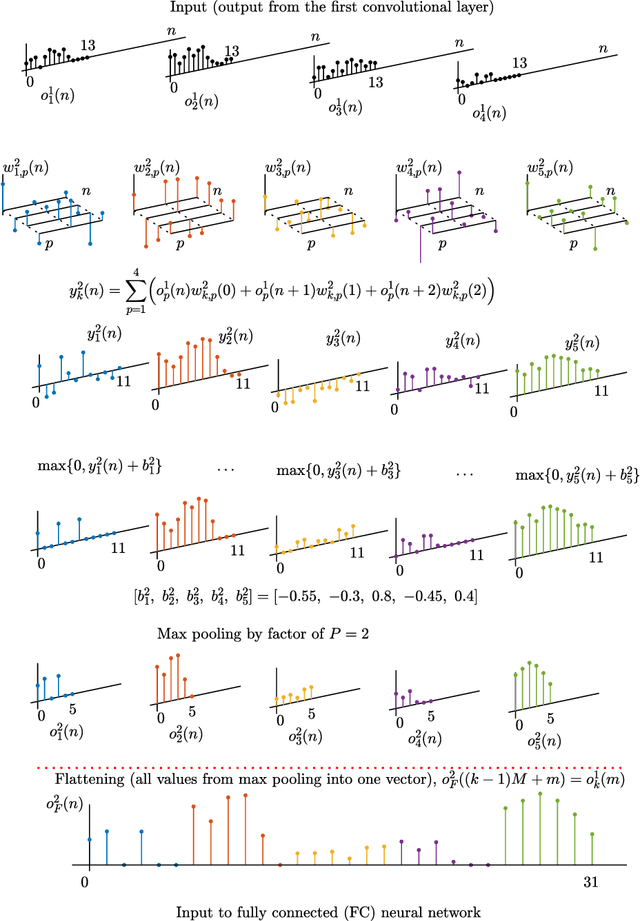

Deep Neural Networks (DNNs), and especially Convolutional Neural Networks (CNNs), are a de facto standard for the analysis of large volumes of signals and images. Yet their development and underlying principles have largely been approached in an ad hoc, black-box fashion. To help demystify CNNs, we revisit their operation from first principles and from a matched filtering perspective. We establish that the convolution operation within CNNs, their very backbone, represents a matched filter which examines the input signal/image for the presence of pre-defined features. This perspective is shown to be physically meaningful and serves as the basis for a step-by-step tutorial on the operation of CNNs, including pooling, zero padding, and various ways of dimensionality reduction. Starting from first principles, both the feed-forward pass and the learning stage (via back-propagation) are illuminated in detail, through both a worked-out numerical example and the corresponding visualizations. It is our hope that this tutorial will help shed new light on, and provide physical intuition for, the understanding and further development of deep neural networks.
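A minimal sketch of the matched-filtering view, written as our own illustration rather than the authors' code: a CNN "convolution" layer computes a sliding dot product (strictly, a cross-correlation, with no kernel flip) between the input and a kernel, so its output is largest where the input best matches the kernel, which is how a matched filter detects a known feature. The template, the signal, and the helper names `cross_correlate` and `max_pool` are hypothetical; the example is 1-D, stride 1, and noise-free for clarity.

```python
def cross_correlate(signal, kernel, pad=0):
    """Slide `kernel` across `signal` (after adding `pad` zeros on each side)
    and return the dot product at every shift, which is what a CNN
    'convolution' layer computes (no kernel flip, stride 1)."""
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    k = len(kernel)
    return [
        sum(padded[i + j] * kernel[j] for j in range(k))
        for i in range(len(padded) - k + 1)
    ]


def max_pool(x, size=2):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, size)]


# Hypothetical feature (template) embedded in a noise-free signal at index 5.
template = [1.0, -1.0, 1.0]
signal = [0.0] * 12
for j, v in enumerate(template):
    signal[5 + j] = v

# 'Valid' output (no padding): the response peaks where the template aligns,
# and the peak equals the template energy sum(t * t) = 3.
response = cross_correlate(signal, template)
best = max(range(len(response)), key=response.__getitem__)
print(best, response[best])  # 5 3.0

# Zero padding of 1 keeps the output length equal to the input length
# ('same' padding); the peak moves to the index of the template's center.
same = cross_correlate(signal, template, pad=1)
pooled = max_pool(same, size=2)
print(same.index(max(same)), pooled)  # 6 [0.0, 0.0, 1.0, 3.0, 1.0, 0.0]
```

Zero padding here only preserves the output length, and 2x max pooling halves it while keeping the strongest match, which is one simple form of the dimensionality reduction the tutorial discusses.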
