Convolutional neural network for breathing phase detection in lung sounds

Mar 25, 2019

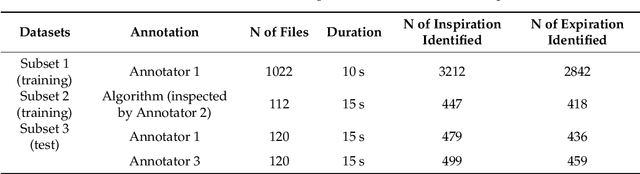

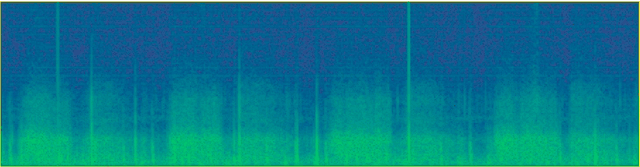

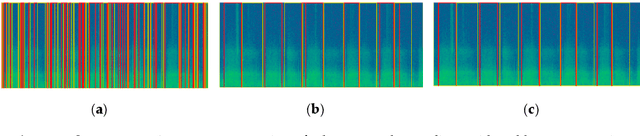

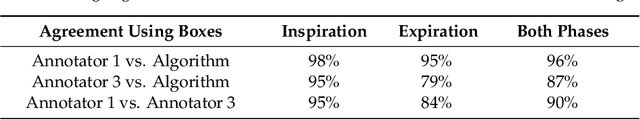

We applied deep learning to create an algorithm for breathing phase detection in lung sound recordings, and we compared the breathing phases detected by the algorithm with those manually annotated by two experienced lung sound researchers. Our algorithm uses a convolutional neural network with spectrograms as the features, removing the need to specify features explicitly. We trained and evaluated the algorithm using three subsets that are larger than previously seen in the literature. We evaluated the performance of the algorithm with two measures. First, a discrete count of agreed breathing phases (requiring at least 50% overlap between a pair of boxes) shows a mean agreement with the lung sound experts of 97% for inspiration and 87% for expiration. Second, the fraction of time in agreement (in seconds) gives higher pseudo-kappa values for inspiration (0.73-0.88) than for expiration (0.63-0.84), with an average sensitivity of 97% and an average specificity of 84%. Under both evaluation measures, the agreement between the annotators and the algorithm indicates human-level performance. We conclude that the developed algorithm is valid for detecting breathing phases in lung sound recordings.
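
The two evaluation measures described above can be illustrated with a minimal sketch. This is not the authors' code: the function names, the 0.1 s time grid, and the definition of overlap (intersection divided by the duration of the reference annotation) are assumptions made for illustration, since the abstract does not specify them. The pseudo-kappa statistic is not reproduced here because its definition is not given in the abstract.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # (start_s, end_s) of one annotated breathing phase


def overlap_fraction(ref: Interval, pred: Interval) -> float:
    """Intersection length divided by the reference duration (assumed definition)."""
    inter = max(0.0, min(ref[1], pred[1]) - max(ref[0], pred[0]))
    ref_len = ref[1] - ref[0]
    return inter / ref_len if ref_len > 0 else 0.0


def count_agreed(reference: List[Interval], predicted: List[Interval],
                 threshold: float = 0.5) -> int:
    """Count reference phases matched by at least one predicted phase."""
    return sum(
        any(overlap_fraction(r, p) >= threshold for p in predicted)
        for r in reference
    )


def to_mask(intervals: List[Interval], duration: float, step: float = 0.1) -> List[bool]:
    """Mark each time step of the recording that falls inside any interval."""
    n = int(round(duration / step))
    mask = [False] * n
    for start, end in intervals:
        first = max(0, int(round(start / step)))
        last = min(n, int(round(end / step)))
        for i in range(first, last):
            mask[i] = True
    return mask


def sensitivity_specificity(ref_mask: List[bool],
                            pred_mask: List[bool]) -> Tuple[float, float]:
    """Time-based agreement, treating the reference annotation as ground truth."""
    tp = sum(r and p for r, p in zip(ref_mask, pred_mask))
    tn = sum((not r) and (not p) for r, p in zip(ref_mask, pred_mask))
    fn = sum(r and (not p) for r, p in zip(ref_mask, pred_mask))
    fp = sum((not r) and p for r, p in zip(ref_mask, pred_mask))
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    return sensitivity, specificity


# Hypothetical annotations for a 4-second recording (made-up values).
reference = [(0.0, 1.0), (2.0, 3.0)]
predicted = [(0.2, 1.1), (2.8, 3.5)]

agreed = count_agreed(reference, predicted)
sens, spec = sensitivity_specificity(
    to_mask(reference, duration=4.0), to_mask(predicted, duration=4.0)
)
```

In this toy example only the first reference phase meets the 50% threshold, which shows how the count-based measure can differ from the time-based one: the second predicted phase still contributes a small amount of agreed time even though it does not count as a matched phase.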
