Contrastive Spatial Reasoning on Multi-View Line Drawings


Apr 27, 2021

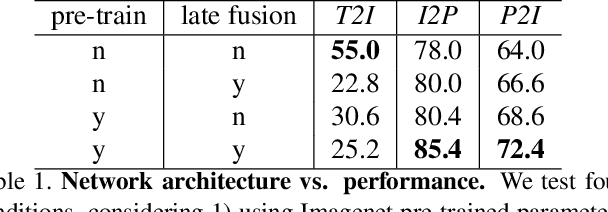

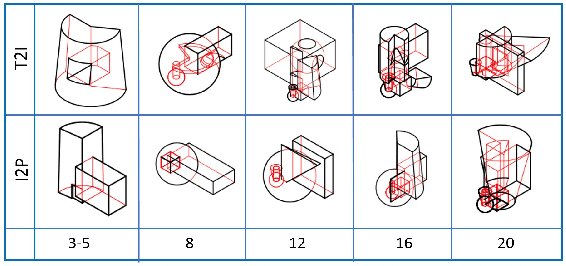

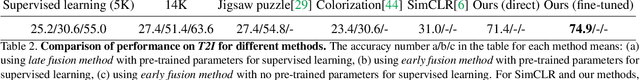

State-of-the-art supervised deep networks were recently shown to perform puzzlingly poorly at spatial reasoning on multi-view line drawings in the SPARE3D dataset. To study the reasons behind this low performance and to further our understanding of these tasks, we design controlled experiments on both the input data and the network designs. Guided by the insights from these experimental results, we propose a simple contrastive learning approach, along with other network modifications, to improve the baseline performance. Our approach uses a self-supervised binary classification network to compare the line drawing differences between various views of any two similar 3D objects. It enables deep networks to effectively learn detail-sensitive yet view-invariant line drawing representations of 3D objects. Experiments show that our method can significantly increase the baseline performance on SPARE3D, while some popular self-supervised learning methods cannot.
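One way to picture the self-supervised binary classification setup is as a pair-construction step: views of two similar objects are paired, and each pair is labeled by whether both views come from the same object. The sketch below is only an illustration under that assumption; the abstract does not specify the pairing scheme, and the function name `make_contrastive_pairs`, the string view identifiers, and the labeling convention (1 for same object, 0 for different objects) are hypothetical.

```python
import itertools
import random


def make_contrastive_pairs(views_a, views_b, seed=0):
    """Build labeled view pairs from two similar 3D objects (illustrative only).

    views_a, views_b: lists of line-drawing views of objects A and B
    (any Python objects, e.g. image arrays or file names).
    Returns a shuffled list of ((view_1, view_2), label) tuples, where
    label 1 means "same object" and label 0 means "different objects".
    This labeling is an assumption, not the paper's confirmed scheme.
    """
    pairs = []
    # Positive pairs: two different views of the same object.
    for views in (views_a, views_b):
        for v1, v2 in itertools.combinations(views, 2):
            pairs.append(((v1, v2), 1))
    # Negative pairs: one view from each of the two similar objects.
    for v1, v2 in itertools.product(views_a, views_b):
        pairs.append(((v1, v2), 0))
    # Shuffle deterministically so labels are not ordered.
    random.Random(seed).shuffle(pairs)
    return pairs


# Hypothetical view identifiers standing in for rendered line drawings.
views_a = ["A_front", "A_top", "A_right"]
views_b = ["B_front", "B_top", "B_right"]
pairs = make_contrastive_pairs(views_a, views_b)
```

A binary classifier trained on such pairs has to attend to small differences in detail between the two similar objects while ignoring the change in viewpoint, which is the kind of detail-sensitive yet view-invariant representation the abstract describes.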
