Contradistinguisher: A Vapnik's Imperative to Unsupervised Domain Adaptation

Paper and Code

Jun 10, 2020

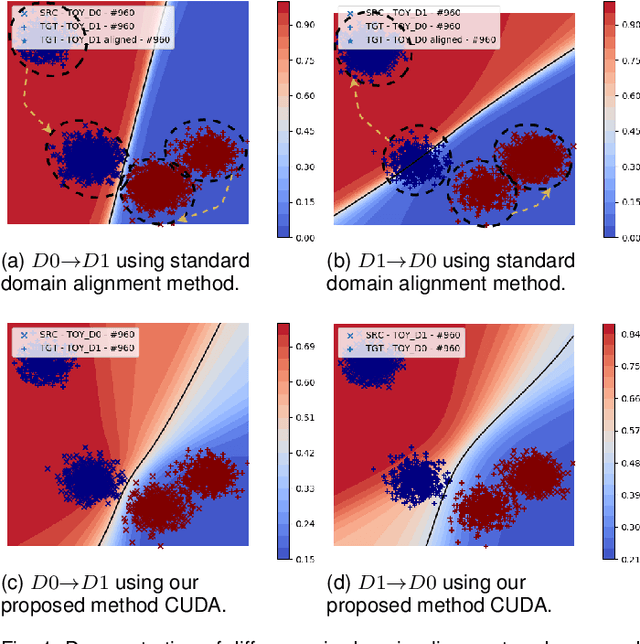

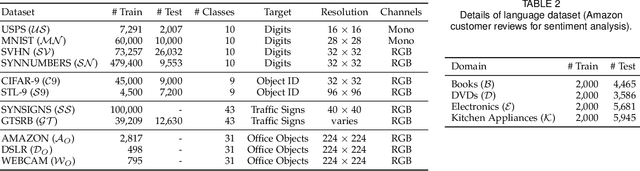

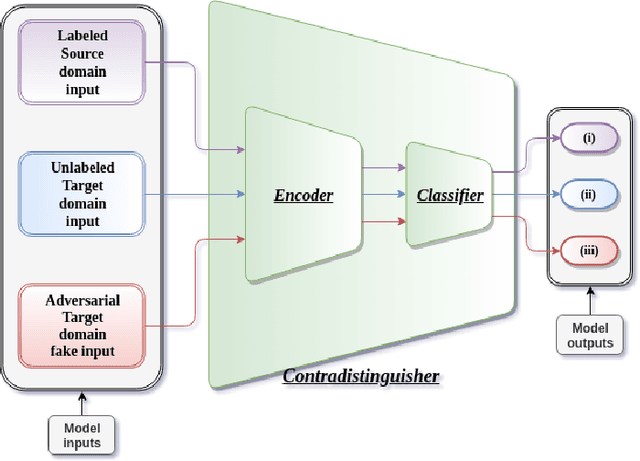

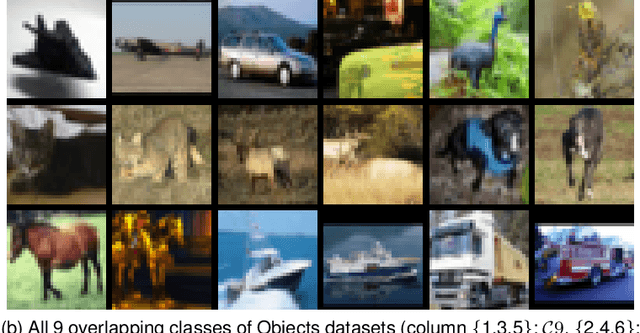

A complex combination of simultaneous supervised-unsupervised learning is believed to be the key to humans performing tasks seamlessly across multiple domains or tasks. This phenomenon of cross-domain learning has been very well studied in the domain adaptation literature. Recent domain adaptation works rely on an indirect approach: first aligning the source and target domain distributions, and then training a classifier on the labeled source domain to classify the target domain. However, this approach has the main drawback that obtaining a near-perfect alignment of the domains might itself be difficult or impossible (e.g., language domains). To address this, we follow Vapnik's imperative of statistical learning, which states that any desired problem should be solved in the most direct way rather than by solving a more general intermediate task, and propose a direct approach to domain adaptation that does not require domain alignment. We propose a model, referred to as Contradistinguisher, that learns contrastive features and whose objective is to jointly learn to contradistinguish the unlabeled target domain in an unsupervised way and to classify the source domain in a supervised way. We achieve state-of-the-art results on the Office-31 and VisDA-2017 datasets in both single-source and multi-source settings. We also demonstrate that the contradistinguish loss improves model performance by increasing the shape bias.
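The abstract does not spell out the contradistinguish loss, so the following is only a minimal sketch of the general shape of a joint objective: a supervised cross-entropy term on labeled source samples plus an unsupervised term on unlabeled target samples. The unsupervised term here is a pseudo-label (self-training) surrogate chosen for illustration; it is an assumption and not the authors' loss. The names `joint_loss` and `weight` are hypothetical.

```python
import math

def softmax(logits):
    # Numerically stable softmax over a list of floats.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, label):
    # Negative log-probability of the given class index.
    return -math.log(softmax(logits)[label])

def joint_loss(source_logits, source_labels, target_logits, weight=1.0):
    """Illustrative joint objective (assumption, not the paper's loss).

    source_logits: list of per-sample logit lists for labeled source data
    source_labels: list of integer class labels for the source samples
    target_logits: list of per-sample logit lists for unlabeled target data
    weight:        hypothetical trade-off between the two terms
    """
    # Supervised term: mean cross-entropy on the labeled source domain.
    sup = sum(
        cross_entropy(z, y) for z, y in zip(source_logits, source_labels)
    ) / len(source_logits)

    # Unsupervised surrogate: treat the model's own argmax prediction as a
    # pseudo-label for each target sample and penalize low confidence in it.
    unsup = 0.0
    for z in target_logits:
        pseudo = max(range(len(z)), key=lambda k: z[k])
        unsup += cross_entropy(z, pseudo)
    unsup /= len(target_logits)

    return sup + weight * unsup, sup, unsup

# Example: two source samples with labels, two unlabeled target samples.
total, sup, unsup = joint_loss(
    source_logits=[[2.0, 0.0], [0.0, 2.0]],
    source_labels=[0, 1],
    target_logits=[[0.5, 0.0], [0.0, 3.0]],
)
```

Both terms are computed from the same classifier outputs, so no separate domain-alignment step appears anywhere in the objective; that is the only property of the abstract's description this sketch tries to mirror.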
