Continuous Decoding of Daily-Life Hand Movements from Forearm Muscle Activity for Enhanced Myoelectric Control of Hand Prostheses

Paper and Code

Apr 29, 2021

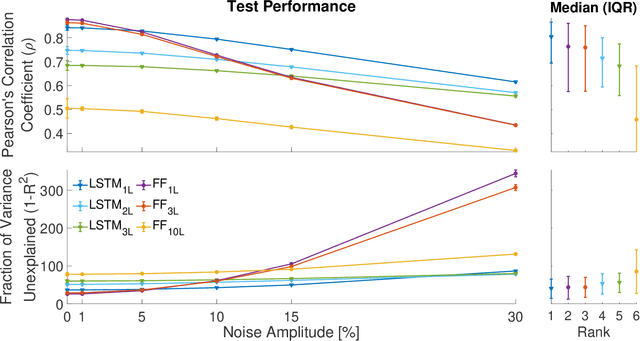

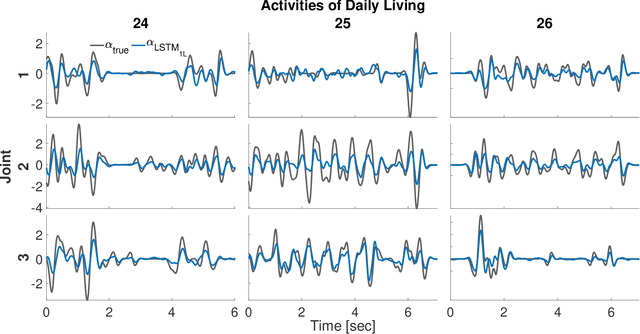

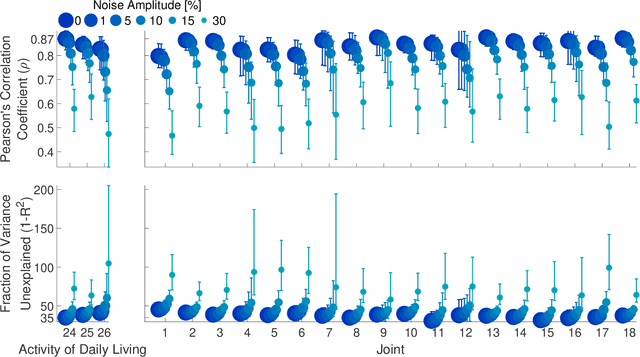

State-of-the-art motorized hand prostheses are endowed with actuators able to provide independent and proportional control of as many as six degrees of freedom (DOFs). The control signals are derived from residual electromyographic (EMG) activity, recorded concurrently from relevant forearm muscles. Nevertheless, the functional mapping between forearm EMG activity and hand kinematics is only known with limited accuracy. Therefore, no robust method exists for the reliable computation of control signals for the independent and proportional actuation of more than two DOFs. A common approach to deal with this limitation is to pre-program the prostheses for the execution of a restricted number of behaviors (e.g., pinching, grasping, and wrist rotation) that are activated by the detection of specific EMG activation patterns. However, this approach severely limits the range of activities users can perform with the prostheses in daily life. In this work, we introduce a novel method, based on a long short-term memory (LSTM) network, to continuously map forearm EMG activity onto hand kinematics. Critically, unlike previous work, which often focuses on simple and highly controlled motor tasks, we tested our method on a dataset of activities of daily living (ADLs): the KIN-MUS UJI dataset. To the best of our knowledge, ours is the first reported work on the prediction of hand kinematics that uses this challenging dataset. Remarkably, we show that our network is able to generalize to novel ADLs that were not part of the training set. Our results suggest that the presented method is suitable for the generation of control signals for the independent and proportional actuation of the multiple DOFs of state-of-the-art hand prostheses.
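As a rough illustration of what "continuously mapping EMG activity onto hand kinematics" with an LSTM means, the sketch below runs a single-layer LSTM with a linear readout over a sequence of EMG samples and emits one vector of joint angles per time step. It is not the authors' network: the class name `TinyLSTMRegressor`, the channel count, hidden size, number of output angles, and the random untrained weights are all assumptions made for illustration, and the input is synthetic rather than taken from KIN-MUS UJI.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rand_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class TinyLSTMRegressor:
    """Hypothetical single-layer LSTM with a linear readout (random, untrained weights)."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        self.n_hidden = n_hidden
        # One weight matrix per gate (input, forget, output, candidate),
        # each acting on the concatenation [x_t, h_{t-1}].
        self.W = {g: rand_matrix(n_hidden, n_in + n_hidden, rng) for g in "ifog"}
        self.b = {g: [0.0] * n_hidden for g in "ifog"}
        self.W_out = rand_matrix(n_out, n_hidden, rng)
        self.b_out = [0.0] * n_out

    def step(self, x, h, c):
        """Advance the recurrent state by one EMG sample."""
        z = list(x) + list(h)
        pre = {k: [a + b for a, b in zip(matvec(self.W[k], z), self.b[k])] for k in "ifog"}
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        o = [sigmoid(v) for v in pre["o"]]
        g = [math.tanh(v) for v in pre["g"]]
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new

    def predict(self, emg_sequence):
        """Return one joint-angle vector per time step, using only past and current samples."""
        h = [0.0] * self.n_hidden
        c = [0.0] * self.n_hidden
        outputs = []
        for x in emg_sequence:
            h, c = self.step(x, h, c)
            outputs.append([a + b for a, b in zip(matvec(self.W_out, h), self.b_out)])
        return outputs


# Illustrative sizes only (assumptions, not taken from the paper).
N_EMG, N_HIDDEN, N_ANGLES = 7, 16, 18
rng = random.Random(1)
emg = [[rng.random() for _ in range(N_EMG)] for _ in range(50)]  # synthetic EMG envelope

model = TinyLSTMRegressor(N_EMG, N_HIDDEN, N_ANGLES)
angles = model.predict(emg)
print(len(angles), len(angles[0]))  # 50 18
```

Because the recurrent state is updated sample by sample, the output at each time step depends only on EMG observed so far, which is the property a real-time prosthesis controller would need. In practice the weights would be learned from paired EMG and kinematic recordings, typically with a deep learning framework rather than hand-written loops.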
