Contextualized Query Embeddings for Conversational Search

Apr 18, 2021

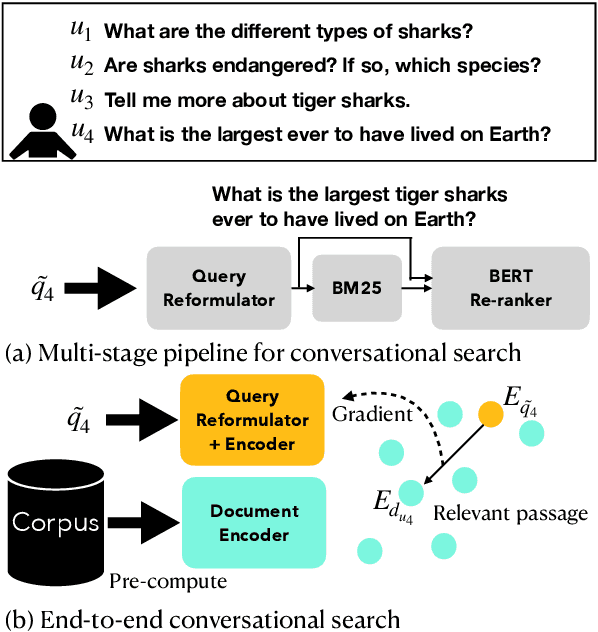

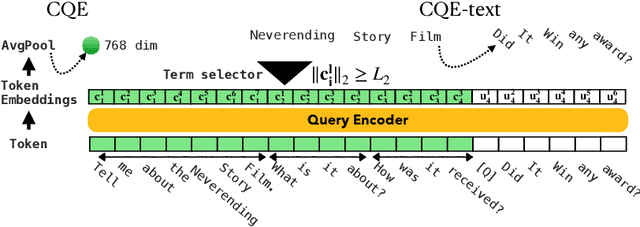

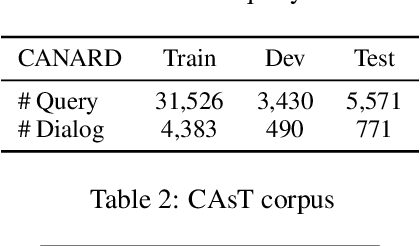

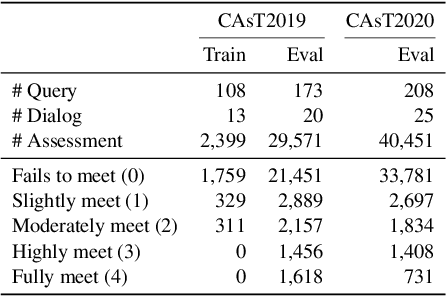

Conversational search (CS) plays a vital role in information retrieval. The current state of the art approaches the task using a multi-stage pipeline comprising conversational query reformulation and information-seeking modules. Despite its effectiveness, such a pipeline often comprises multiple neural models and thus requires long inference times. In addition, independently optimizing the effectiveness of each module does not consider the relation between modules in the pipeline. Thus, in this paper, we propose a single-stage design, which supports end-to-end training and low-latency inference. To support this goal, we create a synthetic dataset for CS to overcome the lack of training data and explore different training strategies using this dataset. Experiments demonstrate that our model yields retrieval effectiveness competitive with state-of-the-art multi-stage approaches but with lower latency. Furthermore, we show that improved retrieval effectiveness benefits the downstream task of conversational question answering.
