Context-modulation of hippocampal dynamics and deep convolutional networks


Nov 27, 2017

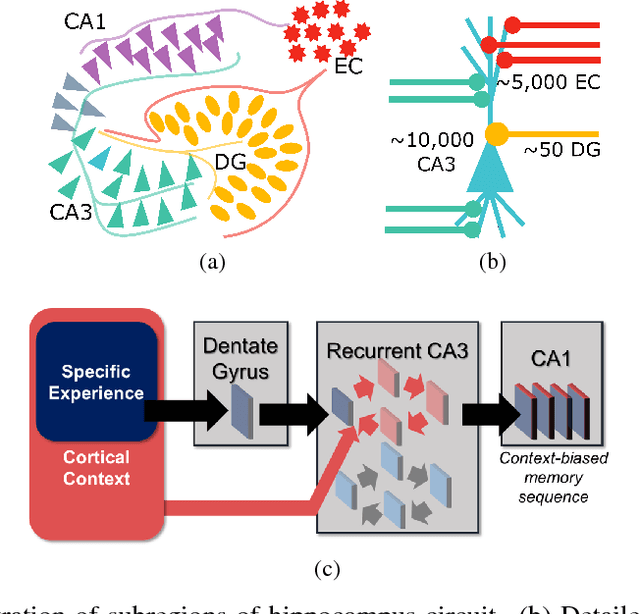

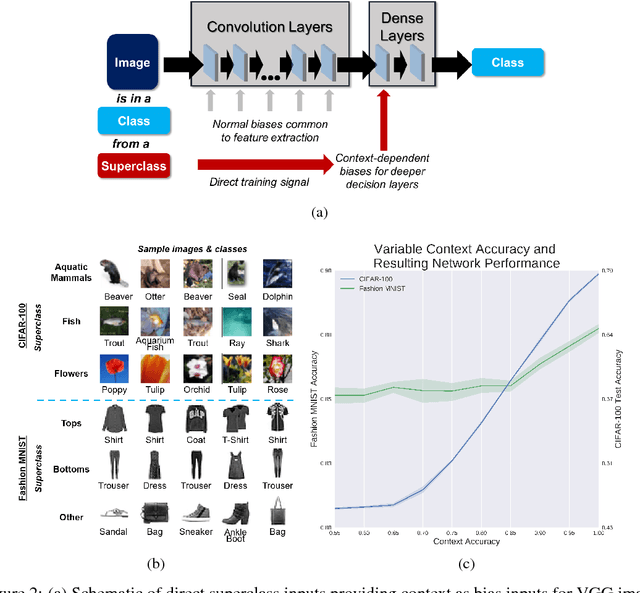

Complex architectures of biological neural circuits, such as parallel processing pathways, have been behaviorally implicated in many cognitive studies. However, the theoretical consequences of circuit complexity for neural computation have only been explored in limited cases. Here, we introduce a mechanism by which direct and indirect pathways from cortex to the CA3 region of the hippocampus can balance both contextual gating of memory formation and the driving of network activity. We implement this concept in a deep artificial neural network by enabling a context-sensitive bias. The motivation is to improve the performance of a size-constrained network. Using direct knowledge of the superclass information in the CIFAR-100 and Fashion-MNIST datasets, we show a dramatic increase in performance without an increase in network size.
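To make the idea of a context-sensitive bias concrete, the following is a minimal NumPy sketch, not the paper's implementation. It assumes the context (for example, a superclass label) is available as an integer id that selects an extra bias vector added to a layer's pre-activation. The function name `context_biased_layer`, the layer sizes, and the choice of a ReLU nonlinearity are all illustrative assumptions.

```python
import numpy as np

def context_biased_layer(x, weights, bias, context_bias, context_id):
    """ReLU layer whose bias is shifted by a per-context vector (illustrative).

    x:            (batch, n_in) inputs
    weights:      (n_in, n_out) shared weights
    bias:         (n_out,) shared bias
    context_bias: (n_contexts, n_out) one extra bias vector per context
    context_id:   (batch,) integer context label for each example
    """
    # Each example picks the bias row for its own context; weights stay shared.
    pre_activation = x @ weights + bias + context_bias[context_id]
    return np.maximum(pre_activation, 0.0)

# Hypothetical sizes; 20 matches the number of CIFAR-100 superclasses.
rng = np.random.default_rng(0)
n_in, n_out, n_contexts = 8, 4, 20
x = rng.normal(size=(3, n_in))
weights = rng.normal(size=(n_in, n_out))
bias = np.zeros(n_out)
context_bias = rng.normal(size=(n_contexts, n_out))
context_id = np.array([0, 5, 5])

out = context_biased_layer(x, weights, bias, context_bias, context_id)
```

In this sketch the context adds only `n_contexts * n_out` parameters and leaves the shared weights untouched. A strongly negative context bias silences a unit for that context, which is one way a bias term can act as a gate.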
