Context-based Image Segment Labeling (CBISL)

Paper and Code

Nov 02, 2020

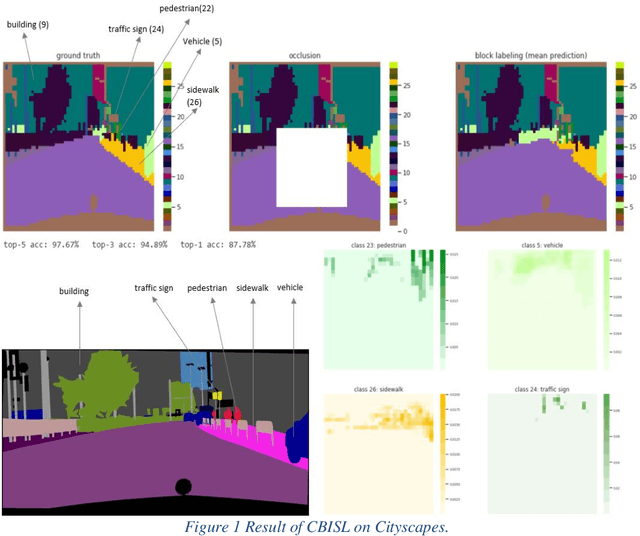

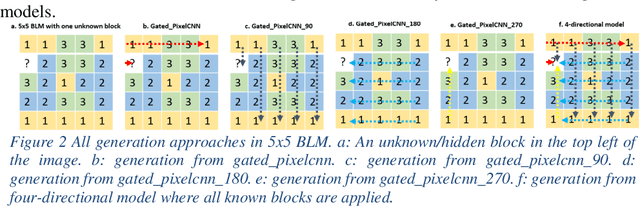

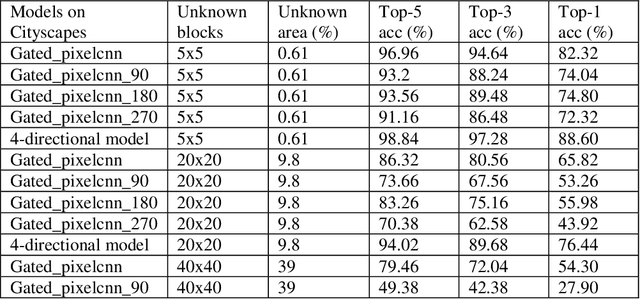

When working with images, one often faces incomplete or unclear information. Image inpainting can restore missing image regions, but it focuses on low-level image features such as pixel intensity, pixel gradient orientation, and color. This paper instead aims to recover semantic image features, namely objects and their positions. Building on the published gated PixelCNN, we present a new approach, the quadro-directional PixelCNN, which recovers missing objects and returns probable positions for objects based on the context. We call this approach context-based image segment labeling (CBISL). The results suggest that our four-directional model outperforms one-directional models (gated PixelCNN) and achieves performance comparable to that of humans.
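To illustrate why four directions can help, the following minimal sketch builds the standard causal (type-A) convolution mask used by PixelCNN-style models and rotates it into four orientations. The abstract does not say how the quadro-directional model constructs or combines its directions, so the rotation scheme and the helper names `causal_mask` and `four_direction_masks` are assumptions made purely for illustration, not the authors' implementation.

```python
import numpy as np


def causal_mask(k: int) -> np.ndarray:
    """Type-A PixelCNN mask for a k x k kernel (k odd).

    Ones mark positions the model may look at: every row above the
    centre, plus the cells left of the centre in the centre row.
    The centre itself is hidden.
    """
    mask = np.zeros((k, k), dtype=np.uint8)
    c = k // 2
    mask[:c, :] = 1   # all rows above the centre row
    mask[c, :c] = 1   # cells left of the centre in the centre row
    return mask


def four_direction_masks(k: int) -> list:
    """Hypothetical helper: the causal mask in four 90-degree rotations.

    Assumption: each rotation stands for one scanning direction.
    """
    base = causal_mask(k)
    return [np.rot90(base, r) for r in range(4)]


masks = four_direction_masks(3)

# Union of the context visible across all four directions.
coverage = (np.sum(masks, axis=0) > 0).astype(np.uint8)
```

Under this assumption, a single direction sees only half of the neighbourhood around a position, while the union over the four rotations covers every neighbour except the position being predicted. That is the intuition behind using context from all sides to label a missing segment.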
