Context Aware Robot Navigation using Interactively Built Semantic Maps

Paper and Code

Aug 14, 2018

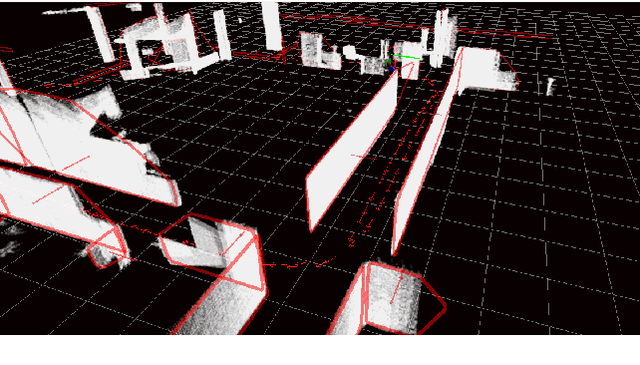

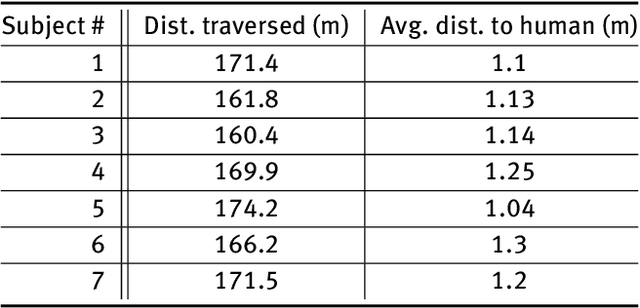

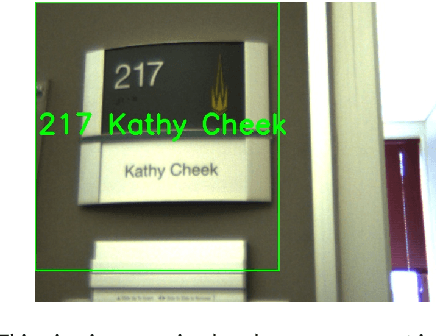

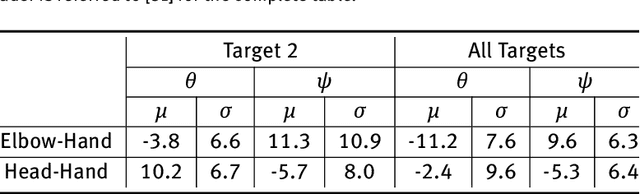

We discuss the process of building semantic maps, how to interactively label entities in them, and how to use them to enable context-aware navigation behaviors in human environments. We use planar surfaces, such as walls and tables, and static objects, such as door signs, as features for our semantic mapping approach. Users can interactively annotate these features by having the robot follow them, entering the label through a mobile app, and performing a pointing gesture toward the landmark of interest. Our gesture-based approach uses probabilistic modeling to reliably estimate which object is being pointed at and to detect ambiguous gestures. Our person-following method attempts to maximize future utility by searching over future actions, assuming a constant-velocity model for the human. We describe a method to extract metric goals from a semantic map landmark and to plan a human-aware path that takes into account the personal spaces of people. Finally, we demonstrate context-awareness for person following in two scenarios: interactive labeling and door passing. We believe that future navigation approaches and service robotics applications can be made more effective by further exploiting the structure of human environments.
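To make the person-following idea concrete, the following is a minimal sketch, not the authors' implementation. It assumes a unicycle robot model, a small discrete set of candidate `(linear, angular)` velocity commands, and a hypothetical utility that rewards staying near a preferred following distance; the abstract specifies only the constant-velocity human model and the search over future actions. All function names and parameter values are illustrative.

```python
import math

def predict_human(pos, vel, t):
    """Constant-velocity prediction of the human position t seconds ahead."""
    return (pos[0] + vel[0] * t, pos[1] + vel[1] * t)

def step_robot(pose, v, w, dt):
    """Advance a unicycle robot pose (x, y, heading) by one time step."""
    x, y, th = pose
    th_new = th + w * dt
    return (x + v * math.cos(th_new) * dt,
            y + v * math.sin(th_new) * dt,
            th_new)

def utility(robot_pose, human_pos, desired_dist=1.0):
    """Hypothetical utility: penalize deviation from a preferred following distance."""
    d = math.hypot(human_pos[0] - robot_pose[0], human_pos[1] - robot_pose[1])
    return -abs(d - desired_dist)

def best_action(robot_pose, human_pos, human_vel, actions, horizon=2.0, dt=0.5):
    """Return the constant (v, w) command with the highest summed utility over the horizon."""
    steps = int(round(horizon / dt))
    best, best_u = None, -math.inf
    for v, w in actions:
        pose, total = robot_pose, 0.0
        for k in range(1, steps + 1):
            pose = step_robot(pose, v, w, dt)
            total += utility(pose, predict_human(human_pos, human_vel, k * dt))
        if total > best_u:
            best, best_u = (v, w), total
    return best

# Human 2 m ahead, walking away at 1 m/s; the robot chooses among three commands.
candidates = [(0.0, 0.0), (1.0, 0.0), (0.5, 1.0)]
print(best_action((0.0, 0.0, 0.0), (2.0, 0.0), (1.0, 0.0), candidates))
```

In this toy scenario the straight-ahead command scores best because it is the only candidate that keeps the gap to the predicted human positions from growing. A practical system would also need obstacle checks and a richer action set, which this sketch omits.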
