Context-Aware Attention for Understanding Twitter Abuse

Sep 24, 2018

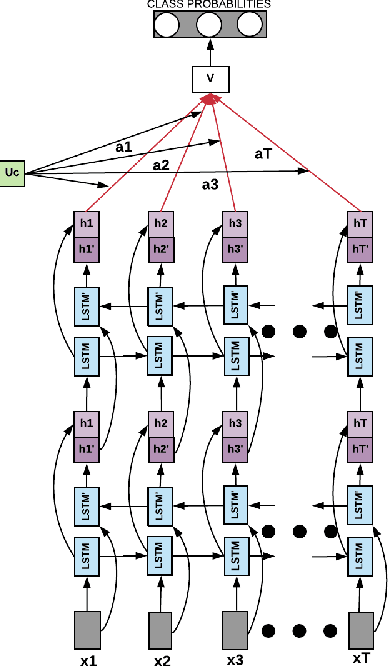

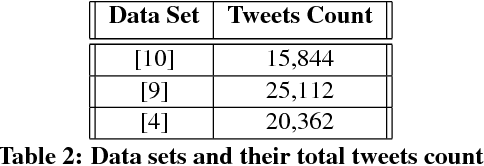

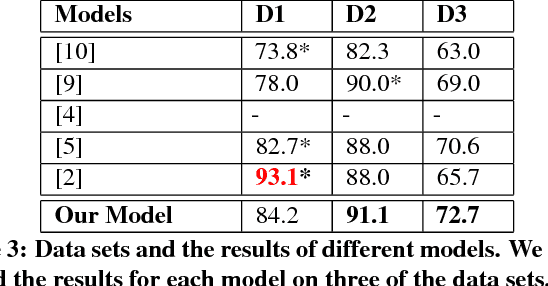

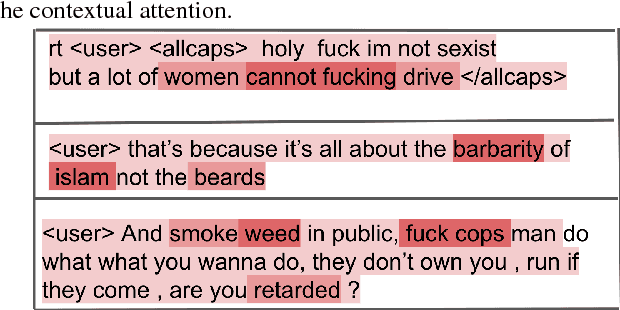

Social media platforms were originally meant to let users engage in healthy and meaningful conversations. More often than not, however, they become an avenue for wanton attacks. To help alleviate this issue, we provide a detailed analysis of how abusive behavior can be monitored on Twitter. The complexity of natural language constructs makes this task challenging. We show how applying contextual attention to Long Short-Term Memory networks helps us achieve near state-of-the-art results on multiple benchmark abuse detection datasets from Twitter.
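The abstract does not spell out the attention formulation, so the following is only a minimal sketch of one common form of context-based attention over recurrent hidden states, not necessarily the authors' method. It assumes the LSTM has already produced one hidden vector per token. The names `context_attention`, `W`, `b`, and `context` are hypothetical, and the numbers are toy values rather than learned parameters.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def context_attention(hidden_states, W, b, context):
    """Pool per-token hidden states into one vector.

    Assumed formulation (illustrative only):
      u_t     = tanh(W h_t + b)
      score_t = u_t . context
      alpha   = softmax(score)
      pooled  = sum_t alpha_t * h_t
    """
    scores = []
    for h in hidden_states:
        # Project the hidden state, then compare it with the context vector.
        u = [
            math.tanh(sum(w * x for w, x in zip(row, h)) + bias)
            for row, bias in zip(W, b)
        ]
        scores.append(sum(u_i * c_i for u_i, c_i in zip(u, context)))
    weights = softmax(scores)
    dim = len(hidden_states[0])
    # Attention-weighted sum of the original hidden states.
    pooled = [
        sum(a * h[k] for a, h in zip(weights, hidden_states))
        for k in range(dim)
    ]
    return weights, pooled


# Toy stand-ins for LSTM outputs of a three-token tweet (hypothetical values).
hidden_states = [[0.1, 0.2], [0.9, -0.4], [0.0, 0.3]]
W = [[1.0, 0.0], [0.0, 1.0]]  # identity projection, for readability
b = [0.0, 0.0]
context = [1.0, 0.0]          # in a real model this vector would be learned

weights, pooled = context_attention(hidden_states, W, b, context)
```

In this toy setup the second token receives the largest weight because its projected state aligns best with the context vector. In a trained model, `W`, `b`, and `context` would be learned jointly with the LSTM, and `pooled` would feed a classifier.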
