Constructing Stronger and Faster Baselines for Skeleton-based Action Recognition

Paper and Code

Jun 29, 2021

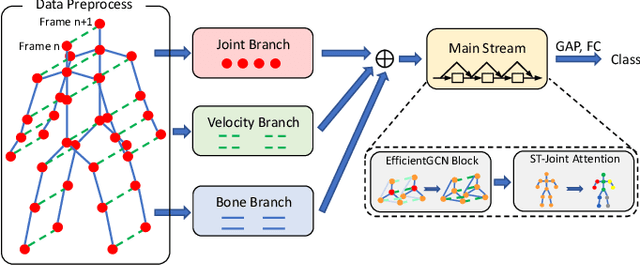

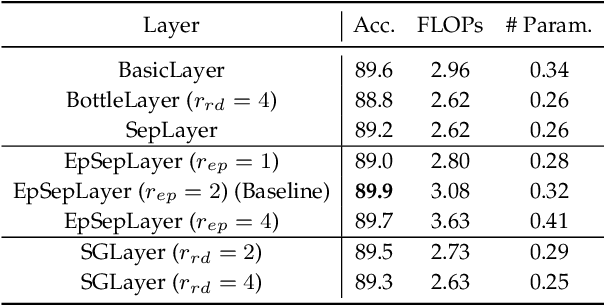

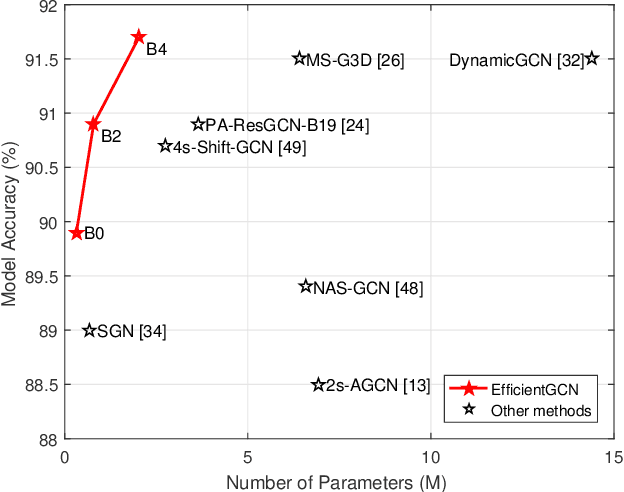

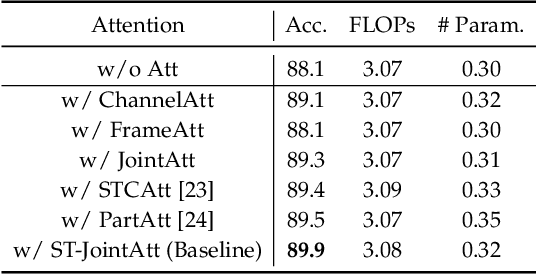

One essential problem in skeleton-based action recognition is how to extract discriminative features over all skeleton joints. However, the complexity of the recent State-Of-The-Art (SOTA) models for this task tends to be exceedingly sophisticated and over-parameterized. The low efficiency in model training and inference has increased the validation costs of model architectures in large-scale datasets. To address the above issue, recent advanced separable convolutional layers are embedded into an early fused Multiple Input Branches (MIB) network, constructing an efficient Graph Convolutional Network (GCN) baseline for skeleton-based action recognition. In addition, based on such the baseline, we design a compound scaling strategy to expand the model's width and depth synchronously, and eventually obtain a family of efficient GCN baselines with high accuracies and small amounts of trainable parameters, termed EfficientGCN-Bx, where ''x'' denotes the scaling coefficient. On two large-scale datasets, i.e., NTU RGB+D 60 and 120, the proposed EfficientGCN-B4 baseline outperforms other SOTA methods, e.g., achieving 91.7% accuracy on the cross-subject benchmark of NTU 60 dataset, while being 3.15x smaller and 3.21x faster than MS-G3D, which is one of the best SOTA methods. The source code in PyTorch version and the pretrained models are available at https://github.com/yfsong0709/EfficientGCNv1.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge