Connecting Lyapunov Control Theory to Adversarial Attacks

Paper and Code

Jul 17, 2019

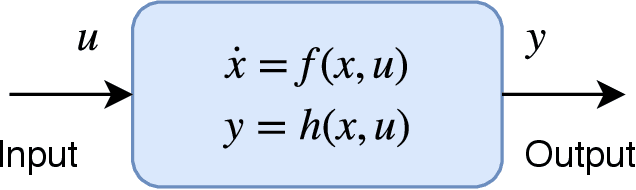

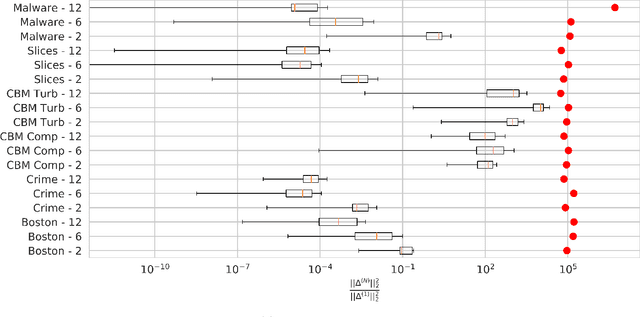

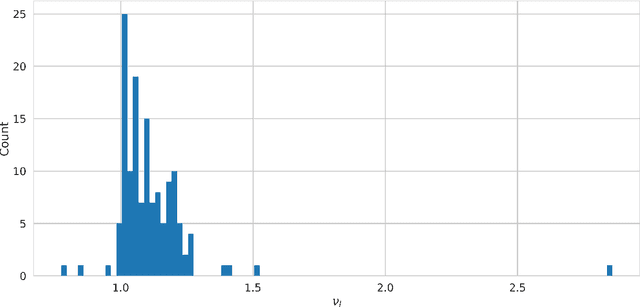

Significant work is being done to develop the math and tools necessary to build provable defenses, or at least bounds, against adversarial attacks on neural networks. In this work, we argue that tools from control theory could be leveraged to aid in defending against such attacks. We do this by example, building a provable defense against a weaker adversary. Restricting attention to a weaker adversary lets us focus on the mechanisms of control theory and illuminate its intrinsic value.

* 8 pages, 3 figures, AdvML'19: Workshop on Adversarial Learning Methods for Machine Learning and Data Mining at KDD
