Confidence Scoring Using Whitebox Meta-models with Linear Classifier Probes


May 14, 2018

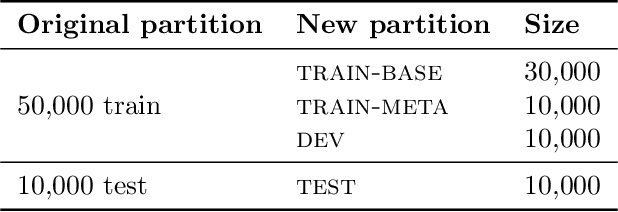

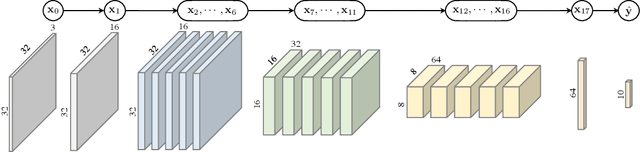

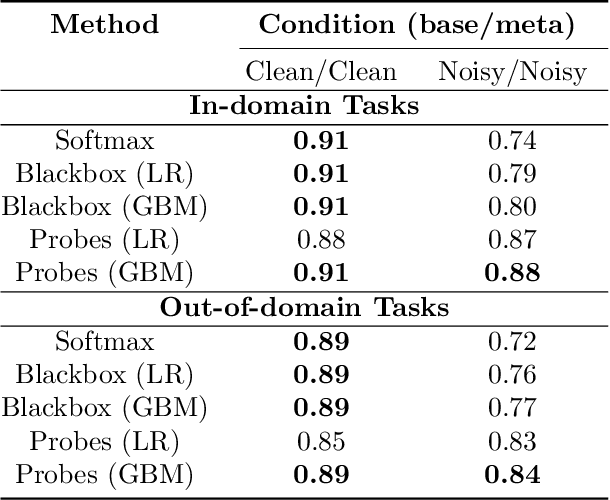

We propose a confidence scoring mechanism for multi-layer neural networks based on a paradigm of a base model and a meta-model. The confidence score is learned by the meta-model using features derived from the base model (a deep multi-layer neural network), which is treated as a whitebox. As features, we investigate linear classifier probes inserted between the various layers of the base model and trained on each layer's intermediate activations. Experiments show that this approach outperforms various baselines in a filtering task, i.e., the task of rejecting samples with low confidence. Experimental results are presented on the CIFAR-10 and CIFAR-100 datasets, with and without added noise, exploring various aspects of the method.
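The base-model/meta-model pipeline described above can be sketched in a few lines of NumPy. This is a minimal illustration under our own assumptions, not the authors' implementation. The "base model" is replaced by hypothetical synthetic per-layer activations whose noise grows with a per-sample difficulty. Each probe is a linear softmax classifier fit by plain gradient descent. The meta-model is a logistic regression over two hand-picked summary features per probe: its maximum probability and the probability it assigns to the base model's predicted class. All function and variable names are illustrative. The paper's actual feature set, meta-model, and data splits may differ.

```python
import numpy as np

rng = np.random.default_rng(0)


def softmax(z):
    """Row-wise softmax, shifted by the row max for numerical stability."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_linear_probe(h, y, n_classes, lr=0.1, epochs=500):
    """Fit a linear softmax classifier (a probe) on one layer's activations h."""
    n, d = h.shape
    W, b = np.zeros((d, n_classes)), np.zeros(n_classes)
    Y = np.eye(n_classes)[y]  # one-hot targets
    for _ in range(epochs):
        g = (softmax(h @ W + b) - Y) / n  # cross-entropy gradient w.r.t. logits
        W -= lr * h.T @ g
        b -= lr * g.sum(axis=0)
    return W, b


def meta_features(layers, probes, base_pred):
    """Per probe: its max probability and the probability it gives the base prediction."""
    rows = np.arange(len(base_pred))
    cols = []
    for h, (W, b) in zip(layers, probes):
        p = softmax(h @ W + b)
        cols += [p.max(axis=1), p[rows, base_pred]]
    return np.column_stack(cols)


def train_meta_model(x, correct, lr=0.5, epochs=2000):
    """Logistic regression predicting whether the base model's prediction is correct."""
    w, c = np.zeros(x.shape[1]), 0.0
    for _ in range(epochs):
        g = (sigmoid(x @ w + c) - correct) / len(x)  # log-loss gradient w.r.t. logits
        w -= lr * x.T @ g
        c -= lr * g.sum()
    return w, c


# Hypothetical stand-in for a whitebox base model: per-layer activations whose
# noise grows with a per-sample difficulty; harder samples are misclassified more often.
n, d, k, n_layers = 2000, 16, 4, 3
y = rng.integers(0, k, n)
difficulty = rng.random(n)
means = rng.normal(size=(n_layers, k, d))
scale = (0.5 + 2.0 * difficulty)[:, None]
layers = [means[l][y] + rng.normal(size=(n, d)) * scale for l in range(n_layers)]
wrong = rng.random(n) < difficulty
base_pred = np.where(wrong, (y + rng.integers(1, k, n)) % k, y)  # a different class when wrong
correct = (base_pred == y).astype(float)

# Train probes and meta-model on the first half, score the second half.
tr, te = slice(0, n // 2), slice(n // 2, n)
probes = [train_linear_probe(h[tr], y[tr], k) for h in layers]
x = meta_features(layers, probes, base_pred)
w, c = train_meta_model(x[tr], correct[tr])
conf = sigmoid(x[te] @ w + c)

# Filtering task: reject the least confident half of the held-out samples.
keep = conf >= np.median(conf)
overall_acc = correct[te].mean()
filtered_acc = correct[te][keep].mean()
print(f"base-model accuracy, all held-out samples: {overall_acc:.3f}")
print(f"base-model accuracy, most confident half:  {filtered_acc:.3f}")
```

Summarizing each probe before the meta-model is a deliberate choice in this sketch. A linear meta-model fed the raw probability vectors cannot express "this probe is sharp" independently of which class it is sharp on. Sweeping the rejection threshold instead of fixing it at the median traces the full accuracy-versus-retention curve for the filtering task.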

View paper on OpenReview
