CondNet: Conditional Classifier for Scene Segmentation

Paper and Code

Sep 21, 2021

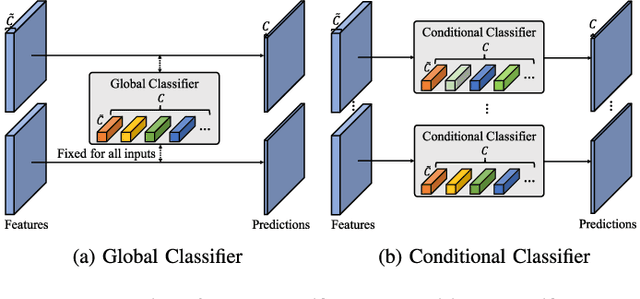

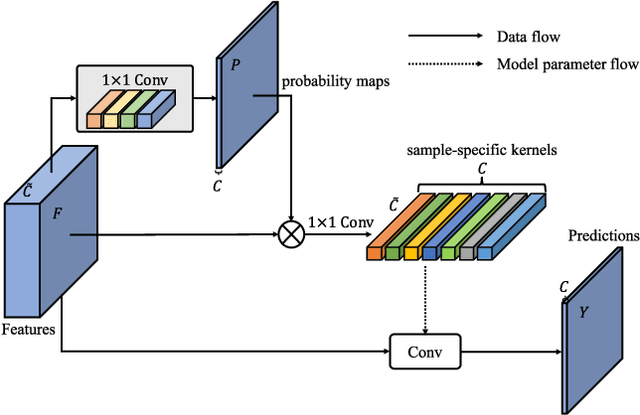

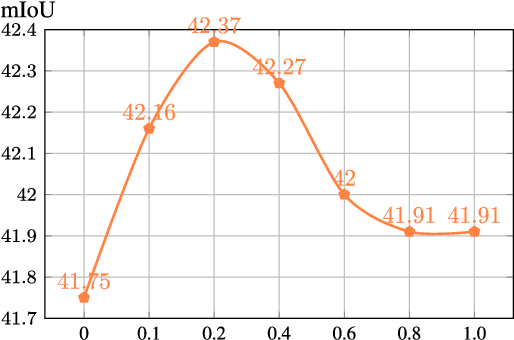

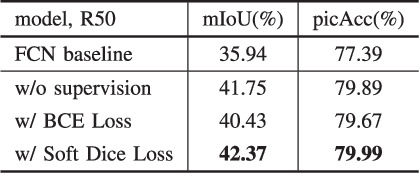

The fully convolutional network (FCN) has achieved tremendous success in dense visual recognition tasks, such as scene segmentation. The last layer of FCN is typically a global classifier (1x1 convolution) to recognize each pixel to a semantic label. We empirically show that this global classifier, ignoring the intra-class distinction, may lead to sub-optimal results. In this work, we present a conditional classifier to replace the traditional global classifier, where the kernels of the classifier are generated dynamically conditioned on the input. The main advantages of the new classifier consist of: (i) it attends on the intra-class distinction, leading to stronger dense recognition capability; (ii) the conditional classifier is simple and flexible to be integrated into almost arbitrary FCN architectures to improve the prediction. Extensive experiments demonstrate that the proposed classifier performs favourably against the traditional classifier on the FCN architecture. The framework equipped with the conditional classifier (called CondNet) achieves new state-of-the-art performances on two datasets. The code and models are available at https://git.io/CondNet.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge