Conditional Hallucinations for Image Compression

Oct 25, 2024

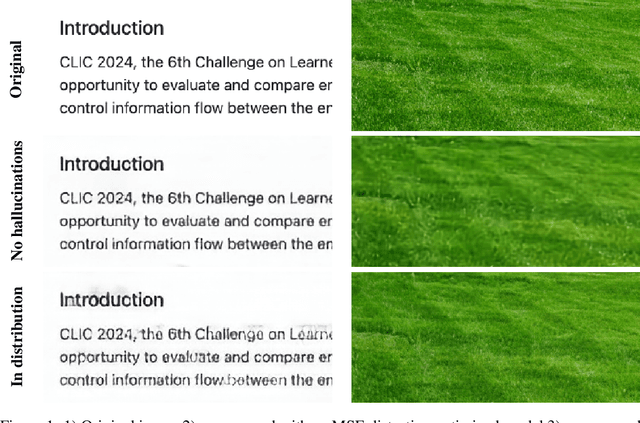

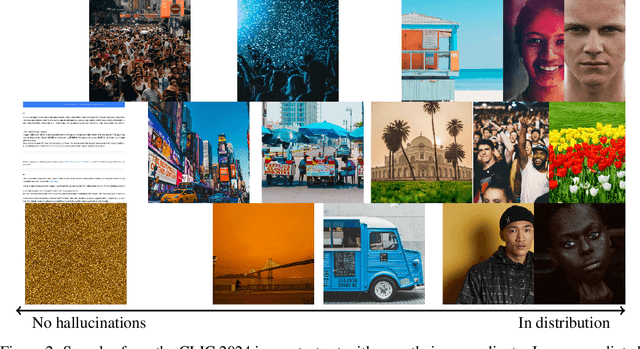

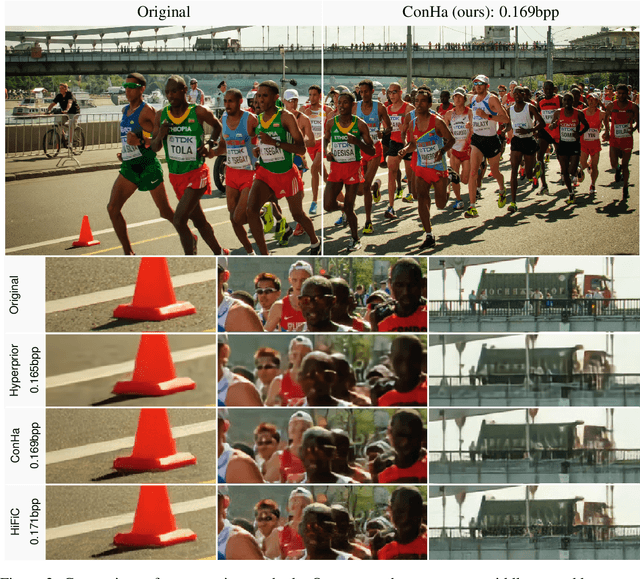

In lossy image compression, the information bottleneck forces a model either to hallucinate details or to generate out-of-distribution samples. Hallucination is therefore sometimes necessary to keep reconstructions in-distribution. The optimal level of hallucination depends on image content, because humans are sensitive to small changes that alter semantic meaning. We propose a novel compression method that dynamically balances the degree of hallucination based on content. We collect data and train a model to predict user preferences on hallucinations. By using this prediction to adjust the perceptual weight in the reconstruction loss, we develop a Conditionally Hallucinating compression model (ConHa) that outperforms state-of-the-art image compression methods. Code and images are available at https://polybox.ethz.ch/index.php/s/owS1k5JYs4KD4TA.
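
The idea of scaling the perceptual weight by a predicted preference can be illustrated with a minimal sketch. It is not the ConHa implementation: the abstract does not give the loss formula, so the additive form below, the function names `mse` and `conditional_loss`, the parameter `max_perceptual_weight`, and the assumption that the preference score lies in [0, 1] are all hypothetical choices made for illustration. The perceptual term is passed in as a precomputed number, standing in for whatever perceptual or adversarial loss a real model would use.

```python
from typing import Sequence


def mse(original: Sequence[float], reconstruction: Sequence[float]) -> float:
    """Mean squared error between two equally long pixel sequences."""
    if len(original) != len(reconstruction) or not original:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - b) ** 2 for a, b in zip(original, reconstruction)) / len(original)


def conditional_loss(
    original: Sequence[float],
    reconstruction: Sequence[float],
    perceptual_term: float,
    preference: float,
    max_perceptual_weight: float = 1.0,
) -> float:
    """Hypothetical content-conditioned reconstruction loss.

    `preference` is an assumed score in [0, 1] from a preference predictor:
    0 means viewers are expected to reject hallucinated detail for this
    content, 1 means they are expected to accept it.
    """
    if not 0.0 <= preference <= 1.0:
        raise ValueError("preference must lie in [0, 1]")
    # Assumption: the perceptual weight grows linearly with the preference.
    weight = preference * max_perceptual_weight
    return mse(original, reconstruction) + weight * perceptual_term


# Low predicted tolerance for hallucination: the distortion term dominates.
strict = conditional_loss([0.0, 1.0], [1.0, 1.0], perceptual_term=2.0, preference=0.0)
# High predicted tolerance: the perceptual term is weighted in fully.
lenient = conditional_loss([0.0, 1.0], [1.0, 1.0], perceptual_term=2.0, preference=1.0)
```

Under these assumptions, content where hallucinations are unwelcome is trained mostly toward pixel fidelity, while content where they are acceptable gives the perceptual term more influence.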
