Concentrated Document Topic Model


Feb 06, 2021

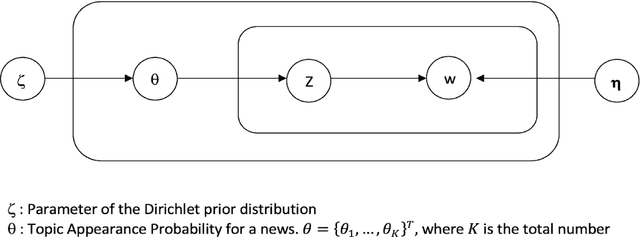

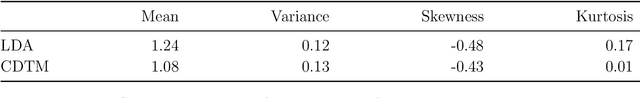

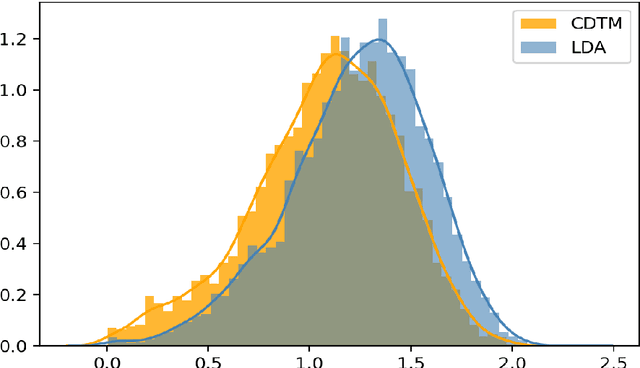

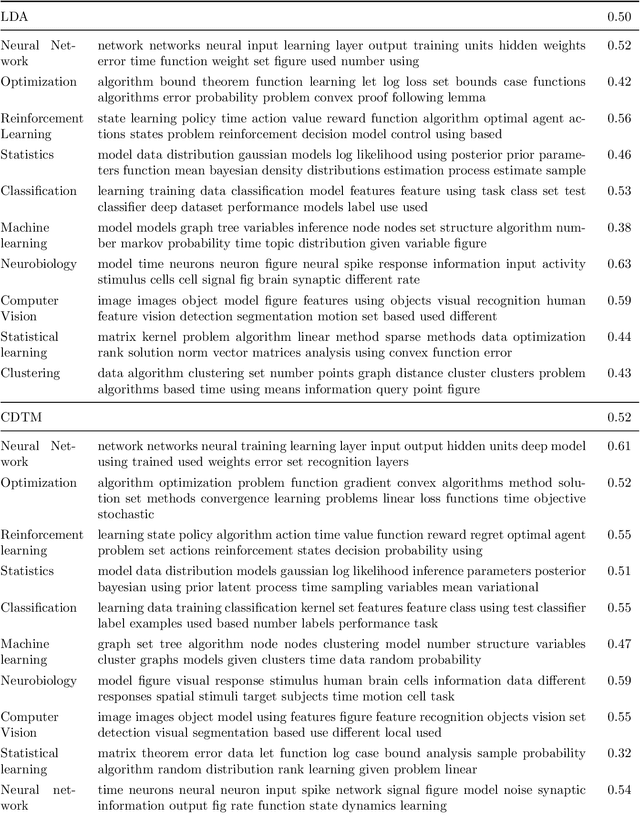

We propose a Concentrated Document Topic Model (CDTM) for unsupervised text classification that produces concentrated and sparse document-topic distributions. In particular, an exponential entropy penalty is imposed on each document's topic distribution: documents with diverse topic distributions are penalized more, while those with concentrated topics are penalized less. We apply the model to the benchmark NIPS dataset and observe more coherent topics, as well as more concentrated and sparse document-topic distributions, than with Latent Dirichlet Allocation (LDA).
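To make the penalty's behavior concrete, the sketch below computes an exponential entropy, exp(H(θ)), for a document-topic vector θ. This is an illustration under stated assumptions, not the authors' formulation: it assumes the penalty is the exponential of the Shannon entropy (natural log), and the helper names and the way the penalty is combined with the log-likelihood (`penalized_objective`, weight `lam`) are hypothetical. Under this assumption the penalty equals 1 for a one-hot distribution and K for a uniform distribution over K topics, so diverse documents are penalized more than concentrated ones.

```python
import math
from typing import Sequence


def shannon_entropy(theta: Sequence[float]) -> float:
    # Natural-log entropy; zero-probability topics contribute nothing (0 * log 0 := 0).
    return -sum(p * math.log(p) for p in theta if p > 0)


def exponential_entropy_penalty(theta: Sequence[float]) -> float:
    # Assumption: the penalty is exp(H(theta)), the exponential of Shannon entropy.
    total = sum(theta)
    if total <= 0:
        raise ValueError("topic weights must have a positive sum")
    # Normalize so the input need not sum exactly to 1.
    normalized = [p / total for p in theta]
    # exp(H) lies in [1, K]: 1 for a one-hot vector, K for a uniform one over K topics.
    return math.exp(shannon_entropy(normalized))


def penalized_objective(log_likelihood: float,
                        doc_topic: Sequence[Sequence[float]],
                        lam: float) -> float:
    # Hypothetical combination: data fit minus lam times the summed per-document penalty.
    return log_likelihood - lam * sum(exponential_entropy_penalty(t) for t in doc_topic)


if __name__ == "__main__":
    concentrated = [0.97, 0.01, 0.01, 0.01]
    diverse = [0.25, 0.25, 0.25, 0.25]
    print(exponential_entropy_penalty(concentrated))  # about 1.18
    print(exponential_entropy_penalty(diverse))       # about 4.0
```

Because exp(H) grows with the effective number of topics a document uses, maximizing a penalized objective of this kind pushes each document toward a small number of dominant topics, which is the concentrating effect the abstract describes.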

* arXiv admin note: text overlap with arXiv:2102.03525
