Computational Advantages of Relevance Reasoning in Bayesian Belief Networks


Feb 06, 2013

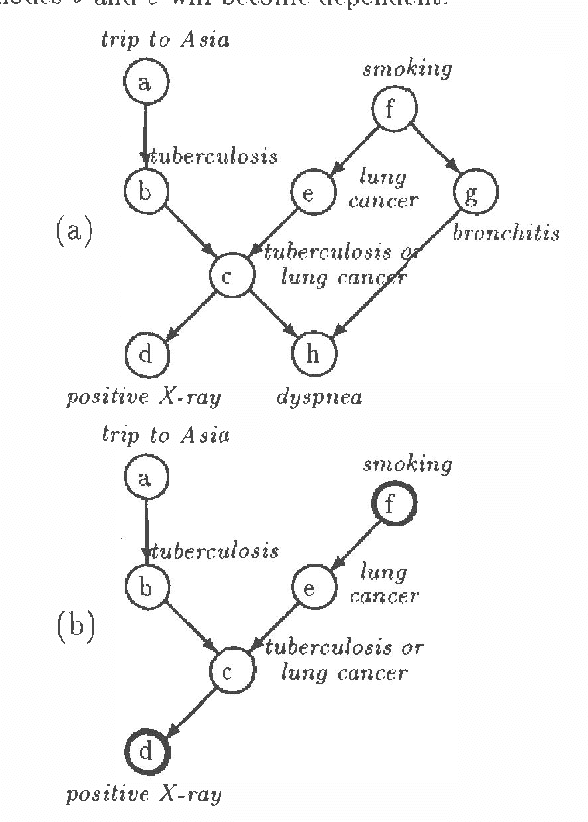

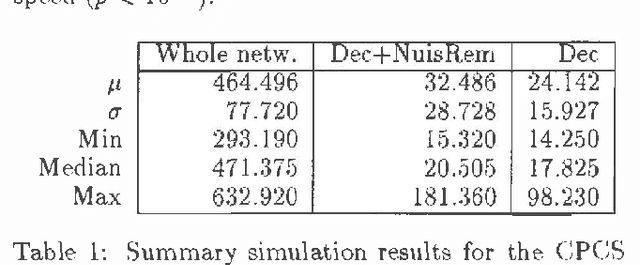

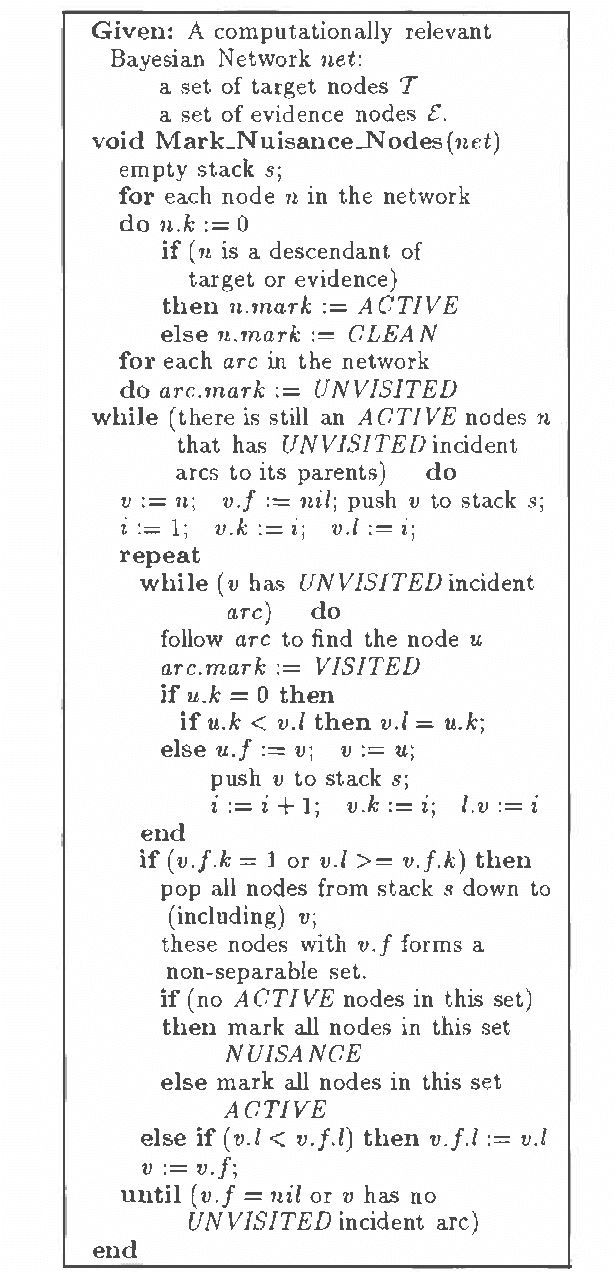

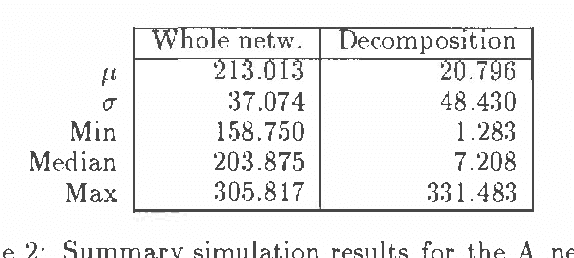

This paper introduces a computational framework for reasoning in Bayesian belief networks that derives significant advantages from focused inference and relevance reasoning. The framework is based on d-separation and other simple, computationally efficient techniques for pruning irrelevant parts of a network. Our main contribution is a technique that we call relevance-based decomposition. Relevance-based decomposition approaches belief updating in large networks by focusing on their parts and decomposing them into partially overlapping subnetworks. This makes reasoning possible in some networks that are otherwise intractable and, in addition, often yields a significant speedup, because the total time taken to update all subnetworks is in practice often considerably less than the time taken to update the network as a whole. We report results of empirical tests that demonstrate the practical significance of our approach.
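To make the pruning idea concrete, here is a minimal Python sketch of query-focused pruning. It is an illustration under stated assumptions, not the paper's implementation: the abstract does not give algorithmic details, so the function names (`ancestors`, `relevant_nodes`), the parent-list representation, and the toy network are all hypothetical. The sketch drops barren nodes (nodes that are not ancestors of any target or evidence node) and then uses the standard moralized-ancestral-graph test for d-separation to drop nodes that the evidence separates from the targets. It assumes targets and evidence are disjoint.

```python
from collections import deque


def ancestors(parents, nodes):
    """Return `nodes` together with all of their ancestors."""
    seen = set(nodes)
    stack = list(nodes)
    while stack:
        node = stack.pop()
        for parent in parents.get(node, ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


def relevant_nodes(parents, targets, evidence):
    """Nodes kept for the query P(targets | evidence) in this sketch.

    `parents` maps each node to a list of its parents (roots may be omitted).
    Assumes `targets` and `evidence` are disjoint.
    """
    targets, evidence = set(targets), set(evidence)

    # Step 1: remove barren nodes by keeping only the ancestral set.
    keep = ancestors(parents, targets | evidence)

    # Step 2: moralize the ancestral graph (link each child to its parents,
    # "marry" co-parents, and ignore edge directions).
    adj = {node: set() for node in keep}
    for child in keep:
        ps = list(parents.get(child, ()))
        for p in ps:
            adj[child].add(p)
            adj[p].add(child)
        for i, a in enumerate(ps):
            for b in ps[i + 1:]:
                adj[a].add(b)
                adj[b].add(a)

    # Step 3: search from the targets; evidence nodes are recorded when
    # reached but block the search, so anything beyond them is d-separated.
    reached = set(targets)
    queue = deque(targets)
    while queue:
        node = queue.popleft()
        for neighbor in adj[node]:
            if neighbor not in reached:
                reached.add(neighbor)
                if neighbor not in evidence:
                    queue.append(neighbor)
    return reached


# Hypothetical toy network (the classic sprinkler example plus one extra node).
network = {
    "Sprinkler": ["Cloudy"],
    "Rain": ["Cloudy"],
    "WetGrass": ["Sprinkler", "Rain"],
    "Slippery": ["WetGrass"],
}

print(sorted(relevant_nodes(network, ["Rain"], ["Cloudy"])))
print(sorted(relevant_nodes(network, ["Sprinkler"], ["WetGrass"])))
```

In the first query, `Sprinkler`, `WetGrass`, and `Slippery` are barren and can be dropped before any belief updating; in the second, only `Slippery` is dropped. A real system would then run an exact or approximate inference algorithm on the reduced network, which is where the savings described in the abstract would come from.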
