Compositional 3D Scene Generation using Locally Conditioned Diffusion

Paper and Code

Mar 23, 2023

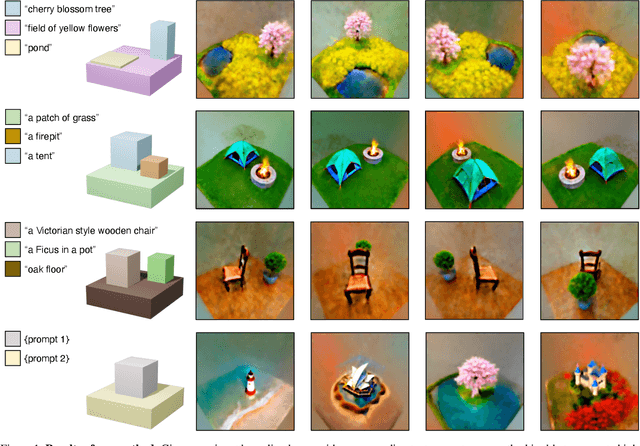

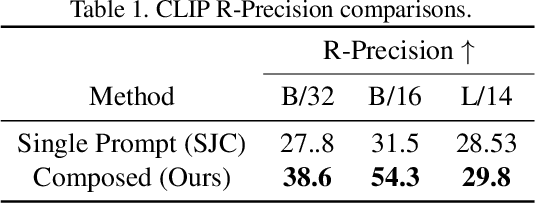

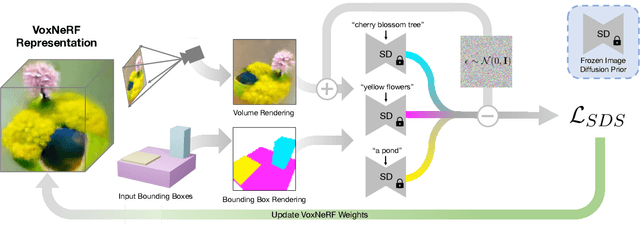

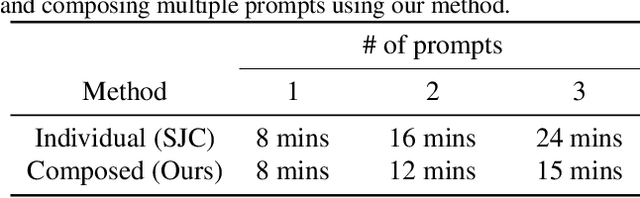

Designing complex 3D scenes has been a tedious, manual process requiring domain expertise. Emerging text-to-3D generative models show great promise for making this task more intuitive, but existing approaches are limited to object-level generation. We introduce \textbf{locally conditioned diffusion} as an approach to compositional scene diffusion, providing control over semantic parts using text prompts and bounding boxes while ensuring seamless transitions between these parts. We demonstrate a score distillation sampling--based text-to-3D synthesis pipeline that enables compositional 3D scene generation at a higher fidelity than relevant baselines.

* For project page, see https://ryanpo.com/comp3d/

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge