Comparing Deep Neural Nets with UMAP Tour

Paper and Code

Oct 18, 2021

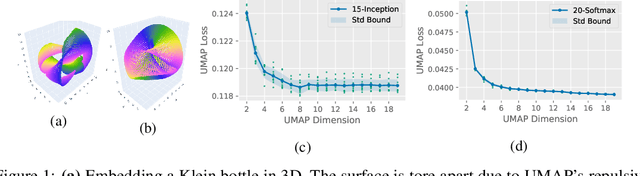

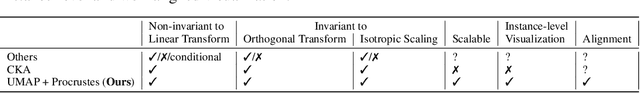

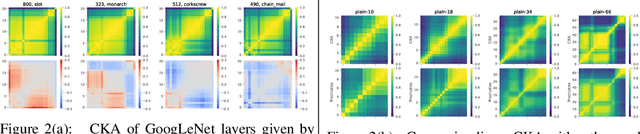

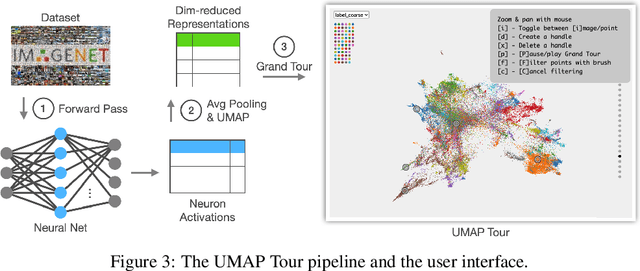

Neural networks should be interpretable to humans. In particular, there is a growing interest in concepts learned in a layer and similarity between layers. In this work, a tool, UMAP Tour, is built to visually inspect and compare internal behavior of real-world neural network models using well-aligned, instance-level representations. The method used in the visualization also implies a new similarity measure between neural network layers. Using the visual tool and the similarity measure, we find concepts learned in state-of-the-art models and dissimilarities between them, such as GoogLeNet and ResNet.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge