Compact Surjective Encoding Autoencoder for Unsupervised Novelty Detection

Paper and Code

Mar 04, 2020

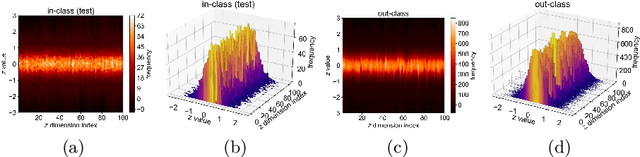

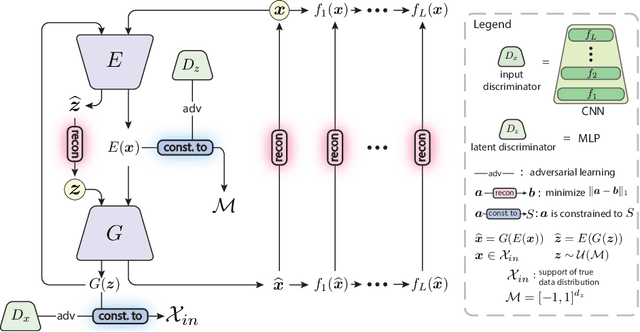

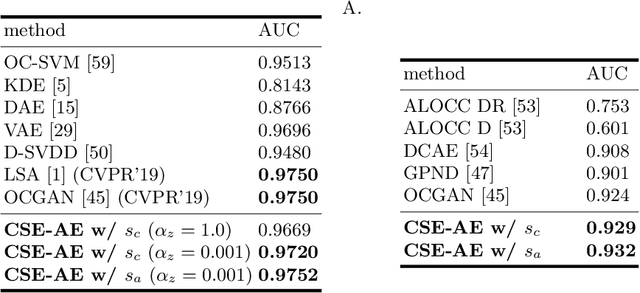

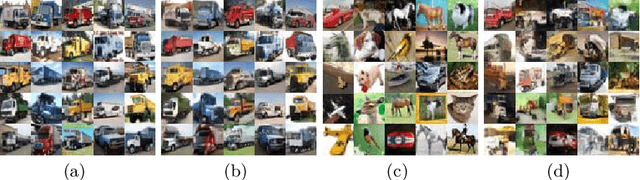

In unsupervised novelty detection, a model is trained solely on in-class data and, at inference, must single out out-class data. Autoencoder (AE) variants aim to model the in-class data compactly so that only in-class data is reconstructed well, distinguishing it from out-class data by the reconstruction error. However, imposing compactness improperly may damage in-class reconstruction and, therefore, detection performance. To solve this, we propose the Compact Surjective Encoding AE (CSE-AE). In this model, the encoding of any input is constrained to a compact manifold by exploiting the deep neural net's ignorance of the unknown. Concurrently, the in-class data is surjectively encoded onto the compact manifold via the AE. The mechanism is realized by both a GAN and its ensembled discriminative layers, and results in exclusive reconstruction of the in-class data. At inference, the reconstruction error of a query is measured using high-level semantics captured by the discriminator. Extensive experiments on image data show that the proposed model gives state-of-the-art performance.
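The inference step can be illustrated with a minimal sketch, assuming the novelty score is a squared L2 distance between feature vectors of a query and of its reconstruction. The abstract does not specify the exact distance or architecture, so the function names `novelty_score`, `toy_reconstruct`, and `toy_features` are hypothetical stand-ins for illustration, not the authors' implementation.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]


def novelty_score(
    x: Vector,
    reconstruct: Callable[[Vector], Vector],
    features: Callable[[Vector], Vector],
) -> float:
    """Squared L2 distance between the features of x and of its reconstruction.

    `reconstruct` stands in for the trained autoencoder and `features` for the
    discriminator's intermediate layers (both are assumptions of this sketch).
    """
    fx = features(x)
    fr = features(reconstruct(x))
    return sum((a - b) ** 2 for a, b in zip(fx, fr))


# Toy stand-in: an "autoencoder" that can only reproduce points on the line
# y = 0, mimicking a model that reconstructs in-class data exclusively.
def toy_reconstruct(x: Vector) -> List[float]:
    return [x[0], 0.0]


# Toy stand-in: identity features in place of learned discriminator features.
def toy_features(x: Vector) -> List[float]:
    return list(x)


in_class_query = [1.5, 0.0]   # lies on the modeled line
out_class_query = [1.5, 2.0]  # lies off the modeled line

score_in = novelty_score(in_class_query, toy_reconstruct, toy_features)
score_out = novelty_score(out_class_query, toy_reconstruct, toy_features)

# A query is flagged as novel when its score exceeds a chosen threshold.
threshold = 1.0  # arbitrary value for this toy example
is_novel = [score > threshold for score in (score_in, score_out)]
```

In this toy setting the in-class query is reconstructed perfectly and scores zero, while the out-class query is pulled back onto the modeled line and receives a larger score. With a real model, the two callables would be replaced by the trained AE and the discriminator's feature extractor.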
