Communicating Robot Conventions through Shared Autonomy


Mar 03, 2022

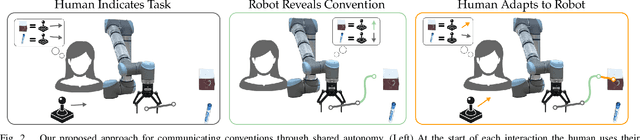

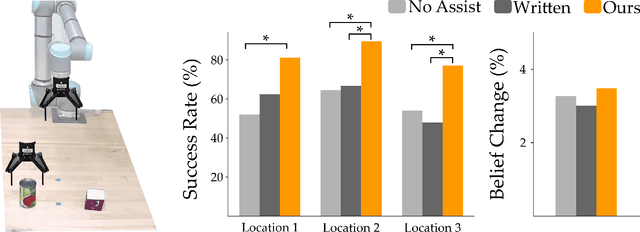

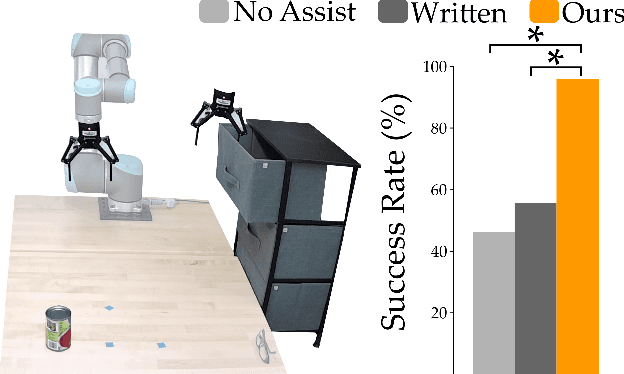

When humans control robot arms, the robots often need to infer the human's desired task. Prior research on assistive teleoperation and shared autonomy explores how robots can determine the desired task from the human's joystick inputs. To perform this inference, the robot relies on an internal mapping between joystick inputs and discrete tasks: e.g., pressing the joystick left indicates that the human wants a plate, while pressing the joystick right indicates a cup. This approach works well once the human understands how the robot interprets their inputs, but inexperienced users still have to learn these mappings through trial and error. Here we recognize that the robot's mapping between tasks and inputs is a convention. There are multiple, equally efficient conventions that the robot could use: rather than passively waiting for the human, we introduce a shared autonomy approach where the robot actively reveals its chosen convention. Across repeated interactions, the robot intervenes and exaggerates the arm's motion to demonstrate more efficient inputs while also assisting with the current task. We compare this approach to a state-of-the-art baseline, in which users must identify the convention by themselves, as well as to written instructions. Our user study results indicate that modifying the robot's behavior to reveal its convention outperforms the baselines and reduces the amount of time that humans spend controlling the robot. See videos of our user study here: https://youtu.be/jROTVOp469I
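
To make the idea of a convention concrete, the sketch below shows one way a robot might infer a discrete task from a joystick input. It is an illustration only, not the method from the paper: the `CONVENTION` table, the `update_belief` function, the exponential likelihood, and the `beta` parameter are all assumptions introduced here. The plate/cup example mirrors the one in the abstract.

```python
import math

# Hypothetical convention: each task is associated with a joystick direction (x, y).
# These names and values are illustrative assumptions, not taken from the paper.
CONVENTION = {
    "plate": (-1.0, 0.0),  # joystick left
    "cup": (1.0, 0.0),     # joystick right
}


def update_belief(belief, joystick, convention=CONVENTION, beta=3.0):
    """Return the Bayesian posterior over tasks after one joystick input.

    Assumed likelihood: P(input | task) is proportional to
    exp(beta * <input, direction assigned to task>), so inputs aligned with
    a task's direction make that task more probable.
    """
    unnormalized = {}
    for task, prior in belief.items():
        dx, dy = convention[task]
        alignment = joystick[0] * dx + joystick[1] * dy
        unnormalized[task] = prior * math.exp(beta * alignment)
    total = sum(unnormalized.values())
    return {task: value / total for task, value in unnormalized.items()}


# Start from a uniform belief, then observe the human pressing left.
belief = {"plate": 0.5, "cup": 0.5}
belief = update_belief(belief, (-1.0, 0.0))
```

Under this assumed model, swapping the two directions in `CONVENTION` gives a different convention that is equally efficient. A user who does not know which one the robot has chosen can only find out through trial and error, unless the robot reveals it.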
