Combining band-frequency separation and deep neural networks for optoacoustic imaging


Oct 14, 2022

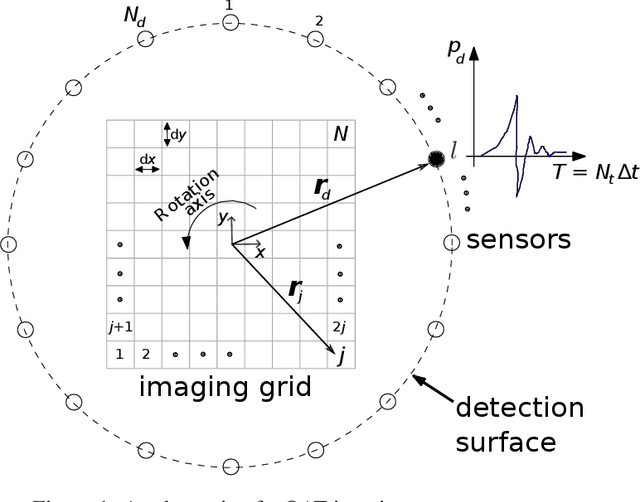

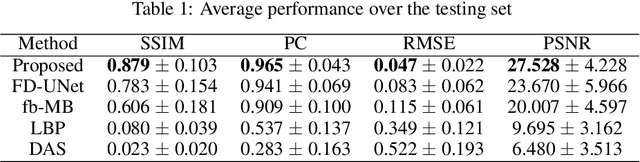

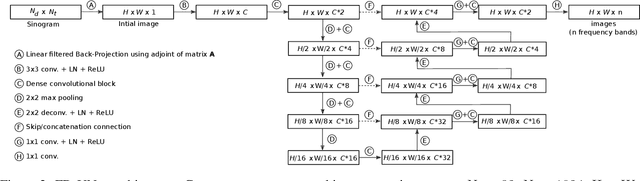

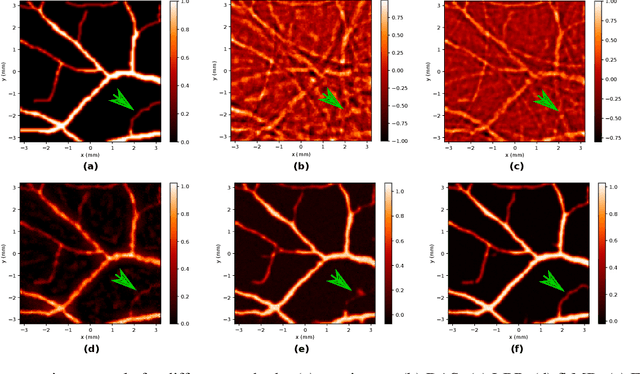

In this paper, we consider the problem of image reconstruction in optoacoustic tomography. In particular, we devise a deep neural architecture that can explicitly take into account the band-frequency information contained in the sinogram. This is accomplished by two means. First, we jointly use a linear filtered back-projection method and a fully dense UNet to generate the images corresponding to each of the frequency bands considered in the separation. Second, to train the model, we introduce a special loss function consisting of three terms: (i) a frequency-band separation term; (ii) a sinogram-based consistency term; and (iii) a term that directly measures the quality of the image reconstruction and takes advantage of the ground-truth images present in the training dataset. Numerical experiments show that the proposed model, which is easily trained by standard optimization methods, exhibits excellent generalization performance as quantified by a number of metrics commonly used in practice. Also, in the testing phase, our solution has comparable (in some cases lower) computational complexity, which is a desirable feature for real-time implementation of optoacoustic imaging.
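The abstract does not specify how the sinogram is split into frequency bands or the exact form of the three loss terms. As a minimal sketch, assuming ideal (rectangular) FFT masks along the time axis for the separation and mean-squared-error stand-ins for every loss term, the Python/NumPy code below illustrates both ideas. The function names, the band edges, the sampling rate, and the matrix `A` used as a linear forward operator are all hypothetical and are not taken from the paper.

```python
import numpy as np


def split_sinogram_bands(sino, fs, edges):
    """Split a sinogram (detectors x time samples) into frequency bands.

    Hypothetical helper: uses ideal rectangular masks on the real FFT along
    the time axis. edges must start at 0 and end at the Nyquist rate fs/2,
    so the masks partition the spectrum and the bands sum back to `sino`.
    """
    n_t = sino.shape[-1]
    spec = np.fft.rfft(sino, axis=-1)
    freqs = np.fft.rfftfreq(n_t, d=1.0 / fs)
    bands = []
    for i, (lo, hi) in enumerate(zip(edges[:-1], edges[1:])):
        # The last band also includes its upper edge so every bin is used once.
        if i == len(edges) - 2:
            mask = (freqs >= lo) & (freqs <= hi)
        else:
            mask = (freqs >= lo) & (freqs < hi)
        bands.append(np.fft.irfft(spec * mask, n=n_t, axis=-1))
    return np.stack(bands)  # shape: (n_bands, n_detectors, n_t)


def mse(a, b):
    return float(np.mean((a - b) ** 2))


def three_term_loss(band_preds, band_targets, img_pred, img_true, A, sino,
                    weights=(1.0, 1.0, 1.0)):
    """Hypothetical weighted sum mirroring the three terms named in the abstract.

    band_preds, band_targets: per-band images, shape (n_bands, n_pixels)
    img_pred, img_true: flattened images, shape (n_pixels,)
    A: stand-in linear forward operator, shape (n_measurements, n_pixels)
    sino: flattened measured sinogram, shape (n_measurements,)
    """
    w_band, w_sino, w_img = weights
    l_band = mse(band_preds, band_targets)  # (i) frequency-band separation term
    l_sino = mse(A @ img_pred, sino)        # (ii) sinogram consistency term
    l_img = mse(img_pred, img_true)         # (iii) ground-truth image term
    return w_band * l_band + w_sino * l_sino + w_img * l_img


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fs = 40e6  # assumed sampling rate in Hz
    sino = rng.standard_normal((8, 256))
    bands = split_sinogram_bands(sino, fs, [0, 2e6, 6e6, fs / 2])
    print(bands.shape, np.allclose(bands.sum(axis=0), sino))
```

Rectangular masks are chosen here only because they partition the spectrum exactly, so the band sinograms add back up to the input; smoother filters would reduce ringing, but they would have to be designed to sum to one across bands if the decomposition is meant to be additive.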
