Collaborative Inference for AI-Empowered IoT Devices


Jul 24, 2022

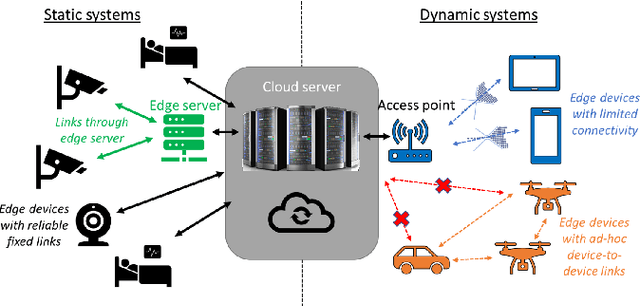

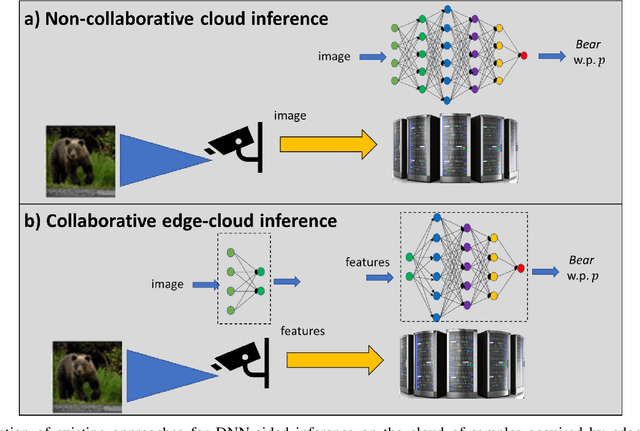

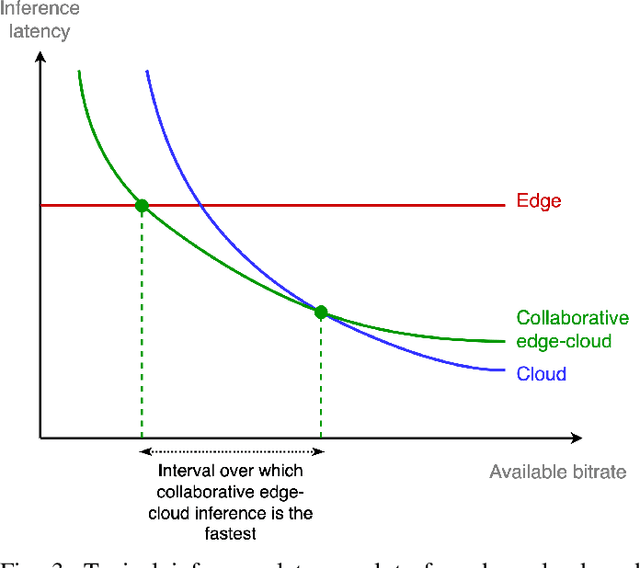

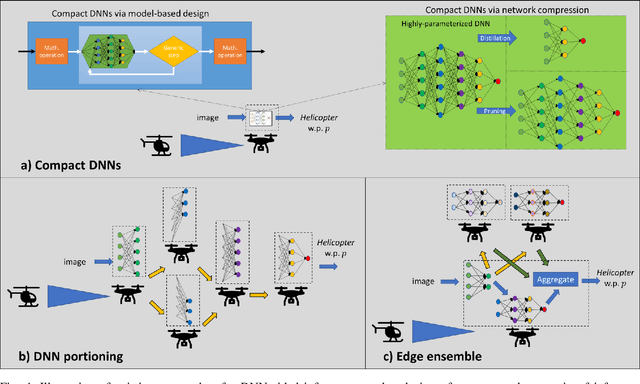

Artificial intelligence (AI) technologies, and particularly deep learning systems, are traditionally the domain of large-scale cloud servers, which have access to high computational and energy resources. Nonetheless, in Internet-of-Things (IoT) networks, the interface with the real world is carried out by edge devices that have limited hardware resources but are capable of communicating. The conventional approach to applying AI to data collected by edge devices is to send the samples to the cloud, which costs latency and communication resources and raises connectivity and privacy concerns. Consequently, recent years have witnessed a growing interest in enabling AI-aided inference on edge devices by leveraging their communication capabilities to establish collaborative inference. This article reviews candidate strategies for facilitating the transition of AI to IoT devices via collaboration. We identify the need to operate under different mobility and connectivity constraints as a motivating factor for considering multiple schemes, which can be roughly divided into methods where inference is done remotely, i.e., on the cloud, and those that infer on the edge. We identify the key characteristics of each strategy in terms of inference accuracy, communication latency, privacy, and connectivity requirements, and provide a systematic comparison between existing approaches. We conclude by presenting future research challenges and opportunities arising from the concept of collaborative inference.
