Cold Posteriors Improve Bayesian Medical Image Post-Processing


Jul 12, 2021

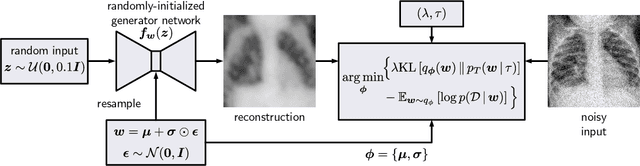

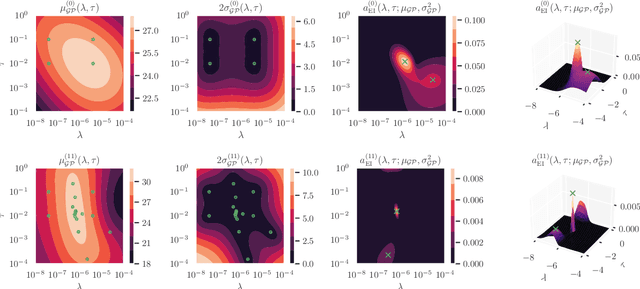

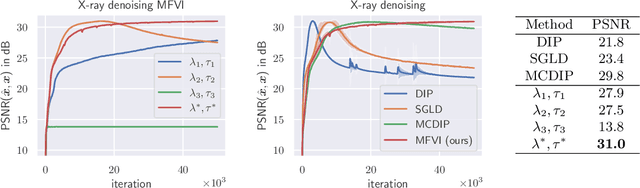

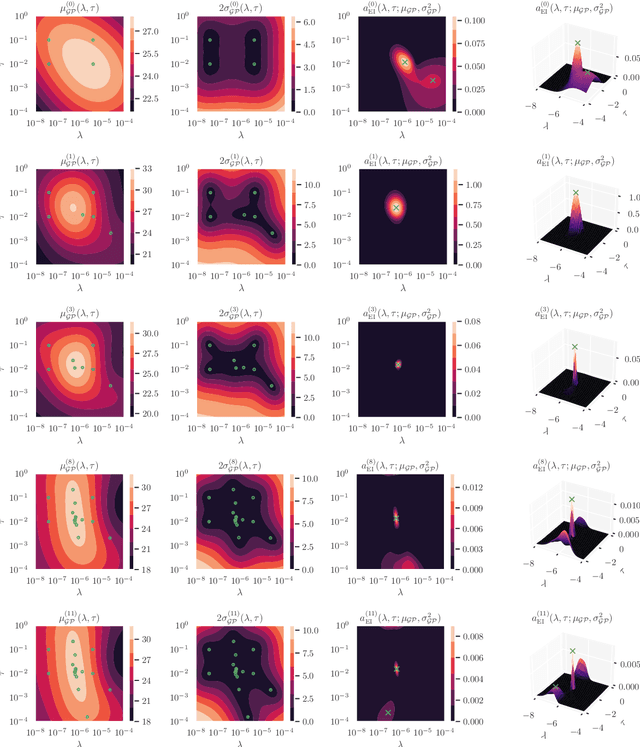

Cold posteriors have been reported to perform better in practice in the context of Bayesian deep learning (Wenzel et al., 2020). In variational inference, it is common to employ only a partially tempered posterior by scaling the complexity term in the log-evidence lower bound (ELBO). In this work, we optimize the ELBO for a fully tempered posterior in mean-field variational inference and use Bayesian optimization to automatically find the optimal posterior temperature and prior scale. Choosing an appropriate posterior temperature leads to better predictive performance and improved uncertainty calibration, which we demonstrate for the task of denoising medical X-ray images.
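To make the distinction between partial and full tempering concrete, here is a short derivation sketch. It is the standard variational argument and is not necessarily the exact formulation used in the paper. Define the fully tempered posterior as $p_T(\theta \mid D) \propto \big(p(D \mid \theta)\,p(\theta)\big)^{1/T}$, where a cold posterior has $T < 1$. Multiplying $\mathrm{KL}(q \,\|\, p_T)$ by $-T$ and dropping terms that do not depend on $q$ gives the objective

$$
\mathcal{L}_T(q) = \mathbb{E}_q[\log p(D \mid \theta)] + \mathbb{E}_q[\log p(\theta)] + T\,\mathbb{H}[q],
$$

so the temperature weights only the entropy. The partially tempered objective $\mathbb{E}_q[\log p(D \mid \theta)] - \lambda\,\mathrm{KL}(q \,\|\, p(\theta))$ instead scales the prior term and the entropy together, which corresponds to tempering only the likelihood. The NumPy sketch below computes both objectives for a mean-field Gaussian $q = \mathcal{N}(\mu, \mathrm{diag}(\sigma^2))$. It assumes a zero-mean Gaussian prior $\mathcal{N}(0, s^2 I)$ with $s$ as the "prior scale" and a user-supplied log-likelihood; the function names are hypothetical, and this is not the authors' implementation.

```python
import numpy as np

LOG_2PI = np.log(2.0 * np.pi)


def gaussian_entropy(log_sigma):
    """H[q] for a mean-field Gaussian q = N(mu, diag(sigma^2))."""
    return float(np.sum(log_sigma + 0.5 * (LOG_2PI + 1.0)))


def expected_log_prior(mu, log_sigma, prior_scale):
    """Closed-form E_q[log N(theta; 0, prior_scale^2 I)] (assumed Gaussian prior)."""
    sigma2 = np.exp(2.0 * log_sigma)
    return float(np.sum(-0.5 * LOG_2PI - np.log(prior_scale)
                        - (mu ** 2 + sigma2) / (2.0 * prior_scale ** 2)))


def kl_to_prior(mu, log_sigma, prior_scale):
    """KL(q || p) between the mean-field Gaussian and the zero-mean Gaussian prior."""
    sigma2 = np.exp(2.0 * log_sigma)
    return float(np.sum(np.log(prior_scale) - log_sigma
                        + (sigma2 + mu ** 2) / (2.0 * prior_scale ** 2) - 0.5))


def mc_expected_loglik(loglik_fn, mu, log_sigma, n_samples=64, rng=None):
    """Reparameterized Monte Carlo estimate of E_q[log p(D | theta)]."""
    rng = np.random.default_rng() if rng is None else rng
    eps = rng.standard_normal((n_samples, mu.size))
    thetas = mu + np.exp(log_sigma) * eps  # theta = mu + sigma * eps
    return float(np.mean([loglik_fn(theta) for theta in thetas]))


def fully_tempered_elbo(exp_loglik, mu, log_sigma, prior_scale, temperature):
    """-T * KL(q || p_T) up to a q-independent constant: T weights only H[q]."""
    return (exp_loglik
            + expected_log_prior(mu, log_sigma, prior_scale)
            + temperature * gaussian_entropy(log_sigma))


def partially_tempered_elbo(exp_loglik, mu, log_sigma, prior_scale, kl_weight):
    """Common alternative: scale the whole complexity (KL) term by kl_weight."""
    return exp_loglik - kl_weight * kl_to_prior(mu, log_sigma, prior_scale)
```

With `temperature=1` and `kl_weight=1`, both functions return the standard ELBO. Lowering `temperature` below 1 down-weights the entropy, so the optimizer favors narrower variational variances, while `prior_scale` enters only through the prior term. The abstract describes choosing the temperature and prior scale with Bayesian optimization. That outer search loop, which would treat a validation metric of each trained model as a black-box objective, is not shown here.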
