CoCoG-2: Controllable generation of visual stimuli for understanding human concept representation

Jul 20, 2024

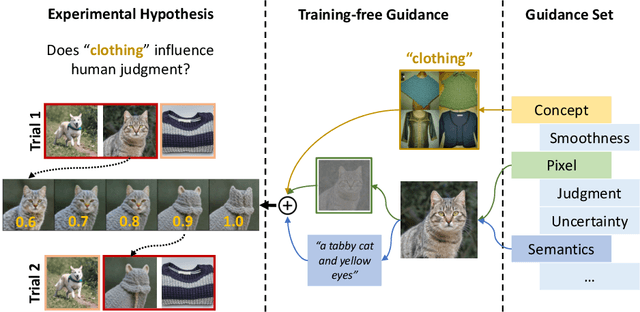

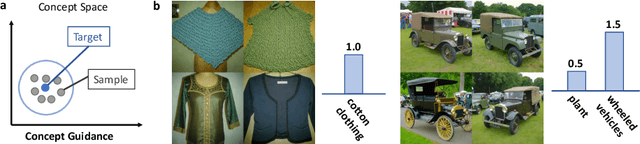

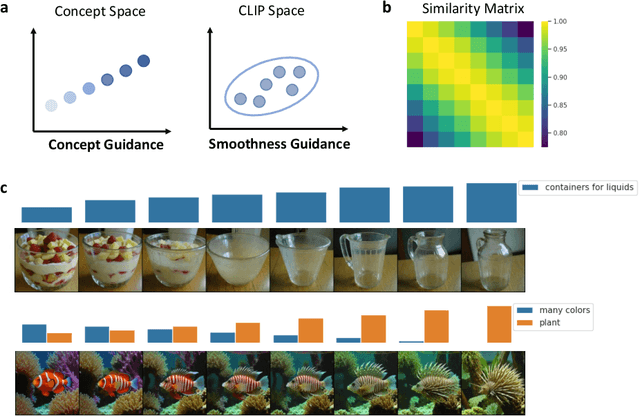

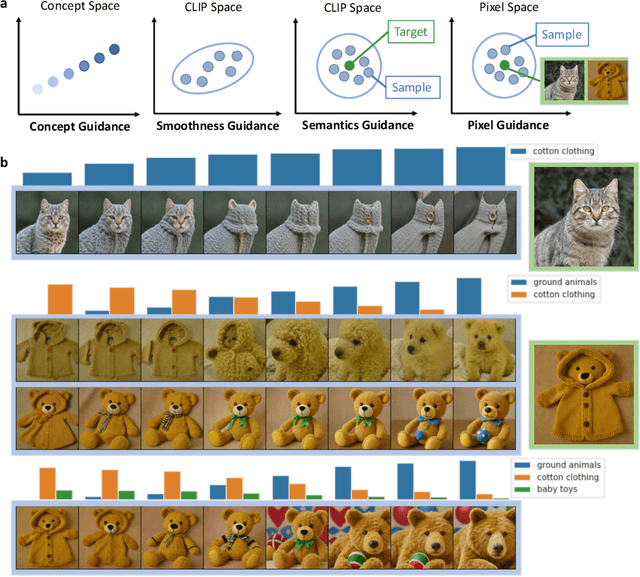

Humans interpret complex visual stimuli using abstract concepts that facilitate decision-making tasks such as food selection and risk avoidance. Similarity judgment tasks are effective for exploring these concepts. However, methods for controllable image generation in concept space are underdeveloped. In this study, we present a novel framework called CoCoG-2, which integrates generated visual stimuli into similarity judgment tasks. CoCoG-2 uses a training-free guidance algorithm to make generation more flexible. The CoCoG-2 framework is versatile: it creates experimental stimuli based on human concepts, supports various strategies for guiding visual stimulus generation, and demonstrates how these stimuli can validate various experimental hypotheses. By generating visual stimuli, CoCoG-2 will advance our understanding of the causal relationship between concept representations and behaviors. The code is available at https://github.com/ncclab-sustech/CoCoG-2.
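The idea of guiding generation toward a point in concept space without retraining can be illustrated with a toy sketch. This is not the CoCoG-2 implementation: the linear `concept_score`, the weight vector `w`, and the `guide` function are hypothetical stand-ins chosen for illustration, and a real system would apply the guidance signal inside a generative model's sampling loop rather than to a bare latent vector. The sketch only shows the core mechanism, which is to follow the gradient of a loss defined on a concept score while leaving all model weights untouched.

```python
# Toy illustration of gradient-based guidance toward a target concept score.
# Assumption: the "concept encoder" is a fixed linear map (dot product with w).
# All names here are hypothetical and are not taken from the CoCoG-2 code.

def concept_score(z, w):
    """Score of latent z on one concept dimension (fixed, never trained here)."""
    return sum(zi * wi for zi, wi in zip(z, w))


def guide(z, w, target, step=0.1, n_steps=50):
    """Move z so that its concept score approaches `target`.

    Minimises 0.5 * (score - target)^2 by plain gradient descent on z.
    Only the latent changes; the encoder weights w stay frozen.
    """
    z = list(z)
    for _ in range(n_steps):
        err = concept_score(z, w) - target
        # Gradient of 0.5 * err^2 with respect to z is err * w.
        z = [zi - step * err * wi for zi, wi in zip(z, w)]
    return z


w = [1.0, 0.5, -0.5]          # hypothetical concept direction
z0 = [0.0, 0.0, 0.0]          # starting latent
z_guided = guide(z0, w, target=2.0)
print(concept_score(z_guided, w))
```

Because the loss is defined only on the output of the frozen encoder, the same loop can target a different concept or value by changing `w` or `target`, which is the flexibility that a training-free approach aims for.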
