Coarse to Fine Multi-Resolution Temporal Convolutional Network

Paper and Code

May 23, 2021

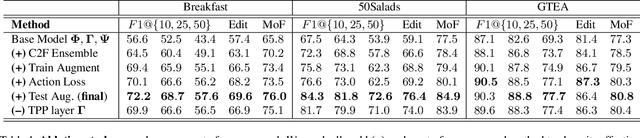

Temporal convolutional networks (TCNs) are a commonly used architecture for temporal video segmentation. TCNs however, tend to suffer from over-segmentation errors and require additional refinement modules to ensure smoothness and temporal coherency. In this work, we propose a novel temporal encoder-decoder to tackle the problem of sequence fragmentation. In particular, the decoder follows a coarse-to-fine structure with an implicit ensemble of multiple temporal resolutions. The ensembling produces smoother segmentations that are more accurate and better-calibrated, bypassing the need for additional refinement modules. In addition, we enhance our training with a multi-resolution feature-augmentation strategy to promote robustness to varying temporal resolutions. Finally, to support our architecture and encourage further sequence coherency, we propose an action loss that penalizes misclassifications at the video level. Experiments show that our stand-alone architecture, together with our novel feature-augmentation strategy and new loss, outperforms the state-of-the-art on three temporal video segmentation benchmarks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge