Closing the Dequantization Gap: PixelCNN as a Single-Layer Flow

Paper and Code

Feb 06, 2020

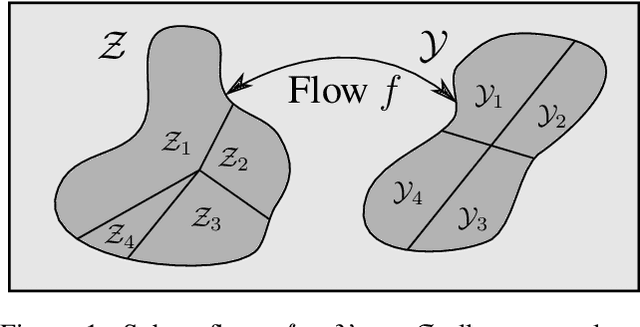

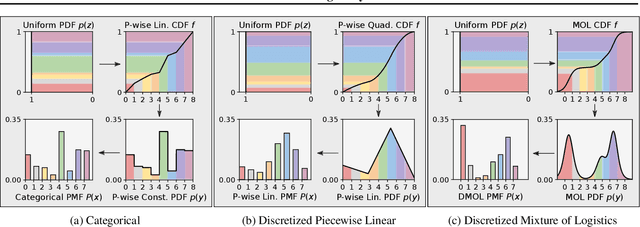

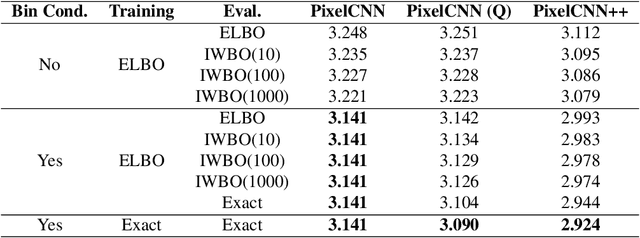

Flow models have recently made great progress at modeling quantized sensor data such as images and audio. Because flow models are continuous, dequantization is typically applied before they are fit to such quantized data. In this paper, we propose subset flows, a class of flows that can tractably transform subsets of the input space in one pass. As a result, they can be applied directly to quantized data without dequantization. Based on this class of flows, we present a novel interpretation of several existing autoregressive models, including WaveNet and PixelCNN, as single-layer flow models defined through an invertible transformation between uniform noise and data samples. This interpretation suggests that these existing models 1) admit a latent representation of data and 2) can be stacked in multiple flow layers. We demonstrate this by exploring the latent space of a PixelCNN and by stacking PixelCNNs in multiple flow layers.
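To make the "invertible transformation between uniform noise and data samples" concrete, here is a minimal sketch of one way to read an autoregressive categorical model as a flow: each dimension is pushed through its conditional CDF, so a discrete sample corresponds to a box in the unit hypercube whose volume equals the sample's probability. This is an illustration under stated assumptions, not the paper's implementation. `conditional_probs` is a hypothetical stand-in for a trained network such as a PixelCNN, and `K`, `D`, `latent_box`, `encode`, and `decode` are names chosen for this example.

```python
import numpy as np

K = 4  # quantization levels per dimension (toy value, assumption)
D = 3  # number of dimensions (toy value, assumption)

def conditional_probs(prefix):
    """Hypothetical stand-in for an autoregressive net: p(x_d | x_<d)."""
    logits = np.cos(np.arange(K) * (1.0 + sum(prefix)))
    e = np.exp(logits - logits.max())
    return e / e.sum()

def latent_box(x):
    """Map discrete x to the box [lo, hi) it occupies in uniform space."""
    lo, hi = [], []
    for d in range(len(x)):
        # Conditional CDF with a leading 0 so cdf[k] is P(x_d < k | x_<d).
        cdf = np.concatenate([[0.0], np.cumsum(conditional_probs(x[:d]))])
        lo.append(cdf[x[d]])
        hi.append(cdf[x[d] + 1])
    return np.array(lo), np.array(hi)

def encode(x, rng):
    """Data -> latent: pick a point uniformly inside the box for x."""
    lo, hi = latent_box(x)
    return lo + rng.uniform(size=len(x)) * (hi - lo)

def decode(u):
    """Latent -> data: invert the conditional CDFs one dimension at a time."""
    x = []
    for d in range(len(u)):
        cdf = np.cumsum(conditional_probs(x))
        k = np.searchsorted(cdf, u[d], side="right")
        x.append(int(min(k, K - 1)))  # guard against round-off at the top bin
    return x

x = [2, 0, 3]
u = encode(x, np.random.default_rng(0))
lo, hi = latent_box(x)
print(decode(u) == x)        # the round trip recovers the data
print(np.prod(hi - lo))      # box volume, equal to the model's P(x)
```

Decoding uniform noise `u` is ordinary ancestral sampling by inverse CDF, which is why the same model can be viewed either as a sampler or as an invertible map with a latent space.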
