Closed-loop deep learning: generating forward models with back-propagation


Jan 13, 2020

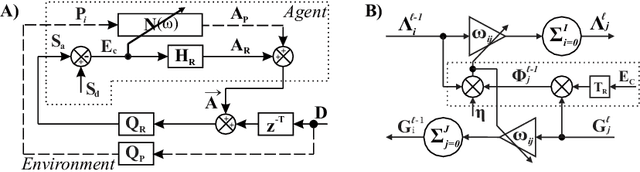

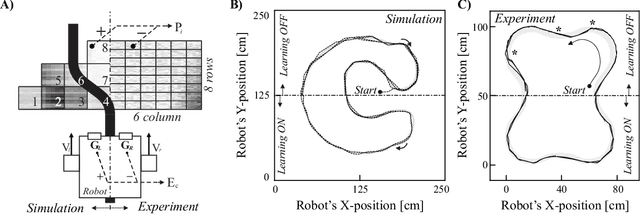

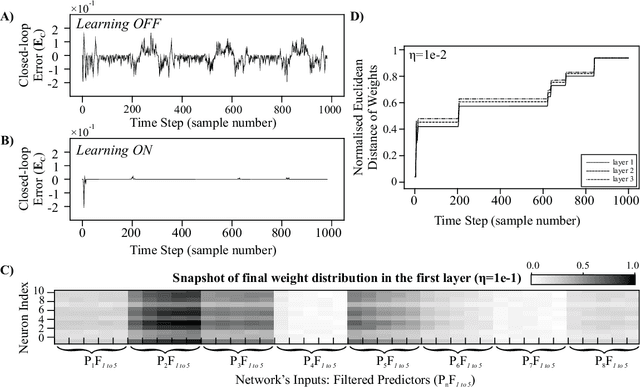

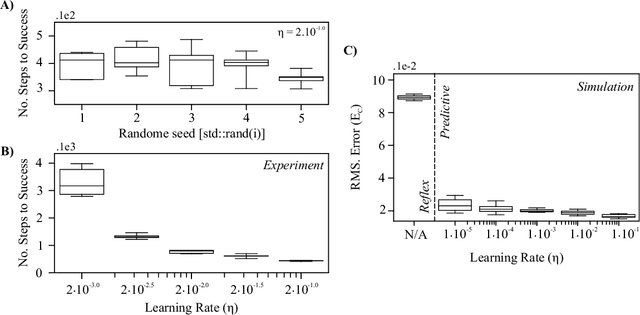

A reflex is a simple closed-loop control approach that tries to minimise an error but cannot fully do so because it always reacts too late. An adaptive algorithm can use this error to learn a forward model with the help of predictive cues. For example, a driver learns to improve their steering by looking ahead, which avoids steering at the last minute. Deep learning is a natural choice for processing complex cues such as the road ahead. However, this is usually achieved only indirectly, by employing deep reinforcement learning with a discrete state space. Here, we show how it can be achieved directly by embedding deep learning into a closed-loop system and preserving its continuous processing. Specifically, we show how error back-propagation can be achieved in z-space and, more generally, how gradient-based approaches can be analysed in such closed-loop scenarios. The performance of this learning paradigm is demonstrated using a line follower, both in simulation and on a real robot, and both show very fast and continuous learning.
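The idea of using the reflex error as a learning signal for predictive cues can be illustrated with a toy simulation. The following is a minimal sketch, not the authors' implementation: it assumes a one-dimensional plant, a made-up sinusoidal disturbance standing in for road curvature, and a single linear layer in place of a deep network, so that back-propagation reduces to the delta rule. All names and parameter values (`disturbance`, `run`, `lookahead`, `taps`, `reflex_gain`, `lr`) are hypothetical.

```python
import math


def disturbance(t):
    # Hypothetical road curvature: two superimposed sinusoids (an assumption).
    return 0.3 * math.sin(0.13 * t) + 0.2 * math.sin(0.31 * t + 1.0)


def run(steps=3000, lookahead=5, taps=10, reflex_gain=0.5, lr=0.05, learn=True):
    """Simulate a reflex loop with an optional learned anticipatory action."""
    w = [0.0] * taps  # weights of the single linear layer
    x = 0.0           # deviation from the line, i.e. the reflex error
    errors = []
    for t in range(steps):
        # Predictive cue: a tapped delay line over the disturbance seen
        # up to `lookahead` steps before it reaches the plant.
        inputs = [disturbance(t + lookahead - j) for j in range(taps)]
        u_reflex = reflex_gain * x  # reacts only to an error that already exists
        u_learned = sum(wi * xi for wi, xi in zip(w, inputs))
        # Plant update: the disturbance pushes the agent off the line,
        # both control actions push it back.
        x = x + disturbance(t) - u_reflex - u_learned
        if learn:
            # Gradient step on 0.5 * x**2 with respect to w, treating the
            # previous error as constant: d(x)/d(w_j) = -inputs[j].
            w = [wi + lr * x * xi for wi, xi in zip(w, inputs)]
        errors.append(abs(x))
    return errors, w


def mean(values):
    return sum(values) / len(values)


errors, weights = run()
reflex_errors, _ = run(learn=False)
early_error = mean(errors[:200])
late_error = mean(errors[-200:])
reflex_late_error = mean(reflex_errors[-200:])

print("mean |error|, first 200 steps with learning:", early_error)
print("mean |error|, last 200 steps with learning: ", late_error)
print("mean |error|, last 200 steps, reflex only:  ", reflex_late_error)
```

In this sketch the reflex alone leaves a persistent error because it only acts after the deviation has occurred, whereas the learned action uses the look-ahead cue to cancel the disturbance as it arrives. A deeper network would propagate the same closed-loop error through its hidden layers instead of applying it to a single weight vector.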
