CLAMGen: Closed-Loop Arm Motion Generation via Multi-view Vision-Based RL

Paper and Code

Mar 24, 2021

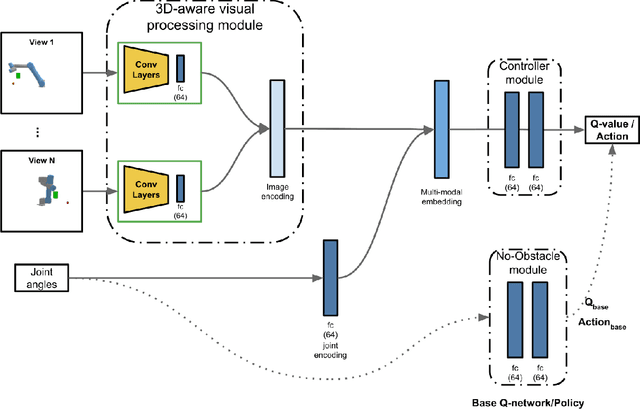

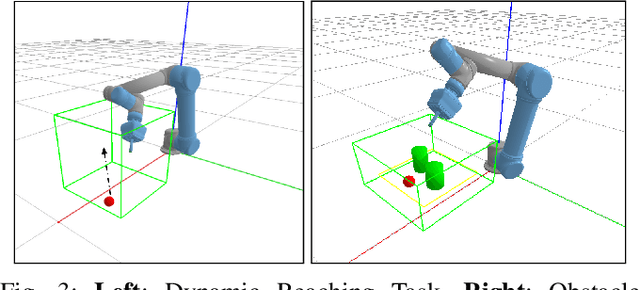

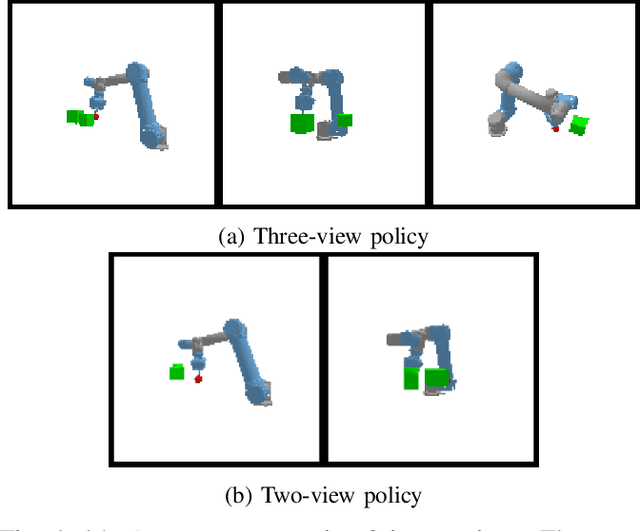

We propose a vision-based reinforcement learning (RL) approach for closed-loop trajectory generation in an arm reaching problem. Arm trajectory generation is a fundamental robotics problem which entails finding collision-free paths to move the robot's body (e.g. arm) in order to satisfy a goal (e.g. place end-effector at a point). While classical methods typically require the model of the environment to solve a planning, search or optimization problem, learning-based approaches hold the promise of directly mapping from observations to robot actions. However, learning a collision-avoidance policy using RL remains a challenge for various reasons, including, but not limited to, partial observability, poor exploration, low sample efficiency, and learning instabilities. To address these challenges, we present a residual-RL method that leverages a greedy goal-reaching RL policy as the base to improve exploration, and the base policy is augmented with residual state-action values and residual actions learned from images to avoid obstacles. Further more, we introduce novel learning objectives and techniques to improve 3D understanding from multiple image views and sample efficiency of our algorithm. Compared to RL baselines, our method achieves superior performance in terms of success rate.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge