CIKM AnalytiCup 2017 Lazada Product Title Quality Challenge: An Ensemble of Deep and Shallow Learning to predict the Quality of Product Titles

Paper and Code

Apr 01, 2018

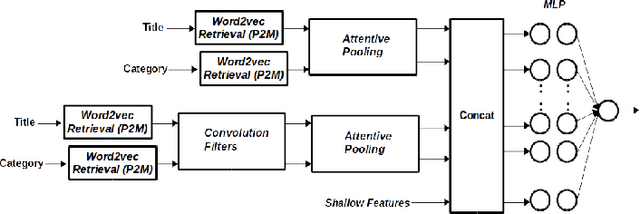

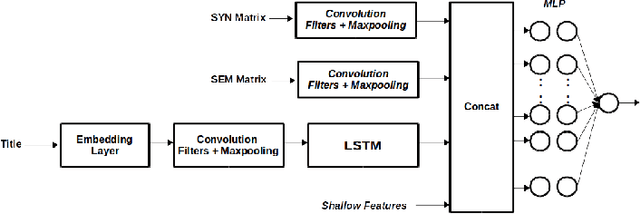

We present an approach in which two different models, one deep and one shallow, are trained separately on the data, and a weighted average of their outputs is taken as the final result. For the deep approach, we use different combinations of components such as Convolutional Neural Networks, pretrained word2vec embeddings, and LSTMs to obtain representations, which are then used to train a Deep Neural Network. For Clarity prediction, we also use an Attentive Pooling approach for the pooling operation so that the model is aware of the Title-Category pair. For the shallow approach, we use the gradient boosting framework LightGBM on features generated from the titles and categories. We find that an ensemble of these approaches performs better than either approach alone, suggesting that the results of the deep and shallow approaches are highly complementary.
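The weighted-average step described in the abstract can be sketched as follows. This is a minimal illustration, not the authors' code: the function names `blend` and `pick_weight`, the use of a single scalar weight, and the choice of RMSE on a held-out set to select that weight are all assumptions made for the example.

```python
import numpy as np


def blend(deep_pred, shallow_pred, w_deep=0.5):
    """Weighted average of two models' predicted scores.

    w_deep is the weight on the deep model; the shallow model gets 1 - w_deep.
    (Hypothetical helper; the abstract does not state the weights used.)
    """
    deep_pred = np.asarray(deep_pred, dtype=float)
    shallow_pred = np.asarray(shallow_pred, dtype=float)
    if deep_pred.shape != shallow_pred.shape:
        raise ValueError("prediction arrays must have the same shape")
    if not 0.0 <= w_deep <= 1.0:
        raise ValueError("w_deep must lie in [0, 1]")
    return w_deep * deep_pred + (1.0 - w_deep) * shallow_pred


def pick_weight(deep_pred, shallow_pred, y_true, grid=None):
    """Grid-search the blend weight that minimizes RMSE on held-out labels.

    Assumption: RMSE as the selection metric and an 11-point grid are
    illustrative choices, not taken from the abstract.
    """
    if grid is None:
        grid = np.linspace(0.0, 1.0, 11)
    y_true = np.asarray(y_true, dtype=float)
    best_w, best_rmse = None, np.inf
    for w in grid:
        err = blend(deep_pred, shallow_pred, w) - y_true
        rmse = float(np.sqrt(np.mean(err ** 2)))
        if rmse < best_rmse:
            best_w, best_rmse = float(w), rmse
    return best_w, best_rmse
```

In use, `pick_weight` would be called once on validation predictions from both models, and the returned weight passed to `blend` when scoring the test set. If the two models make different kinds of errors, the selected weight tends to fall strictly between 0 and 1, which is one way to read the complementarity the abstract reports.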
