CI-RKM: A Class-Informed Approach to Robust Restricted Kernel Machines


Apr 12, 2025

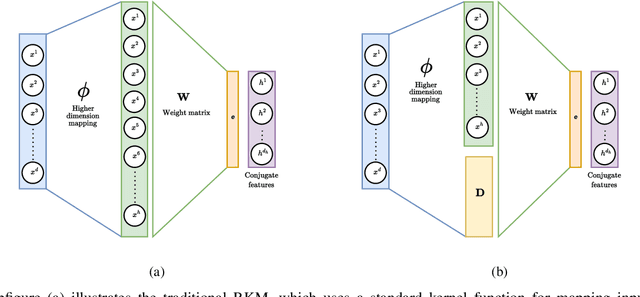

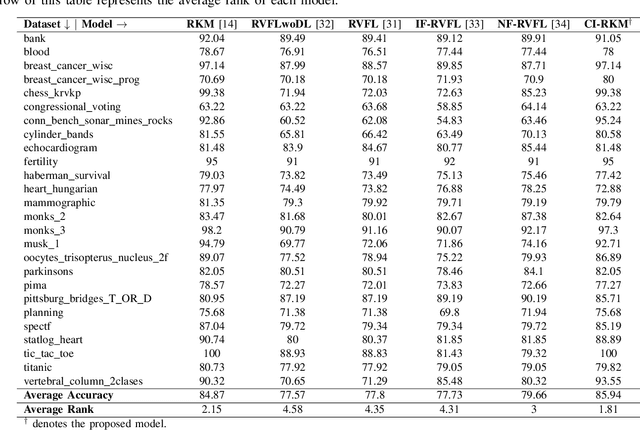

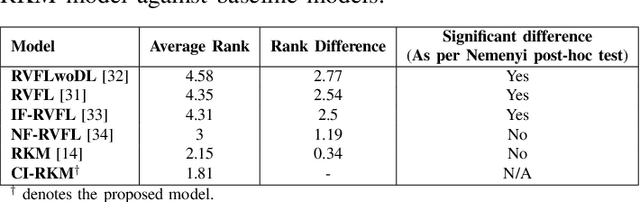

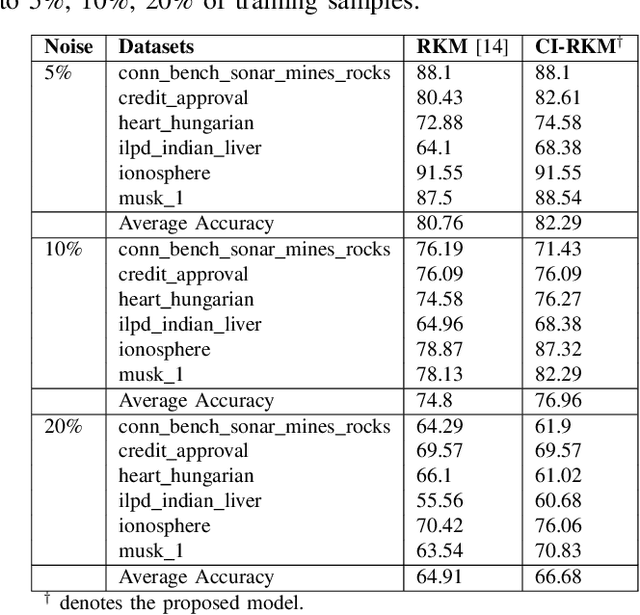

Restricted kernel machines (RKMs) are a versatile framework within the kernel machine family that uses conjugate feature duality to address a range of machine learning tasks, including classification, regression, and feature learning. However, their performance can degrade significantly in the presence of noise and outliers, reducing both robustness and predictive accuracy. In this paper, we extend the RKM framework with a class-informed weighting function. The weighting adjusts the contribution of each training point according to its proximity to the class centers and to class-specific characteristics, thereby mitigating the adverse effects of noisy and outlying samples. By incorporating weighted conjugate feature duality and applying the Schur complement theorem, we derive the class-informed restricted kernel machine (CI-RKM), a robust extension of the RKM designed to improve generalization and resilience to data imperfections. Experimental evaluations on benchmark datasets show that the proposed CI-RKM consistently outperforms existing baselines, achieving higher classification accuracy and greater robustness to noise and outliers. The method thus addresses a core challenge in kernel-based learning: robustness to imperfect training data.
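To make the idea of weighting points by proximity to their class center concrete, the sketch below computes one common distance-based weight. The abstract does not state the exact weighting function used in CI-RKM, so the formula here (one minus the normalized distance to the class mean) is an assumption chosen for illustration, and the names `class_center_weights` and `eps` are hypothetical.

```python
import numpy as np


def class_center_weights(X, y, eps=1e-12):
    """Illustrative per-sample weights from distance to the class mean.

    Assumption: weight = 1 - d_i / (r_c + eps), where d_i is the Euclidean
    distance of sample i to the mean of its class and r_c is the largest
    such distance within that class. This is NOT taken from the paper.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    weights = np.empty(X.shape[0], dtype=float)

    for label in np.unique(y):
        mask = y == label
        center = X[mask].mean(axis=0)                    # class center (mean)
        dist = np.linalg.norm(X[mask] - center, axis=1)  # distance to center
        radius = dist.max()                              # class radius
        # Points near the center get weights close to 1; the farthest
        # point in each class gets a weight close to 0.
        weights[mask] = 1.0 - dist / (radius + eps)

    return weights


if __name__ == "__main__":
    # Class 0 contains an obvious outlier at (10, 10).
    X = [[0, 0], [1, 0], [0, 1], [10, 10], [5, 5], [6, 5], [5, 6]]
    y = [0, 0, 0, 0, 1, 1, 1]
    print(class_center_weights(X, y))
```

Under this assumed scheme the outlier in class 0 receives a near-zero weight, so it would contribute little to a weighted training objective, while the three clustered points keep substantially larger weights.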
