ChemAlgebra: Algebraic Reasoning on Chemical Reactions


Oct 05, 2022

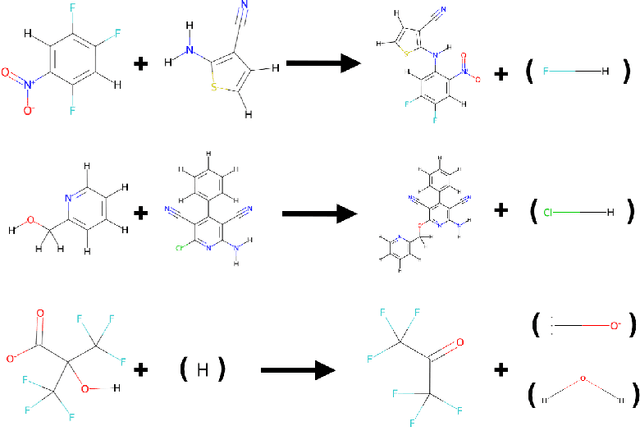

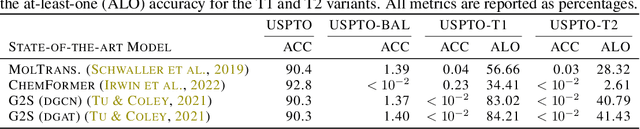

Although deep learning models show impressive performance on various kinds of learning tasks, it remains unclear whether they can robustly tackle reasoning tasks. A model may solve such tasks by exploiting shortcuts in the data rather than by learning the underlying reasoning process that is actually required to solve them. Measuring the robustness of reasoning in machine learning models is challenging, as one needs to provide a task that cannot be easily shortcut by exploiting spurious statistical correlations in the data, while operating on complex objects and constraints. To address this issue, we propose ChemAlgebra, a benchmark for measuring the reasoning capabilities of deep learning models through the prediction of stoichiometrically balanced chemical reactions. ChemAlgebra requires manipulating sets of complex discrete objects (molecules represented as formulas or graphs) under algebraic constraints such as the mass preservation principle. We believe that ChemAlgebra can serve as a useful test bed for the next generation of machine reasoning models and as a promoter of their development.
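To make the algebraic constraint concrete, the following is a minimal sketch of a stoichiometric balance check: a reaction is balanced when every element appears the same number of times on both sides. The helper names (`atom_counts`, `side_counts`, `is_balanced`) and the `(coefficient, formula)` input format are assumptions made for this illustration and are not taken from the ChemAlgebra code. The parser only handles flat formulas such as `H2O`, without parentheses or charges.

```python
import re
from collections import Counter


def atom_counts(formula: str) -> Counter:
    """Count atoms in a flat formula such as 'H2O' (no parentheses, no charges)."""
    counts = Counter()
    # Each match is an element symbol followed by an optional count.
    for element, number in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[element] += int(number) if number else 1
    return counts


def side_counts(side) -> Counter:
    """Sum atom counts over one side, given as (coefficient, formula) pairs."""
    total = Counter()
    for coefficient, formula in side:
        for element, n in atom_counts(formula).items():
            total[element] += coefficient * n
    return total


def is_balanced(reactants, products) -> bool:
    """True if every element occurs equally often on both sides."""
    return side_counts(reactants) == side_counts(products)


# 2 H2 + O2 -> 2 H2O satisfies the constraint; H2 + O2 -> H2O does not.
balanced = is_balanced([(2, "H2"), (1, "O2")], [(2, "H2O")])
unbalanced = is_balanced([(1, "H2"), (1, "O2")], [(1, "H2O")])
```

A check like this only verifies a candidate answer. The task described above is harder: the model must predict the products and coefficients so that the constraint holds.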
