Cervical Optical Coherence Tomography Image Classification Based on Contrastive Self-Supervised Texture Learning


Aug 11, 2021

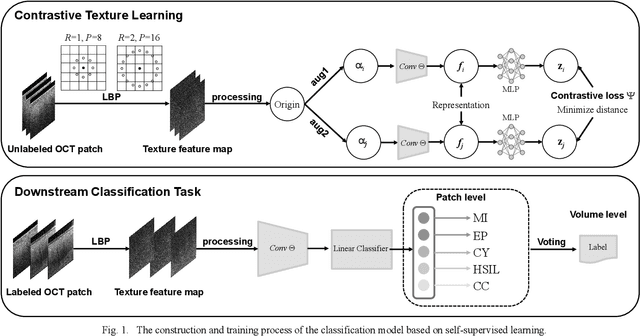

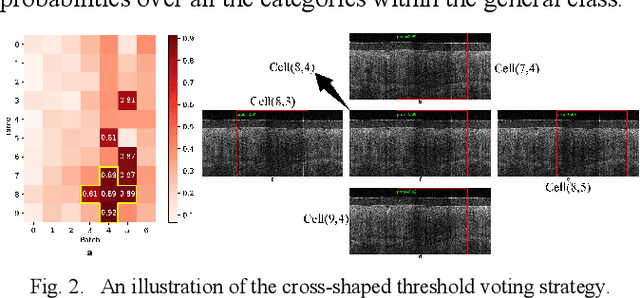

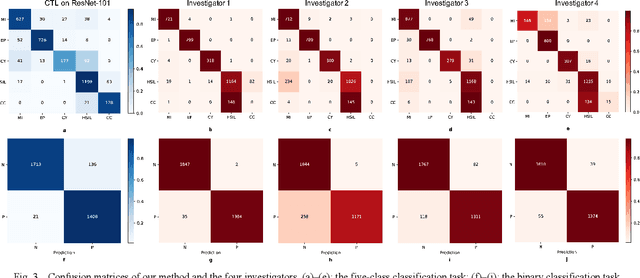

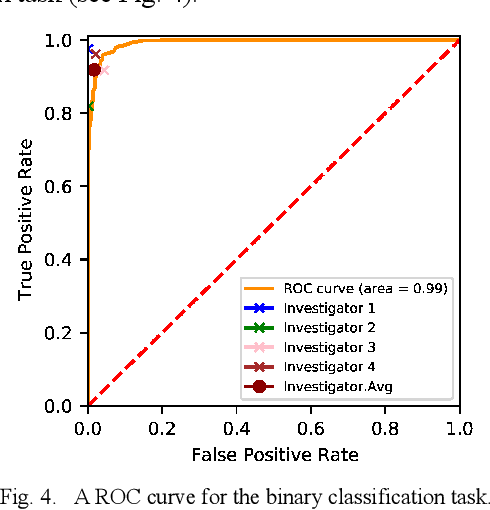

Background: Cervical cancer seriously affects the health of the female reproductive system. Optical coherence tomography (OCT) emerges as a non-invasive, high-resolution imaging technology for cervical disease detection. However, OCT image annotation is knowledge-intensive and time-consuming, which impedes the training process of deep-learning-based classification models. Objective: This study aims to develop a computer-aided diagnosis (CADx) approach to classifying in-vivo cervical OCT images based on self-supervised learning. Methods: Besides high-level semantic features extracted by a convolutional neural network (CNN), the proposed CADx approach leverages unlabeled cervical OCT images' texture features learned by contrastive texture learning. We conducted ten-fold cross-validation on the OCT image dataset from a multi-center clinical study on 733 patients from China. Results: In a binary classification task for detecting high-risk diseases, including high-grade squamous intraepithelial lesion (HSIL) and cervical cancer, our method achieved an area-under-the-curve (AUC) value of 0.9798 ± 0.0157 with a sensitivity of 91.17 ± 4.99% and a specificity of 93.96 ± 4.72% for OCT image patches; also, it outperformed two out of four medical experts on the test set. Furthermore, our method achieved a 91.53% sensitivity and 97.37% specificity on an external validation dataset containing 287 3D OCT volumes from 118 Chinese patients in a new hospital using a cross-shaped threshold voting strategy. Conclusion: The proposed contrastive-learning-based CADx method outperformed the end-to-end CNN models and provided better interpretability based on texture features, which holds great potential to be used in the clinical protocol of "see-and-treat."
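The results above are reported as sensitivity and specificity with a mean and spread across the ten cross-validation folds. As a minimal sketch of how such figures are typically computed, the Python below derives both metrics from binary labels (1 = high-risk, 0 = not high-risk) and summarizes per-fold values. The function names `sensitivity_specificity` and `summarize_folds` are hypothetical, and the use of the sample standard deviation is an assumption; the abstract does not state which spread estimator the authors used.

```python
from statistics import mean, stdev


def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels, where 1 = high-risk."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # high-risk, flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # high-risk, missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # low-risk, cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # low-risk, flagged
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


def summarize_folds(values):
    """Return (mean, sample standard deviation) of per-fold metric values.

    Assumption: sample standard deviation (n - 1 denominator); the source
    does not say which estimator was used. Requires at least two folds.
    """
    return mean(values), stdev(values)


if __name__ == "__main__":
    # Toy labels for illustration only; not data from the study.
    y_true = [1, 1, 1, 0, 0, 0, 0, 1]
    y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
    sens, spec = sensitivity_specificity(y_true, y_pred)
    print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

In a ten-fold setting, `sensitivity_specificity` would be called once per held-out fold and the ten resulting values passed to `summarize_folds` to obtain a "mean ± spread" figure of the kind quoted in the abstract.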
