Certified Robust Invariant Polytope Training in Neural Controlled ODEs

Paper and Code

Aug 02, 2024

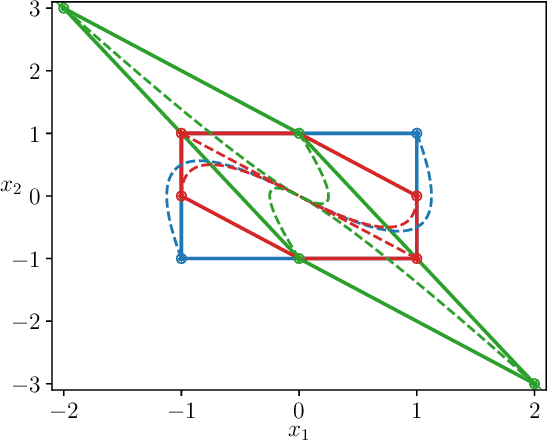

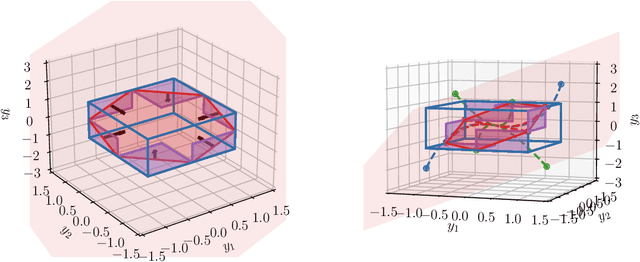

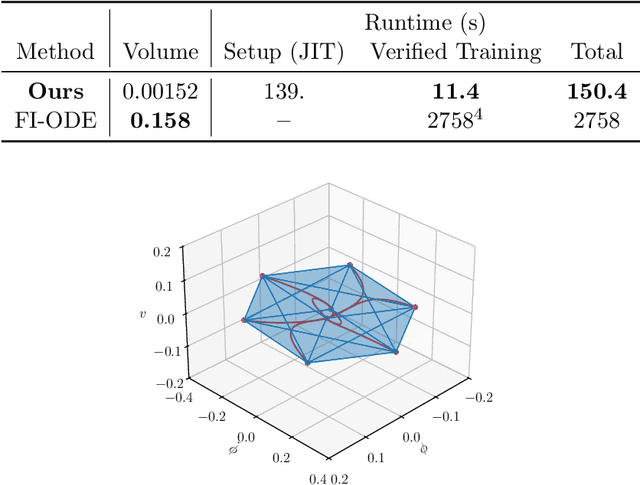

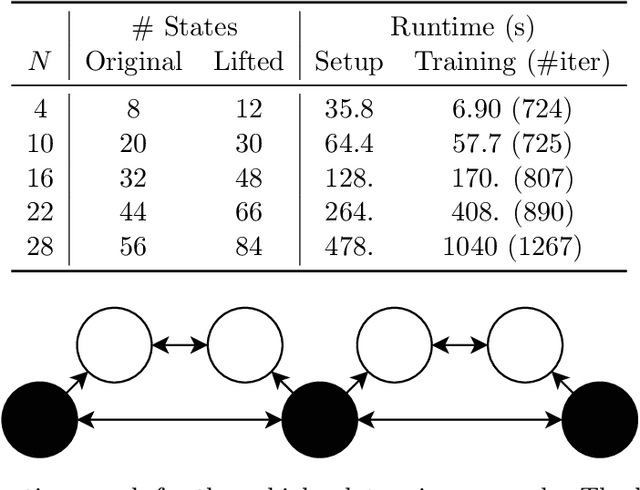

We consider a nonlinear control system modeled as an ordinary differential equation subject to disturbance, with a state feedback controller parameterized as a feedforward neural network. We propose a framework for training controllers with certified robust forward invariant polytopes, where any trajectory initialized inside the polytope remains within the polytope, regardless of the disturbance. First, we parameterize a family of lifted control systems in a higher dimensional space, where the original neural controlled system evolves on an invariant subspace of each lifted system. We use interval analysis and neural network verifiers to further construct a family of lifted embedding systems, carefully capturing the knowledge of this invariant subspace. If the vector field of any lifted embedding system satisfies a sign constraint at a single point, then a certain convex polytope of the original system is robustly forward invariant. Treating the neural network controller and the lifted system parameters as variables, we propose an algorithm to train controllers with certified forward invariant polytopes in the closed-loop control system. Through two examples, we demonstrate how the simplicity of the sign constraint allows our approach to scale with system dimension to over $50$ states, and outperform state-of-the-art Lyapunov-based sampling approaches in runtime.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge