CellSeg1: Robust Cell Segmentation with One Training Image


Dec 02, 2024

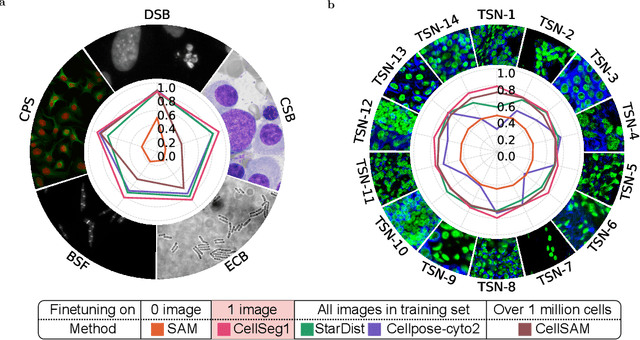

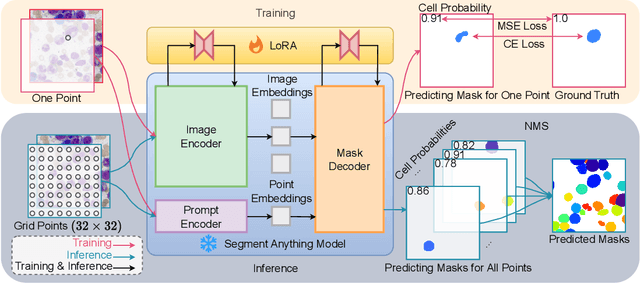

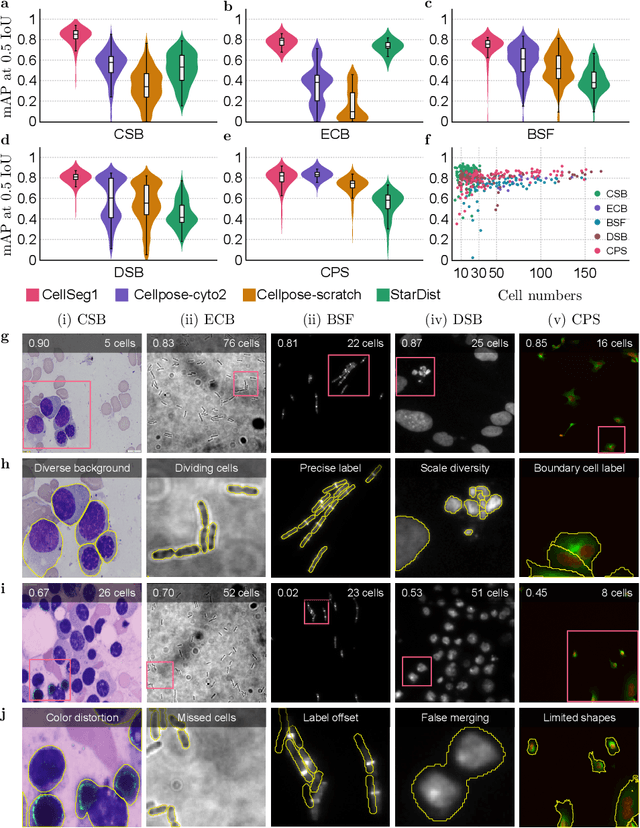

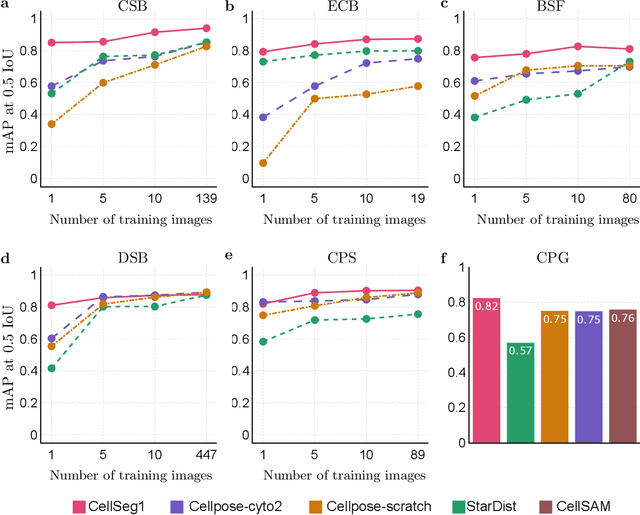

Recent trends in cell segmentation have shifted towards universal models that handle diverse cell morphologies and imaging modalities. However, for continuously emerging cell types and imaging techniques, these models still require hundreds or thousands of annotated cells for fine-tuning. We introduce CellSeg1, a practical solution for segmenting cells of arbitrary morphology and modality using a few dozen cell annotations in a single image. By adopting Low-Rank Adaptation (LoRA) of the Segment Anything Model (SAM), we achieve robust cell segmentation. Tested on 19 diverse cell datasets, CellSeg1 trained on a single image achieved an average mAP of 0.81 at an IoU threshold of 0.5, performing comparably to existing models trained on over 500 images. It also demonstrated superior generalization in cross-dataset tests on TissueNet. We found that high-quality annotation of a few dozen densely packed cells of varied sizes is key to effective segmentation. CellSeg1 provides an efficient solution for cell segmentation with minimal annotation effort.
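
To make the Low-Rank Adaptation idea concrete, the following is a minimal NumPy sketch of a single linear layer whose pretrained weight stays frozen while a small low-rank update is the only trainable part. It is not CellSeg1's code: the class name `LoRALinear`, the `rank` and `alpha` values, and the 768-dimensional layer size are all illustrative assumptions, and the abstract does not state which SAM layers are adapted or with what rank.

```python
import numpy as np


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / rank) * B @ A.

    Hypothetical illustration of the general LoRA technique, not CellSeg1's implementation.
    """

    def __init__(self, weight, rank=4, alpha=4.0, seed=0):
        rng = np.random.default_rng(seed)
        out_dim, in_dim = weight.shape
        self.weight = weight  # pretrained weight, kept frozen
        # A is small random, B is zero, so the update is exactly zero before training.
        self.A = rng.normal(0.0, 0.01, size=(rank, in_dim))  # trainable
        self.B = np.zeros((out_dim, rank))                   # trainable
        self.scale = alpha / rank

    def forward(self, x):
        # x has shape (batch, in_dim); output has shape (batch, out_dim).
        frozen = x @ self.weight.T
        update = self.scale * (x @ self.A.T) @ self.B.T
        return frozen + update

    def trainable_params(self):
        return self.A.size + self.B.size


rng = np.random.default_rng(1)
W = rng.normal(size=(768, 768))   # stand-in for one pretrained projection matrix
layer = LoRALinear(W, rank=4)
x = rng.normal(size=(2, 768))
y = layer.forward(x)
```

In this sketch the layer trains 6,144 parameters against 589,824 frozen ones, roughly 1% of the original matrix, which is the general reason low-rank adaptation can be fitted from very few annotations without overwriting the pretrained model.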
