CECILIA: Comprehensive Secure Machine Learning Framework

Paper and Code

Feb 07, 2022

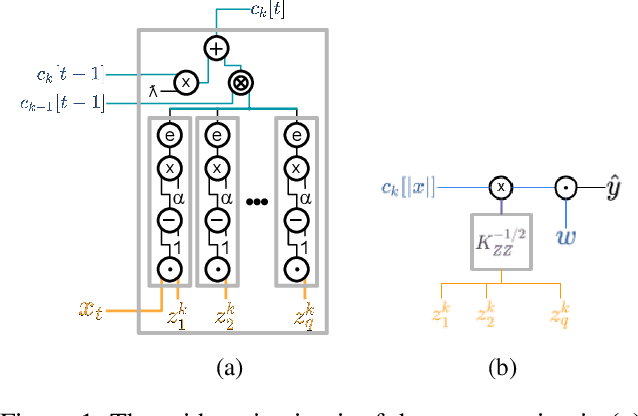

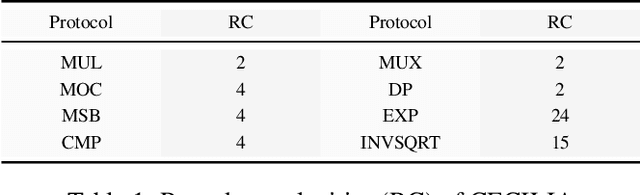

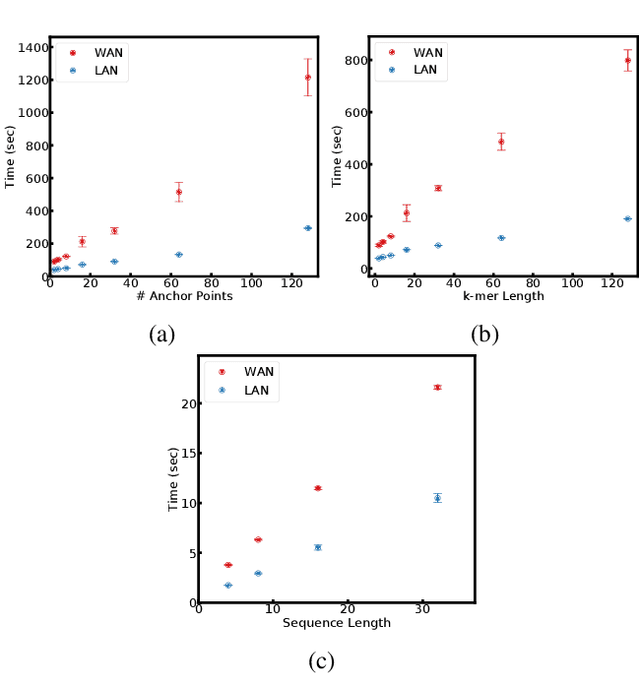

Machine learning algorithms have proven successful in data mining tasks, and their application to data containing sensitive information has driven the emergence of privacy-preserving machine learning algorithms. At the same time, the growing number of data sources and the high computational power these algorithms require push individuals to outsource the training and/or inference of a machine learning model to cloud providers. To address this dilemma, we propose CECILIA, a secure 3-party computation framework that offers privacy-preserving building blocks for performing more complex operations privately. Among these building blocks are two novel methods: the exact exponential of a public base raised to the power of a secret value, and the inverse square root of a secret Gram matrix. We employ CECILIA to realize private inference on pre-trained recurrent kernel networks, which require more complex operations than other deep neural networks such as convolutional neural networks, for the structural classification of proteins. This is the first study to accomplish privacy-preserving inference on recurrent kernel networks. The results demonstrate that CECILIA performs the exponential computation exactly and fully privately, whereas the literature has so far relied on approximation. Moreover, it computes the exact inverse square root of a secret Gram matrix up to a certain privacy level, a problem that has not been addressed in the literature at all. We also analyze the scalability of CECILIA in various settings on a synthetic dataset. The framework shows great promise for making other machine learning algorithms, as well as further computations, privately computable using its building blocks.
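The abstract does not describe how secret values are represented. One common foundation for secure multi-party computation frameworks is additive secret sharing, and the sketch below illustrates that general idea only. It is not CECILIA's protocol: the 64-bit ring, the two share holders, and all function names are assumptions made for illustration.

```python
import secrets
from typing import Tuple

# Assumption for this sketch: values live in the ring of integers modulo 2**64.
RING_BITS = 64
MOD = 1 << RING_BITS

Shares = Tuple[int, int]


def share(x: int) -> Shares:
    """Split x into two additive shares; each share alone looks uniformly random."""
    s0 = secrets.randbelow(MOD)
    s1 = (x - s0) % MOD
    return s0, s1


def reconstruct(shares: Shares) -> int:
    """Recover the secret by adding both shares modulo 2**64."""
    return (shares[0] + shares[1]) % MOD


def add_shares(a: Shares, b: Shares) -> Shares:
    """Add two shared values; each party only touches its own share."""
    return (a[0] + b[0]) % MOD, (a[1] + b[1]) % MOD


if __name__ == "__main__":
    x = share(25)
    y = share(17)
    print(reconstruct(add_shares(x, y)))
```

Addition works locally on shares, which is why it is cheap in such schemes. Nonlinear operations such as the exponential and the inverse square root named in the abstract do not decompose this way, which is what makes dedicated building blocks for them necessary.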
