Can Requirements Engineering Support Explainable Artificial Intelligence? Towards a User-Centric Approach for Explainability Requirements

Paper and Code

Jun 03, 2022

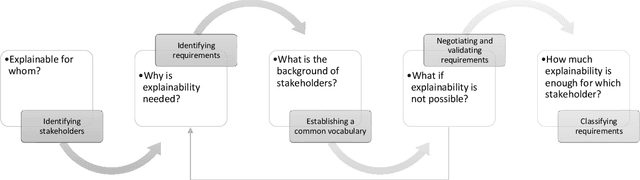

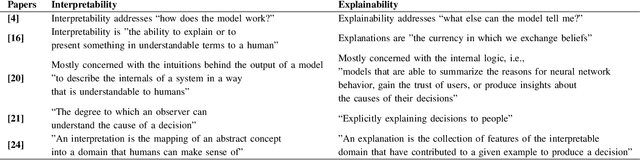

With the recent proliferation of artificial intelligence systems, there has been a surge in the demand for explainability of these systems. Explanations help to reduce system opacity, support transparency, and increase stakeholder trust. In this position paper, we discuss synergies between requirements engineering (RE) and Explainable AI (XAI). We highlight challenges in the field of XAI, and propose a framework and research directions on how RE practices can help to mitigate these challenges.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge