Can No-reference features help in Full-reference image quality estimation?


Mar 02, 2022

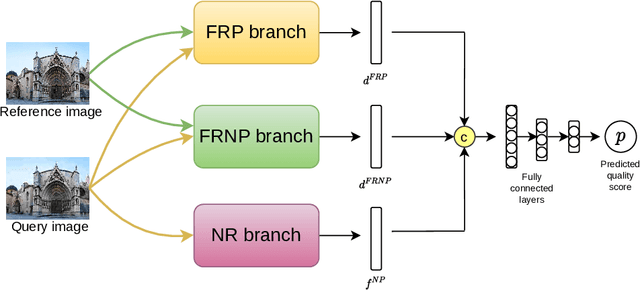

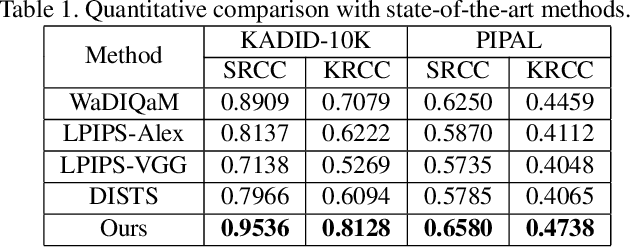

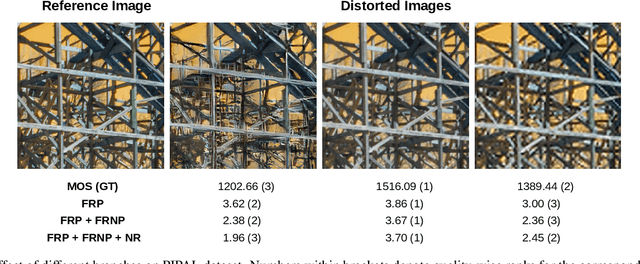

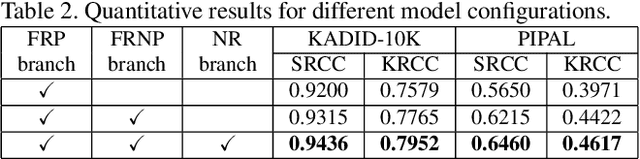

The development of perceptual image quality assessment (IQA) metrics has been of significant interest to the computer vision community. The aim of these metrics is to model the quality of an image as perceived by humans. Recent works in full-reference IQA perform pixelwise comparison between deep features of the query and reference images to predict quality. However, pixelwise feature comparison may not be meaningful if the distortion in the query image is severe. In this context, we explore the use of no-reference features in the full-reference IQA task. Our model consists of both full-reference and no-reference branches. The full-reference branches use both the distorted and reference images, whereas the no-reference branch uses only the distorted image. Our experiments show that using no-reference features improves image quality assessment performance. Our model achieves higher SRCC and KRCC scores than a number of state-of-the-art algorithms on the KADID-10K and PIPAL datasets.
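For readers unfamiliar with the two reported metrics, the following is a minimal sketch of how SRCC (Spearman rank correlation) and KRCC (Kendall rank correlation) between predicted quality scores and human opinion scores can be computed. It is not the authors' evaluation code. The function names (`average_ranks`, `pearson`, `srcc`, `krcc`) are hypothetical, and the Kendall variant shown is tau-a, which assumes no special handling of ties; library implementations often default to tau-b instead.

```python
from itertools import combinations


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson linear correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    std_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (std_x * std_y)


def srcc(predicted, opinion):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(predicted), average_ranks(opinion))


def krcc(predicted, opinion):
    """Kendall tau-a: (concordant - discordant) / total number of pairs."""
    concordant = discordant = 0
    for i, j in combinations(range(len(predicted)), 2):
        sign = (predicted[i] - predicted[j]) * (opinion[i] - opinion[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    n_pairs = len(predicted) * (len(predicted) - 1) // 2
    return (concordant - discordant) / n_pairs
```

Both metrics depend only on the ordering of the scores, so they reward a model for ranking images the way human raters do, regardless of the scale of its outputs.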
